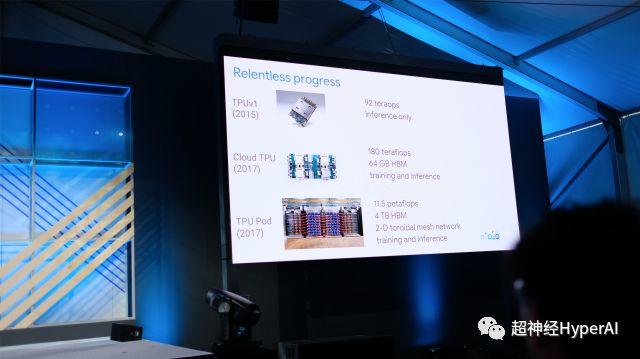

At the opening of Google I/O 2018, Sundar Pichai briefly introduced Google's use of artificial intelligence in medical diagnosis and treatment and in audio and video processing. As expected, he gave center stage for the whole conference to the TPU: this tensor processor, built by Google for deep learning, has become the company's strongest muscle in artificial intelligence.

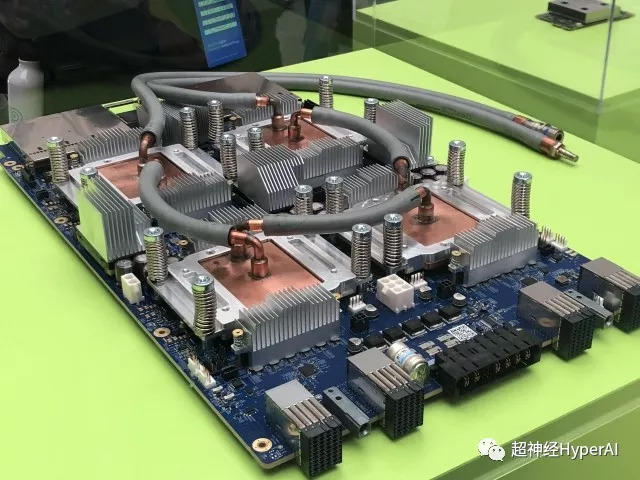

Compared with the TPUv2, which was released last year but is not yet generally available, the TPUv3 delivers a significant performance improvement, with a peak computing power eight times that of version 2.0. That improvement comes at a considerable cost: the chips generate so much heat that engineers had to replace the heat sink with copper tubing. As you might expect, Sundar Pichai also acknowledged that this generation of TPU is liquid-cooled.

The TPUv2 has a peak computing power of 180 TFLOPS and 64 GB of HBM, and it adds training capability compared with the first-generation TPU. Zak Stone, who presented the TPU breakout session, demonstrated training several models with Tensor2Tensor, and the performance was indeed impressive. In a question to Zak Stone after the session, Super Neuro asked how well the TPU works with other development frameworks. Unfortunately, but as expected, the TPU supports only the TensorFlow toolchain and does not support other frameworks such as PyTorch. Zak also mentioned that the open source project Tensor2Tensor is progressing very fast ("very faaaast").

Frank Chen, a Google engineer at the same breakout session, told Super Neuro that version 3.0 of the TPU does have a "slight increase" in power consumption. He added that communication between TPU Pods in the data center runs over highly customized cables rather than fiber solutions such as InfiniBand. The ample bandwidth and memory improve the capabilities of the whole cluster, but they also add to the energy burden. When asked whether developers would get access to version 3.0 this year, he gave a strained smile and said, "I hope so."

Well, whose service doesn't slip? But wait: if 3.0 can't be used right away, when can TPUv2 be used without applying for quota? Zak Stone said that as soon as he gets back to the office, every developer whose application is waiting for review will receive an email and be granted access to the service.

Well, let's hope Zak hurries back to his desk soon.

Historical articles (click on the image to read)

Google I/O, known as the "Spring Festival Gala for Programmers"

What big killers have been released?

"Japanese people have strange ideas, and even their AI work is not serious"

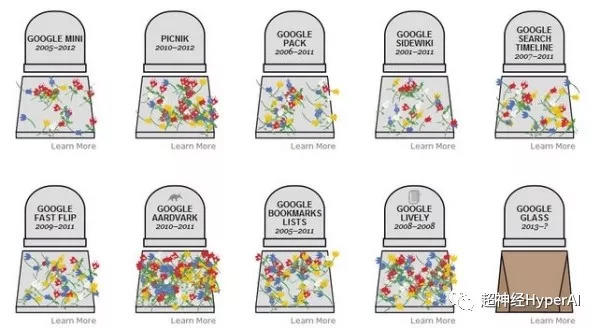

"A detailed list of the failed products released at Google I/O"》