Command Palette

Search for a command to run...

Deconstructing StyleCLIP: Text-driven, on-demand Design, Comparable to Human Photoshop

Everyone is familiar with StyleGAN. This new generative adversarial network released by NVIDIABy borrowing style transfer, a large number of new style-based images can be quickly generated.

StyleGAN has strong learning ability and generates images that are indistinguishable from real ones.However, this method of learning and secondary creation based on "looking at pictures" has become somewhat traditional and conservative after being used too many times.

Researchers from the Hebrew University, Tel Aviv University, and Adobe Research,Creatively combines the generative power of the pre-trained StyleGAN generator with the visual language capabilities of CLIP.Introducing a new way to modify StyleGAN images – Driven by text, whatever you "write" will generate whatever image you want..

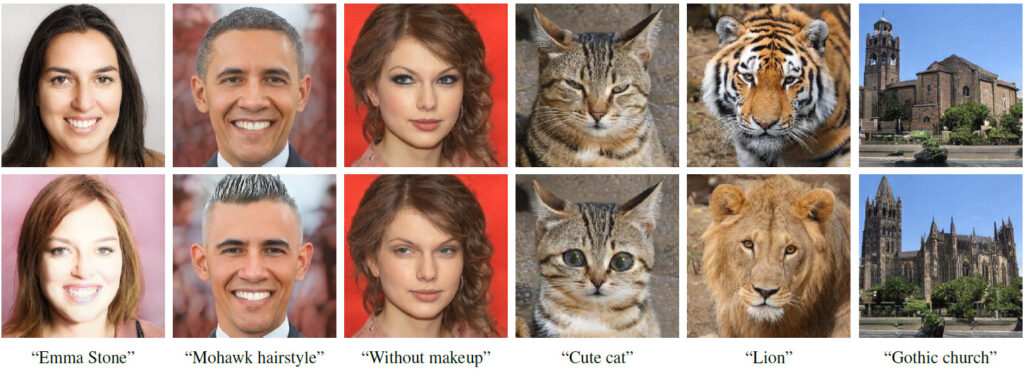

The first row is the input image, and the second row is the operation result

The text below each column of images corresponds to the text that drives the image change

Who is StyleCLIP?

StyleCLIP, as the name suggests, is a combination of StyleGAN and CLIP.

StyleGAN uses Image Inversion to represent images as Latent Code, and then controls the image style by editing and modifying the Latent Code.

CLIP stands for Contrastive Language-Image Pretraining. It is a neural network trained with 400 million image-text pairs. It can output the most relevant image based on a given text description.

In the paper, researchers studied three methods of combining StyleGAN and CLIP:

- Context-guided latent vector optimization, where the CLIP model is used as the loss network.

- Train Latent Mapper to make the latent vector correspond to the specific text one by one.

- In StyleGAN's StyleSpace, the text description is mapped to the global direction of the input image to control the intensity of image operations and the degree of separation.

Related Work

2.1 Vision and Language

Joint representationsThere are many tasks where cross-modal vision and language (VL) representations can be learned, such as text-based image retrieval, image captioning, and visual answering. With the success of BERT in various language tasks, current VL methods usually use Transformers to learn joint representations.

Text-guided image generation and processing

Train an eligible GAN to obtain text embeddings from a pre-trained encoder for text-guided image generation.

2.2 Latent Space Image Processing

StyleGAN’s intermediate Latent Space has been proven toA large number of decompositions and meaningful image processing operations can be achieved,For example, training a network to encode a given image into an embedding vector of the processed image, thereby learning to perform image processing in an end-to-end manner.

Image processing is done directly on text input, using a pre-trained CLIP model for supervision. Since CLIP is trained on hundreds of millions of text-image pairs,Therefore, this method is universal and can be used in many fields without the need for data annotation for specific fields or specific processing.

3. StyleCLIP text-driven image processing

This work explores three ways of text-driven image processing:All of these approaches combine the generative power of StyleGAN with the rich joint vision-language representation of CLIP.

4. Latent Optimization

A simple way to use CLIP to guide image processing is through direct latent code optimization.

5. Latent Mapper

Latent optimization is universal.Because it is specifically optimized for all source image-text description pairs.The downside is that editing one image can take several minutes of optimization time, and the method is somewhat sensitive to its parameter values.

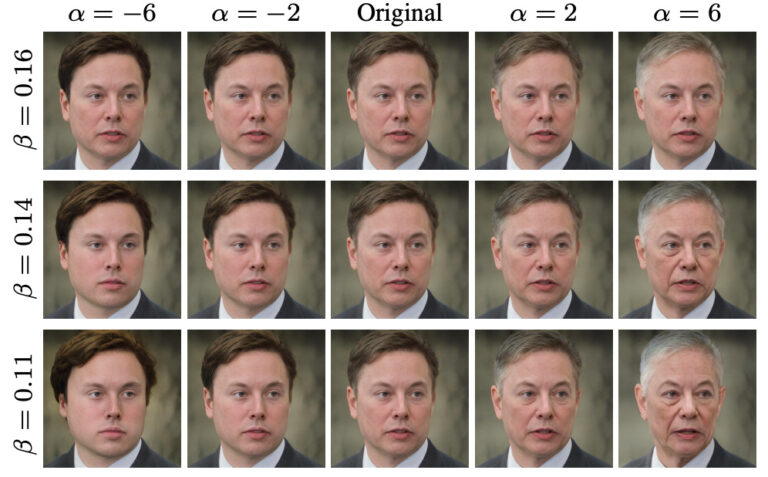

The text prompt used here is "surprise"

Different StyleGAN layers are responsible for different levels of detail in the generated image.

6. Global Directions

Mapping textual cues to a single, global direction in StyleGAN’s Style Space, which has been proven toMore discrete than other Latent Spaces.

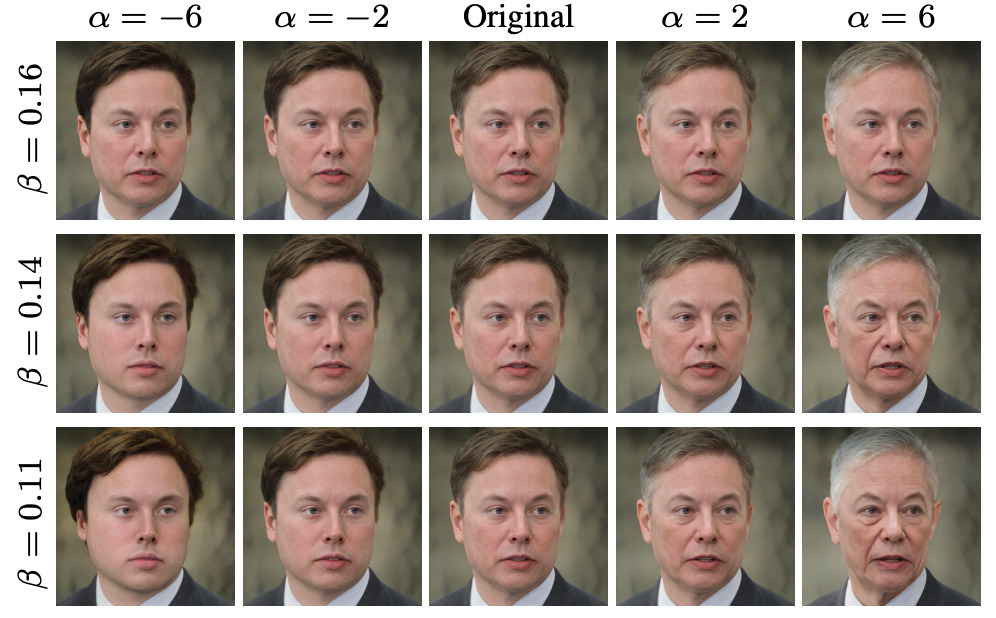

Suitable for different operating intensities and separation thresholds

The author of the paper: from Israeli universities, focusing on GAN

The first author of the paper, Or Patashnik, is a graduate student in CS at Tel Aviv University.Mainly engaged in image generation and processing related projects. She is very interested in machine learning, computer graphics and machine vision.Mainly engaged in projects involving image generation and processing, and has published several StyleGAN-related papers.

Zongze Wu, another author of the paper,is a PhD student at the Edmond & Lily Safra Center for Brain Sciences at the Hebrew University of Jerusalem.Currently, I am mainly working in the HUJI Machine Vision Laboratory, working on projects with Professors Dani Lischinski and Eli Shechtman from Adobe Research Institute.

Zongze Wu focuses on computer vision related topics.Such as generative adversarial networks, image processing, image translation, etc.

According to Zongze Wu's resume,From 2011 to 2016, he studied at Tongji University, majoring in Bioinformatics.After graduation, Zongze Wu entered the Hebrew University of Jerusalem to pursue a doctorate in computational neuroscience.

Detailed explanation of three methods combining StyleGAN and CLIP

According to the StyleCLIP related paper, researchers have developed three methods to combine StyleGAN and CLIP.These three methods are based on Latent Optimization, Latent Mapper and Global Direction respectively.

1. Based on Latent Optimization

This tutorial mainly introduces face editing based on iterative optimization. The user inputs a text expression and obtains a face edited image that matches the text.

Step 1: Prepare the code environment

import os

os.chdir(f'./StyleCLIP')

! pip install ftfy regex tqdm

! pip install git+https://github.com/openai/CLIP.gitStep 2 Parameter Setting

experiment_type = 'edit' # 可选: ['edit', 'free_generation']

description = 'A person with blue hair' # 编辑的描述,需要是字符串

latent_path = None # 优化的起点 (一般不需修改)

optimization_steps = 100 # 优化的步数

l2_lambda = 0.008 # 优化时候 L2 loss 的权重

create_video = True # 是否将中间过程存储为视频

args = {

"description": description,

"ckpt": "/openbayes/input/input0/stylegan2-ffhq-config-f.pt",

"stylegan_size": 1024,

"lr_rampup": 0.05,

"lr": 0.1,

"step": optimization_steps,

"mode": experiment_type,

"l2_lambda": l2_lambda,

"latent_path": latent_path,

"truncation": 0.7,

"save_intermediate_image_every": 1 if create_video else 20,

"results_dir": "results"

}Step 3: Run the model

from optimization.run_optimization import main

from argparse import Namespace

result = main(Namespace(**args))Step 4: Visualize the images before and after processing

# 可视化图片

from torchvision.utils import make_grid

from torchvision.transforms import ToPILImage

result_image = ToPILImage()(make_grid(result.detach().cpu(), normalize=True, scale_each=True, range=(-1, 1), padding=0))

h, w = result_image.size

result_image.resize((h // 2, w // 2))

Step 5: Save the optimization process as video output

#@title Create and Download Video

!ffmpeg -y -r 15 -i results/%05d.png -c:v libx264 -vf fps=25 -pix_fmt yuv420p /openbayes/home/out.mp4Full notebook access address

2. Based on Latent Mapper

The first step is to prepare the code environment

Step 2: Set parameters

Step 3: Run the model

Step 4: Visualize the images before and after processing

Click to visitComplete notebook

3. Based on Global Direction

This tutorial introduces how to map text information into the latent space of StyleGAN and then further modify the content of the image. Users can enter a text description and get a face edited image that matches the text very well and has good feature decoupling.

Step 1: Prepare the code environment

Step 2: Set up StyleCLIP

Step 3. Set up e4e

Step 4: Select the image and use dlib for face alignment

Step 5: Reverse the image to be edited into the latent space of StyleGAN

Step 6: Enter text description

Step 7: Select the manipulation strength (alpha) and decoupling threshold (beta) for image editing

Step 8: Generate a video to visualize the editing process

Click to visitComplete notebook

About OpenBayes

OpenBayes is a leading machine intelligence research institution in China.Provides a number of basic services related to AI development, including computing power containers, automatic modeling, and automatic parameter adjustment.

At the same time, OpenBayes has also launched many mainstream public resources such as data sets, tutorials, and models.For developers to quickly learn and create ideal machine learning models.

Visit Now openbayes.com and register,Get 600 minutes/week of vGPU usage And 300 minutes/week of free CPU computing time

Take action now and use StyleCLIP to design the face you want!

Click to visitFull Tutorial