Master the Implementation Details of MobileNetV3 in TorchVision in One Article

TorchVision v0.9 adds a series of mobile-friendly models. They can be used for tasks such as classification, object detection, and semantic segmentation.

This article explores the code of these models in depth, shares noteworthy implementation details, explains how the models were configured and trained, and interprets the important trade-offs the developers made during model optimization.

The goal of this article is to present technical details of the model that are not documented in the original papers and repositories.

Network Architecture

The implementation of the MobileNetV3 architecture closely follows the settings in the original paper. It supports user customization and provides different configurations for building classification, object detection, and semantic segmentation backbones. Its structural design is similar to MobileNetV2, and the two share the same building blocks.

The models are ready to use out of the box. There are two official variants, Large and Small. Both are built with the same code; the only difference is the configuration (number of blocks, their sizes, activation functions, etc.).

Configuration parameters

Although users can customize the InvertedResidual settings and pass them directly to the MobileNetV3 class, for most applications developers can adjust the existing configurations by passing parameters to the model building methods. Some key configuration parameters are as follows:

* width_mult is a multiplier that determines the number of channels in the model. The default value is 1. Changing it scales the number of convolution filters in every layer, including the first and last. The implementation ensures that the number of filters is always a multiple of 8, a hardware optimization that speeds up vectorized operations.

* reduced_tail is mainly a speed optimization: it halves the number of channels in the last blocks of the network. This version is often used in object detection and semantic segmentation models. According to the MobileNetV3 paper, using reduced_tail reduces latency by 15% without a significant effect on accuracy.

* dilated mainly affects the last three InvertedResidual blocks of the model. The depthwise convolutions of these blocks are converted into atrous (dilated) convolutions, which controls the output stride of the blocks and improves the accuracy of semantic segmentation models.
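The rounding behind width_mult can be sketched in a few lines. This is a minimal illustration rather than TorchVision's own code: the helper names `make_divisible` and `scale_channels` are hypothetical, and the 10% guard is an assumption modeled on the rounding helper commonly found in MobileNet implementations.

```python
def make_divisible(channels: float, divisor: int = 8) -> int:
    """Round a scaled channel count to the nearest multiple of `divisor`."""
    rounded = max(divisor, int(channels + divisor / 2) // divisor * divisor)
    # Assumed guard: never let rounding shrink the layer by more than 10%.
    if rounded < 0.9 * channels:
        rounded += divisor
    return rounded


def scale_channels(base_channels: int, width_mult: float = 1.0) -> int:
    """Apply the width multiplier, then snap to a multiple of 8."""
    return make_divisible(base_channels * width_mult)


print(scale_channels(16, 0.75))   # 16
print(scale_channels(40, 0.75))   # 32
print(scale_channels(960, 0.5))   # 480
```

Note that small layers may not shrink at all under a modest multiplier (16 stays 16 at 0.75), because the result must remain a multiple of 8.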

Implementation details

The MobileNetV3 class is responsible for building a network from the provided configuration. The implementation details are as follows:

* The last convolutional module expands the output of the last InvertedResidual module by a factor of 6. This implementation can be adapted to different multiplier parameters.

* Similar to the MobileNetV2 model, there is a Dropout layer before the last Linear layer of the classifier.

The InvertedResidual class is the main building block of the network. The implementation details that need to be noted are as follows:

* If the number of input channels equals the number of expanded channels, the expansion step is skipped. This happens in the first convolutional block of the network.

* The projection step is always applied, even when the number of expanded channels equals the number of output channels.

* The activation of the depthwise block is applied before the Squeeze-and-Excite layer, which improves accuracy slightly.
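The three rules above can be summarized as an ordering of steps inside one block. The sketch below only lists step names; it is not the real InvertedResidual class, and the function name `inverted_residual_steps` and its arguments are hypothetical.

```python
from typing import List


def inverted_residual_steps(in_ch: int, expanded_ch: int, use_se: bool) -> List[str]:
    """Return the order of operations in one block, following the rules above."""
    steps = []
    # Expansion is skipped when it would not change the channel count.
    if expanded_ch != in_ch:
        steps.append("expand")
    # Depthwise convolution, including its activation.
    steps.append("depthwise")
    # Squeeze-and-Excite comes after the depthwise activation.
    if use_se:
        steps.append("squeeze_excite")
    # Projection is always present.
    steps.append("project")
    return steps


print(inverted_residual_steps(16, 16, use_se=False))
print(inverted_residual_steps(16, 64, use_se=True))
```

The first call corresponds to the case described for the first block of the network, where no expansion is needed.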

Classification

The benchmarks and configuration, training, and quantization details of the pre-trained models are explained here.

Benchmarks

Initialize the pre-trained model:

large = torchvision.models.mobilenet_v3_large(pretrained=True, width_mult=1.0, reduced_tail=False, dilated=False)

small = torchvision.models.mobilenet_v3_small(pretrained=True)

quantized = torchvision.models.quantization.mobilenet_v3_large(pretrained=True)

As shown in the figure, users who are willing to sacrifice a little accuracy in exchange for a speed-up of about 6x can use MobileNetV3-Large as a substitute for ResNet50.

Note that the inference time here is measured on the CPU.

Training process

All pre-trained models are configured as non-dilated models with a width multiplier of 1 and full tails, and were trained on ImageNet. Both the Large and Small variants were trained with the same hyperparameters and scripts.

Fast and stable model training

Correctly configuring RMSProp is crucial to speeding up the training process and ensuring numerical stability. The paper's authors used TensorFlow in their experiments and ran with a significantly higher rmsprop_epsilon than the default.

Normally this hyperparameter only guards against a zero denominator, so its value is small. For this particular model, however, choosing the right value is important to avoid numerical instability in the loss.

Another important detail is that, while PyTorch and TensorFlow’s RMSProp implementations generally behave similarly, in the setting here it is important to note the differences in how the two frameworks handle the epsilon hyperparameter.

Specifically, PyTorch adds epsilon outside the square root, while TensorFlow adds it inside. This means that users porting the paper's hyperparameters need to adjust the epsilon value; a reasonable approximation is PyTorch_eps = sqrt(TF_eps).
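To see why the square-root conversion is a reasonable approximation, the two denominators can be compared directly. The sketch below is a simplified model of the update denominator only, not either framework's optimizer; the function names are hypothetical and the value of `tf_eps` is purely illustrative, not the one used in the paper.

```python
import math


def tf_to_pytorch_eps(tf_eps: float) -> float:
    """Convert a TensorFlow-style epsilon to its PyTorch-style approximation."""
    return math.sqrt(tf_eps)


def tf_denominator(mean_square: float, eps: float) -> float:
    # TensorFlow style: epsilon is added inside the square root.
    return math.sqrt(mean_square + eps)


def pytorch_denominator(mean_square: float, eps: float) -> float:
    # PyTorch style: epsilon is added outside the square root.
    return math.sqrt(mean_square) + eps


tf_eps = 1e-3  # illustrative value only
pt_eps = tf_to_pytorch_eps(tf_eps)
for mean_square in (0.0, 1e-4, 1.0):
    print(mean_square,
          tf_denominator(mean_square, tf_eps),
          pytorch_denominator(mean_square, pt_eps))
```

The two denominators coincide when the running mean of squared gradients is zero, which is exactly where epsilon matters most. For larger values they drift apart slightly, which is why the conversion is an approximation rather than an exact equivalence.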

Improve model accuracy by adjusting hyperparameters and improving the training process

After configuring the optimizer to achieve fast and stable training, you can start to optimize the accuracy of the model. There are several techniques that can help users achieve this goal.

First, to avoid overfitting, the data can be augmented with AutoAugment and RandomErasing. In addition, tuning parameters such as weight decay with cross-validation and averaging the weights of different epoch checkpoints after training are both beneficial. Finally, methods such as Label Smoothing, Stochastic Depth, and LR noise injection can also improve the overall accuracy by at least 1.5%.

Note that once an acceptable accuracy was reached, model performance was verified on the validation set. This process helps detect overfitting.
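Checkpoint weight averaging is simple enough to sketch without any framework. The example below is an assumption-laden illustration: checkpoints are modeled as plain dictionaries of float lists instead of real tensors, and the function name `average_checkpoints` is hypothetical. It does not reproduce the training scripts used for the published weights.

```python
from typing import Dict, List

Checkpoint = Dict[str, List[float]]  # parameter name -> flattened values


def average_checkpoints(checkpoints: List[Checkpoint]) -> Checkpoint:
    """Element-wise mean of the same parameters across several checkpoints."""
    if not checkpoints:
        raise ValueError("at least one checkpoint is required")
    averaged: Checkpoint = {}
    for name in checkpoints[0]:
        # Group the i-th value of this parameter from every checkpoint.
        columns = zip(*(ckpt[name] for ckpt in checkpoints))
        averaged[name] = [sum(values) / len(checkpoints) for values in columns]
    return averaged


epoch_a = {"conv.weight": [1.0, 2.0], "fc.bias": [0.0]}
epoch_b = {"conv.weight": [3.0, 4.0], "fc.bias": [1.0]}
print(average_checkpoints([epoch_a, epoch_b]))
# {'conv.weight': [2.0, 3.0], 'fc.bias': [0.5]}
```

With real models the same idea applies to each tensor in the saved state, typically restricted to checkpoints from the final epochs of training.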

Quantization

Quantized weights are provided for the QNNPACK backend of the MobileNetV3-Large variant, making it 2.5 times faster. The model was quantized with Quantization Aware Training (QAT).

QAT models the impact of quantization during training and adjusts the weights accordingly to improve model accuracy. Compared with simple post-training quantization, accuracy improves by 1.8%:

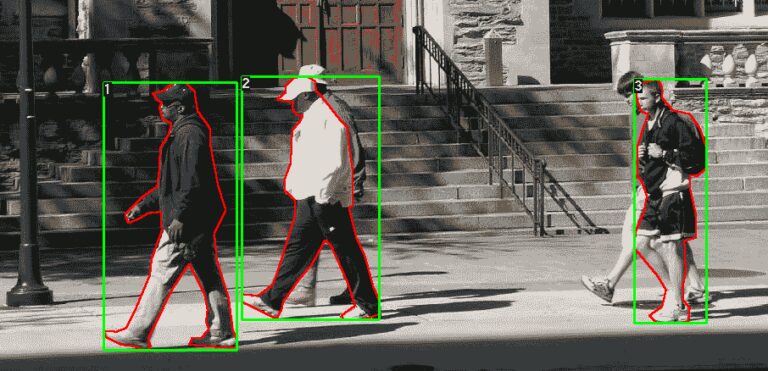
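The core idea of QAT, simulating quantization error while the weights are still being trained, can be illustrated with a quantize-then-dequantize round trip. This is a conceptual sketch only: the function name `fake_quantize`, the 8-bit range, and the scale values are assumptions, and it is not how the QNNPACK backend or PyTorch's quantization modules are implemented.

```python
def fake_quantize(x: float, scale: float, zero_point: int = 0,
                  qmin: int = 0, qmax: int = 255) -> float:
    """Quantize to an integer grid and map back to float, keeping the error."""
    q = round(x / scale) + zero_point     # snap to the nearest integer level
    q = max(qmin, min(qmax, q))           # clamp to the representable range
    return (q - zero_point) * scale       # dequantize


for value in (0.26, 0.5, 100.0, -1.0):
    print(value, fake_quantize(value, scale=0.1))
```

During training, the forward pass sees the rounded and clamped values, so the optimizer can move the weights toward points that lose less accuracy once the model is actually quantized.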

Object Detection

This section first provides benchmarks of the published models and then discusses how the MobileNetV3-Large backbone is used with the FasterRCNN detector and a Feature Pyramid Network for object detection.

It also explains how the network was trained and tuned, and where trade-offs had to be made. (This section does not cover the details of how to use it with SSDlite.)

Benchmarks

Initialize the model:

high_res = torchvision.models.detection.fasterrcnn_mobilenet_v3_large_fpn(pretrained=True)

low_res = torchvision.models.detection.fasterrcnn_mobilenet_v3_large_320_fpn(pretrained=True)

As you can see, for users who are willing to sacrifice a little accuracy for a speed-up of about 5x, the high-resolution Faster R-CNN with the MobileNetV3-Large FPN backbone can replace the equivalent ResNet50 model.

Implementation details

The detector uses an FPN-style backbone that extracts features from different convolutions of the MobileNetV3 model. By default, the pre-trained model uses the output of the 13th InvertedResidual block and the output of the convolution before the pooling layer. The implementation also supports using the output of more stages.

All feature maps extracted from the network are projected to 256 channels by the FPN block, which greatly increases network speed. The feature maps provided by the FPN backbone are then used by the FasterRCNN detector to produce box and class predictions at different scales.

Training and tuning process

Currently, TorchVision provides two pre-trained models that perform object detection at different resolutions. Both models were trained on the COCO dataset with the same hyperparameters and scripts.

The high-resolution detector is trained with images of 800-1333px, while the mobile-friendly low-resolution detector is trained with images of 320-640px.

The reason for providing two separate sets of pre-trained weights is that training the detector directly on smaller images works better than passing small images to a pre-trained high-resolution model: it yields an accuracy gain of 5 mAP.

Both backbones are initialized with weights pre-trained on ImageNet, and their last three stages are fine-tuned during training.

Additional speed optimizations can be made for the mobile-friendly model by adjusting the RPN NMS thresholds. By sacrificing 0.2 mAP of accuracy, the CPU speed of the model can be increased by about 45%.
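To illustrate why an NMS threshold trades accuracy for speed, here is a minimal greedy non-maximum suppression in plain Python. It is a generic sketch of the technique, not the RPN code in TorchVision; the function names, boxes, and scores are made up for the example.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_thresh: float) -> List[int]:
    """Keep the highest-scoring boxes, dropping any that overlap a kept box too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores, iou_thresh=0.7))  # [0, 1, 2]
print(nms(boxes, scores, iou_thresh=0.5))  # [0, 2]
```

A lower threshold discards more overlapping proposals, so later stages of the detector have fewer boxes to process. The price is that some useful proposals may be discarded as well, which is consistent with the small mAP loss mentioned above.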

Semantic Segmentation

This section first provides some published pre-trained model benchmarks, and then discusses how the MobileNetV3-Large backbone is combined with segmentation heads such as LR-ASPP, DeepLabV3, and FCN for semantic segmentation.

The network training process will also be explained and some alternative optimization techniques will be proposed for speed-critical applications.

Benchmarks

Initialize the pre-trained model:

lraspp = torchvision.models.segmentation.lraspp_mobilenet_v3_large(pretrained=True)

deeplabv3 = torchvision.models.segmentation.deeplabv3_mobilenet_v3_large(pretrained=True)

As can be seen in the figure, DeepLabV3 with the MobileNetV3-Large backbone is a viable alternative to FCN with ResNet50 in most applications: it runs 8.5 times faster while maintaining similar accuracy. In addition, the LR-ASPP network outperforms the equivalent FCN on every metric.

Implementation details

This section discusses important implementation details of the tested segmentation heads. Note that all models described in this section use the dilated MobileNetV3-Large backbone.

LR-ASPP

LR-ASPP is the Lite variant of the Reduced Atrous Spatial Pyramid Pooling model proposed by the authors of the MobileNetV3 paper. Unlike other segmentation models in TorchVision, it does not use an auxiliary loss. Instead, it uses low-level and high-level features with output strides of 8 and 16 respectively.

Unlike the paper, which uses a 49×49 AveragePooling layer with variable strides, the implementation uses an AdaptiveAvgPool2d layer to process the global features.

This gives users a general implementation that works across multiple datasets. Finally, before the output is returned, a bilinear interpolation is always performed to ensure that the dimensions of the input and output images match exactly.

DeepLabV3 & FCN

The combination of MobileNetV3 with DeepLabV3 and FCN closely follows that of the other models, and the stage estimation for these methods is the same as for LR-ASPP.

The only notable difference is that high-level and low-level features are not used here. Instead, the normal loss is attached to the feature map with an output stride of 16, and an auxiliary loss is attached to the feature map with an output stride of 8.

The FCN version is inferior to LR-ASPP in both speed and accuracy, so it was not released. Its pre-trained weights are still available and can be used with only minor code modifications.

Training and tuning process

Two MobileNetV3 pre-trained models are available for semantic segmentation: LR-ASPP and DeepLabV3. The backbones of these models were initialized with ImageNet weights and trained end-to-end.

Both architectures were trained on the COCO dataset using the same script and similar hyperparameters.

Typically, images are resized to 520 pixels during inference. An optional speed optimization is to build a low-resolution model configuration that reuses the high-resolution pre-trained weights and reduces the inference size to 320 pixels. This cuts CPU execution time by about 60%, at the cost of a few mIoU points.
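A back-of-the-envelope calculation shows why the 320-pixel setting gives a saving of this magnitude. The sketch assumes that the cost of a convolutional network scales roughly with the number of input pixels; this is a simplification, and measured speed-ups depend on the hardware and the model. The function name `pixel_ratio` is hypothetical.

```python
def pixel_ratio(low_res: int, high_res: int) -> float:
    """Fraction of pixels processed at the lower resolution (square inputs assumed)."""
    return (low_res / high_res) ** 2


ratio = pixel_ratio(320, 520)
print(f"pixels processed: {ratio:.3f} of the original")   # 0.379
print(f"estimated saving: {1 - ratio:.1%}")               # 62.1%
```

Under this assumption the smaller input handles a little over a third of the pixels, which lines up with the figure of roughly 60% quoted above.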

These are the implementation details of MobileNetV3 covered in this article. Hopefully they give you a deeper understanding of the model.