After Training on 80,000 Paintings with Human Annotations, an Algorithm Learns to Appreciate Famous Paintings

Artworks often carry their creators' inner emotions, and people who appreciate a piece of music or a painting often feel an emotional resonance with them. Can computers understand the emotions in paintings? A research team at Stanford University is developing an algorithm to do just that.

Leo Tolstoy said: "Art is a human activity in which a person consciously conveys his or her feelings to others through some external symbols, and others are infected by these feelings and truly experience them."

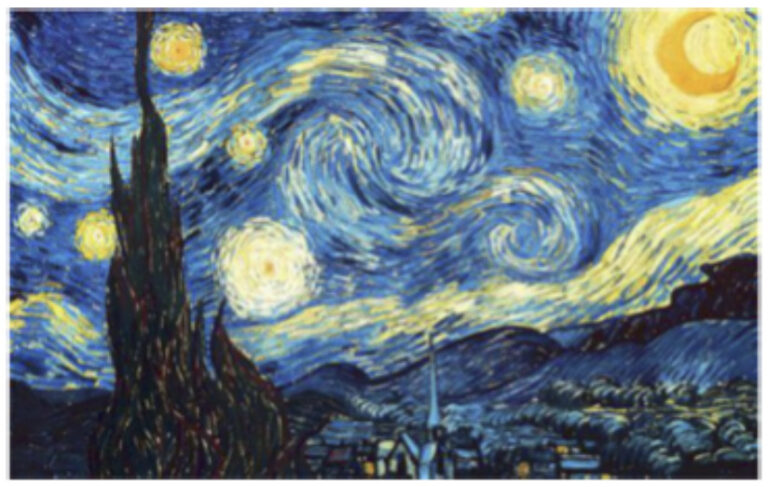

Take paintings as an example. Behind every work lies some emotion of the painter's: famous painters such as Van Gogh and Picasso expressed their distinctive moods and emotions at different points in their lives through different colors and compositions.

Can computers understand the emotions contained in these works? A computer science team at Stanford University has collected a new dataset, ArtEmis, which pairs a large number of paintings with human annotations of the emotions they evoke, and has used it to train computer models that produce emotional responses to visual art.

Understanding paintings starts with an emotion-annotation dataset

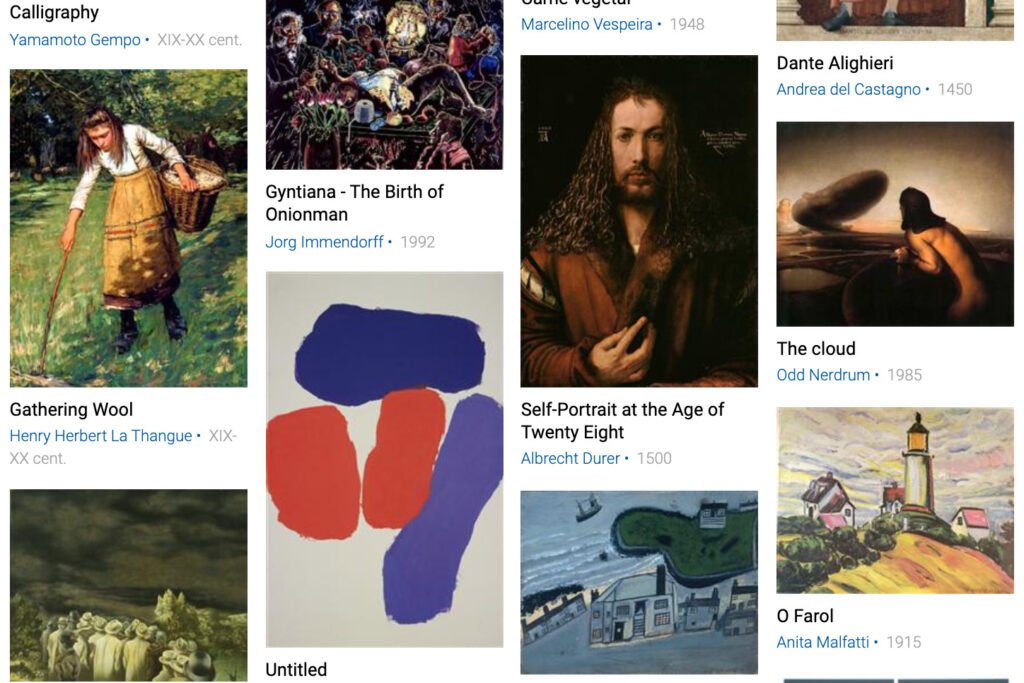

WikiArt: Online Museum of Famous Paintings

WikiArt, a non-profit volunteer project, has been collecting visual artworks from around the world since its launch in 2010 and can be regarded as a large online museum of famous paintings.

According to the website, as of January 2020 it contained 169,057 paintings by 3,293 artists, spanning 61 genres.

Because WikiArt hosts a huge number of clearly categorized paintings, many AI researchers use it as a dataset for training algorithms.

In 2017, researchers from Rutgers University and Facebook AI Research built the Creative Adversarial Network (CAN), a variant of the GAN (Generative Adversarial Network), and trained it on WikiArt data, enabling the network to distinguish between different art styles.

ArtEmis: A new dataset born from WikiArt

Building on the works hosted on WikiArt, the Stanford team created ArtEmis, a new annotation dataset for visual art.

They annotated 81,446 works by 1,119 artists on WikiArt. The works range from 15th-century artworks to 21st-century contemporary paintings and cover 27 art styles (Abstract, Baroque, Cubism, Impressionism, etc.) and 45 genres (cityscapes, landscapes, portraits, still lifes, etc.), giving the dataset great visual diversity.

Each work was annotated by at least 5 annotators, each of whom recorded the dominant emotion the painting evoked and explained the reason for it.

Specifically, after observing an artwork, the annotator first chooses one of eight basic emotional states (anger, disgust, fear, sadness, amusement, awe, contentment, and excitement) as the main emotion he or she feels. If none of the eight applies, the annotator can choose "something else" instead.

After choosing an emotion, the annotator writes a short explanation of why he or she feels that way, or why the work evokes no strong emotional reaction.
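Because every painting gets several independent labels, the annotations for one work can be summarized as an empirical distribution over the nine choices. The sketch below shows that aggregation under stated assumptions: the label strings follow the ArtEmis paper's wording, while the function name `emotion_distribution` and the sample labels are hypothetical and not part of any released ArtEmis code.

```python
from collections import Counter

# The nine choices: four positive emotions, four negative ones, plus "something else".
# (These exact label strings are an assumption modeled on the paper's wording.)
EMOTIONS = [
    "amusement", "awe", "contentment", "excitement",
    "anger", "disgust", "fear", "sadness",
    "something else",
]


def emotion_distribution(labels: list[str]) -> list[float]:
    """Turn one painting's annotator labels into a probability vector over EMOTIONS."""
    if not labels:
        raise ValueError("a painting needs at least one annotation")
    unknown = set(labels) - set(EMOTIONS)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    counts = Counter(labels)
    # Fraction of annotators who chose each label, in EMOTIONS order.
    return [counts[e] / len(labels) for e in EMOTIONS]


# Hypothetical labels from five annotators looking at the same painting.
labels = ["awe", "awe", "contentment", "sadness", "awe"]
dist = emotion_distribution(labels)
print(dict(zip(EMOTIONS, dist)))
```

Here three of the five hypothetical annotators chose awe, so awe receives 0.6 of the probability mass. Keeping the full distribution rather than a single majority vote preserves disagreement between annotators.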

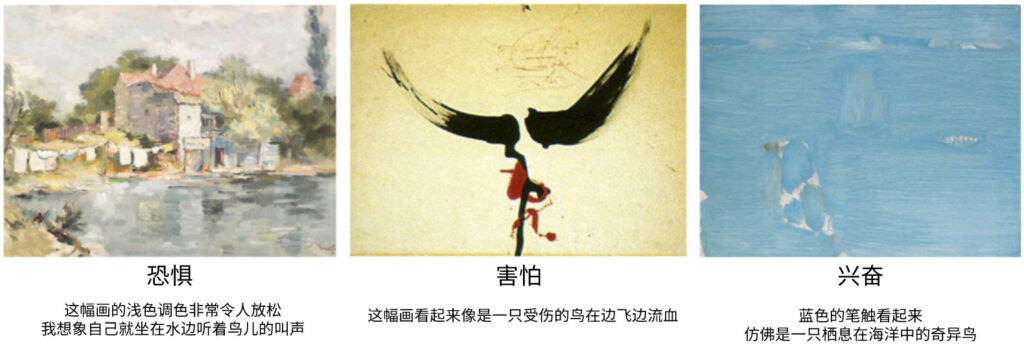

The image above shows emotion labels that human annotators gave to paintings, along with their explanations.

The annotation work was ultimately completed by 6,377 annotators on Amazon Mechanical Turk, Amazon's crowdsourcing platform, taking a total of 10,220 hours.

The team said that, compared with existing datasets of this kind, ArtEmis annotations use richer, more emotional, and more diverse language; the corpus formed by these annotations contains 36,347 distinct words.
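A distinct-word count like this one depends on how the text is tokenized. The minimal sketch below counts distinct lower-cased words with a simple regular expression; the tokenizer, the `vocabulary` helper, and the example sentences are assumptions for illustration, and the paper's own preprocessing may count words differently.

```python
import re


def vocabulary(utterances):
    """Collect the distinct lower-cased word tokens used across all explanations."""
    vocab = set()
    for text in utterances:
        # Simple regex tokenizer (an assumption): runs of letters and apostrophes.
        vocab.update(re.findall(r"[a-z']+", text.lower()))
    return vocab


# Hypothetical annotator explanations.
explanations = [
    "The dark sky makes me feel sad and alone.",
    "The bright colors feel happy and alive.",
]
print(len(vocabulary(explanations)))
```

Words shared by both sentences ("the", "feel", "and") are counted once, which is what makes the figure a vocabulary size rather than a total word count.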

ArtEmis (Emotion Annotation Dataset for Visual Art) details:

Publishers: Stanford University, École Polytechnique, and King Abdullah University of Science and Technology

Size: 439,121 annotations (emotion labels with explanations) on 81,446 paintings

Data format: CSV

Data size: 21.8 MB

Download: https://orion.hyper.ai/datasets/14861
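A CSV release like this can be read with Python's standard `csv` module. The sketch below groups annotations by painting; the column names `painting`, `emotion`, and `utterance`, the helper `group_by_painting`, and the sample rows are assumptions, so check the header of the downloaded file before relying on them.

```python
import csv
import io
from collections import defaultdict


def group_by_painting(csv_file):
    """Map each painting to its list of (emotion, utterance) annotations.

    Assumes columns named "painting", "emotion" and "utterance"; these names
    are an assumption and should be checked against the real file's header.
    """
    grouped = defaultdict(list)
    for row in csv.DictReader(csv_file):
        grouped[row["painting"]].append((row["emotion"], row["utterance"]))
    return dict(grouped)


# A tiny in-memory stand-in for the real file (hypothetical rows).
sample = io.StringIO(
    "art_style,painting,emotion,utterance\n"
    "Impressionism,painting_a,contentment,The soft light feels calm\n"
    "Impressionism,painting_a,awe,The colors are stunning\n"
    "Baroque,painting_b,fear,The shadows look menacing\n"
)
annotations = group_by_painting(sample)
print({name: len(rows) for name, rows in annotations.items()})
```

For the real file, pass an open file handle instead, e.g. `open(path, newline="", encoding="utf-8")`, where `path` points to wherever the download was saved.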

How to create an algorithm that can perceive emotions

To let computers respond emotionally to visual art as humans do, and to justify those responses in language, the team trained "neural speaker" models, which generate written emotional explanations for a given painting, on this large-scale dataset.

Leonidas Guibas, a Stanford professor affiliated with the Stanford Institute for Human-Centered Artificial Intelligence (HAI), said this is a new direction for computer vision. Classic computer vision methods identify what is in an image, for example "there are three dogs" or "someone is drinking coffee", whereas this work characterizes the emotions that visual art evokes.

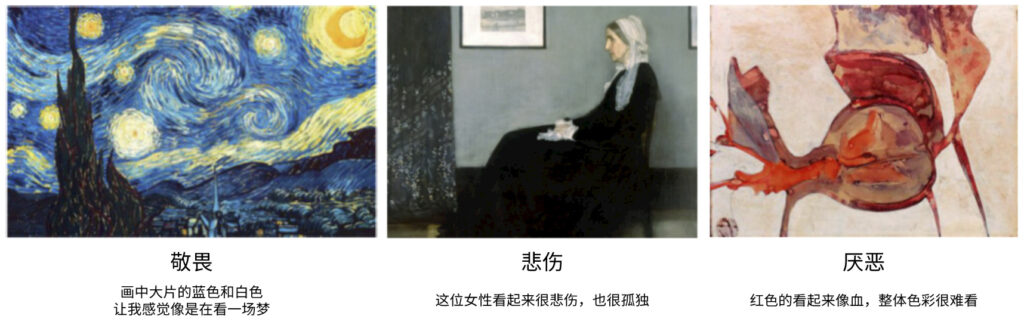

After training on ArtEmis, the algorithm can recognize the emotions different paintings evoke and automatically generate an explanation for each judgment. Example results are shown in the image above.

The paper describes the training approach in detail. First, ArtEmis is used to train a model that predicts, from an annotator's written explanation alone, which emotion the explanation expresses. This is a classic 9-way text classification problem (the eight emotions plus "something else"); the team trained an LSTM text classifier from scratch with cross-entropy-based optimization, and also fine-tuned a pretrained BERT model for the task.
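The objective behind this 9-way classifier can be shown without the text encoder itself. In the sketch below, the LSTM or BERT model is left out and replaced by hand-written logits (hypothetical scores for the nine classes); the code only computes the softmax cross-entropy loss that training would minimize.

```python
import math

NUM_CLASSES = 9  # eight emotions plus "something else"


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Loss for one example: minus the log-probability given to the true class."""
    if len(logits) != NUM_CLASSES:
        raise ValueError(f"expected {NUM_CLASSES} logits")
    return -math.log(softmax(logits)[target])


# An untrained classifier that scores every class equally.
print(cross_entropy([0.0] * NUM_CLASSES, target=1))

# A classifier that strongly favors the correct class (index 1).
confident = [0.0] * NUM_CLASSES
confident[1] = 5.0
print(cross_entropy(confident, target=1))
```

A classifier that spreads its probability evenly pays log 9, about 2.197, per example, while one that puts most of its mass on the correct class pays close to zero; minimizing this loss over the annotated explanations pushes the classifier toward the second behavior.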

Second, the computer is trained to predict, directly from the image, the emotional reactions humans typically have to an artwork.

For this, the team fine-tuned a ResNet-32 image encoder pretrained on ImageNet, minimizing the KL divergence between its output and the distribution of emotions in each painting's ArtEmis annotations.
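The KL-divergence objective compares two distributions over the nine labels: the empirical one from a painting's annotators and the one predicted by the image encoder. The sketch below computes KL(p || q) for plain lists; the ResNet encoder is omitted, and both distributions are hypothetical numbers chosen for illustration.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as equal-length lists.

    p is the empirical annotator distribution, q the model's prediction.
    Terms where p is zero contribute nothing; eps guards against log(0) in q.
    """
    if len(p) != len(q):
        raise ValueError("distributions must have the same length")
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical empirical distribution over 9 labels (e.g. 3 of 5 annotators chose label 1).
target = [0.0, 0.6, 0.2, 0.0, 0.0, 0.0, 0.0, 0.2, 0.0]
uniform = [1 / 9] * 9
close = [0.01, 0.55, 0.2, 0.01, 0.01, 0.01, 0.01, 0.19, 0.01]

print(kl_divergence(target, uniform))  # large: the uniform guess ignores the annotators
print(kl_divergence(target, close))    # small: the prediction tracks the annotators
```

Since the entropy of the target distribution does not depend on the model, minimizing this KL divergence is equivalent to minimizing cross-entropy against the soft annotator distribution, which lets the model learn from annotator disagreement instead of a single majority label.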

For a given painting, the classifier first determines whether the emotion it conveys is positive or negative, and then further determines which specific emotion it is.
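The article does not say how this two-step decision is implemented. One simple way to realize it, sketched below as an illustration rather than the paper's method, is to compare the total probability assigned to positive and negative emotions, then take the most likely emotion within the winning group. The grouping follows the paper's four positive and four negative labels; the function name `two_stage_prediction` and the probabilities are hypothetical.

```python
POSITIVE = ["amusement", "awe", "contentment", "excitement"]
NEGATIVE = ["anger", "disgust", "fear", "sadness"]


def two_stage_prediction(probs):
    """Pick a valence first, then the most likely emotion within that valence.

    probs maps emotion names to predicted probabilities (hypothetical model output);
    labels missing from the dict are treated as probability 0.
    """
    pos_mass = sum(probs.get(e, 0.0) for e in POSITIVE)
    neg_mass = sum(probs.get(e, 0.0) for e in NEGATIVE)
    # Stage 1: overall valence, decided by total probability mass.
    valence, group = ("positive", POSITIVE) if pos_mass >= neg_mass else ("negative", NEGATIVE)
    # Stage 2: the single most likely emotion inside the chosen group.
    emotion = max(group, key=lambda e: probs.get(e, 0.0))
    return valence, emotion


probs = {
    "awe": 0.25, "contentment": 0.20, "amusement": 0.10,
    "sadness": 0.30, "fear": 0.05, "something else": 0.10,
}
print(two_stage_prediction(probs))
```

In this example a flat argmax would pick sadness (0.30), but the positive emotions together hold more mass (0.55 versus 0.35), so the two-stage rule returns awe. Which behavior is preferable depends on the application.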

According to the team, the algorithm can not only perceive a painting's overall emotional tone but also distinguish the emotions of different figures within it. In Rembrandt's "The Beheading of Saint John the Baptist", for example, the algorithm captured not only the pain of the beheaded John but also the "contentment" of Salome, the woman to whom his head is presented.

When algorithms have empathy

Human emotions are rich, complex, and subtle; even people cannot always fully grasp what an artist intends to express. Getting AI to accurately understand an artist's intentions is therefore bound to be challenging.

Even so, the release of the ArtEmis dataset is a first step toward AI that can process the emotional attributes of images.

The team said that, with further research and improvement, the algorithm may be able to perceive human joys and sorrows, and artists could use it to check whether their works achieve the intended emotional effect. In addition, once algorithms can understand human nature, human-computer interaction could become more natural and harmonious.

News Source:

https://techxplore.com/news/2021-03-artist-intent-ai-emotions-visual.html

Dataset paper: https://arxiv.org/pdf/2101.07396.pdf

Project homepage: https://www.artemisdataset.org/#videos