
Super SloMo: Using Neural Networks to Create Super Slow Motion

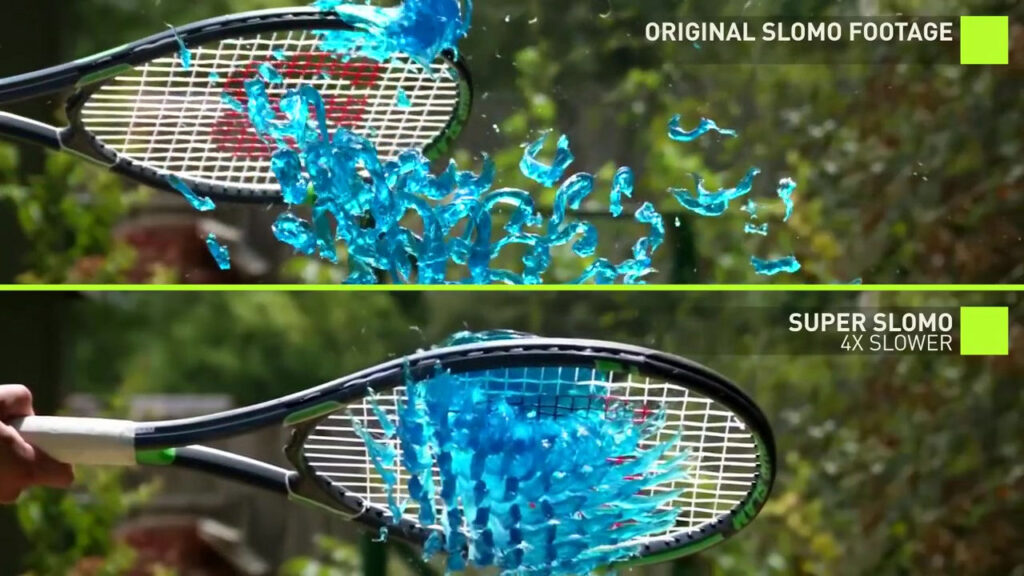

Video frame interpolation emerged to fix video that stutters or does not play smoothly. The Super SloMo method proposed by NVIDIA outperforms many other methods: from a video recorded on ordinary equipment, it can "imagine" a high-frame-rate slow-motion video. With this tool, the barrier to video production is lowered even further.

Viewers increasingly want higher video frame rates, because high-frame-rate video is smoother and greatly improves the viewing experience.

The frame rates of cameras have also kept rising, from 25 FPS (frames per second) to 60 FPS, then to 240 FPS and beyond.

However, high-frame-rate recording equipment needs a lot of storage and is expensive, so it is not yet widespread. Video interpolation technology emerged as a way to obtain high-frame-rate video without professional equipment.

NVIDIA's Super SloMo, an AI method that "imagines" the missing frames, is well ahead of many video interpolation techniques: even a 30 FPS video can be interpolated to 60 FPS, 240 FPS or higher.

Advantages and disadvantages of traditional frame interpolation methods

To better understand Super SloMo, let us first look at the existing, more traditional video interpolation techniques.

Frame sampling

Frame sampling uses the key frames themselves as the compensation frames, which simply lengthens the display time of each key frame; it is equivalent to no interpolation at all. Apart from a higher frame rate and a larger file at the same video quality, it brings no visual improvement.
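A minimal NumPy sketch of frame sampling, assuming the video is held as an array of shape (num_frames, height, width, channels); the function name `repeat_frames` is hypothetical. Each key frame is shown `factor` times in a row, so no new image content is created.

```python
import numpy as np


def repeat_frames(frames: np.ndarray, factor: int) -> np.ndarray:
    """Raise the frame rate by `factor` by duplicating every key frame.

    frames: array of shape (num_frames, height, width, channels).
    Returns an array with num_frames * factor frames.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    # np.repeat along the time axis shows each key frame `factor` times in a row
    return np.repeat(frames, factor, axis=0)


# Usage: a 3-frame, 2x2 single-channel clip doubled to 6 frames
clip = np.arange(3).reshape(3, 1, 1, 1) * np.ones((3, 2, 2, 1))
doubled = repeat_frames(clip, 2)
print(doubled.shape)             # (6, 2, 2, 1)
print(doubled[:, 0, 0, 0].tolist())  # [0.0, 0.0, 1.0, 1.0, 2.0, 2.0]
```

The frame count doubles, but consecutive frames are identical, which is why motion looks no smoother.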

Advantage: Frame sampling uses few resources and is fast.

Disadvantage: The video may not look very smooth.

Frame Blending

Frame blending, as the name suggests, makes the previous and next key frames partially transparent and then blends them into a new frame that fills the gap.
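A minimal sketch of frame blending as a per-pixel weighted average, assuming frames are NumPy arrays; `blend_frames` and the parameter `t` (the new frame's position between the two key frames, from 0 to 1) are illustrative names, not part of any library.

```python
import numpy as np


def blend_frames(prev_frame: np.ndarray, next_frame: np.ndarray, t: float) -> np.ndarray:
    """Cross-fade two key frames; t in [0, 1] is the position of the new frame."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    # Weighted average: each key frame contributes in proportion to its closeness to t
    return (1.0 - t) * prev_frame.astype(np.float64) + t * next_frame.astype(np.float64)


# Usage: a bright pixel moves one step to the right between the key frames
prev_frame = np.array([[255, 0, 0]], dtype=np.uint8)
next_frame = np.array([[0, 255, 0]], dtype=np.uint8)
print(blend_frames(prev_frame, next_frame, 0.5).tolist())  # [[127.5, 127.5, 0.0]]
```

The moving pixel shows up twice at half intensity instead of once at an in-between position, which is the ghosting and blur that frame blending is known for.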

Advantage: The computation is fast.

Disadvantage: The results are poor. Because the original key frames are simply made semi-transparent, a moving object's outline appears at both its previous and its next position, producing obvious blur, so the video looks only slightly smoother.

Motion Compensation

Motion estimation and motion compensation (MEMC) compares two frames to find the blocks that moved horizontally and vertically, analyzes the motion trend of those blocks, and then computes the intermediate frames.
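The motion-estimation half of MEMC is often done by block matching. The sketch below is a minimal exhaustive-search version using the sum of absolute differences (SAD); the function name, block size and search range are illustrative assumptions, and real MEMC chips use far more elaborate searches. A compensation step would then shift each block by a fraction of its vector (half of it for the frame at t = 0.5).

```python
import numpy as np


def block_matching(prev, nxt, block=4, search=4):
    """Exhaustive block matching between two grayscale frames.

    For each block x block tile of `prev`, find the displacement (dy, dx) within
    +/- `search` pixels that minimises the SAD against `nxt`.
    Returns a dict mapping each tile's top-left corner (y, x) to (dy, dx).
    """
    h, w = prev.shape
    prev = prev.astype(np.int64)
    nxt = nxt.astype(np.int64)
    vectors = {}
    for y in range(0, h - block + 1, block):
        for x in range(0, w - block + 1, block):
            tile = prev[y:y + block, x:x + block]
            # Start from "no motion" so a candidate must be strictly better to win
            best = (0, 0)
            best_sad = np.abs(tile - nxt[y:y + block, x:x + block]).sum()
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    yy, xx = y + dy, x + dx
                    if yy < 0 or xx < 0 or yy + block > h or xx + block > w:
                        continue  # candidate block falls outside the frame
                    sad = np.abs(tile - nxt[yy:yy + block, xx:xx + block]).sum()
                    if sad < best_sad:
                        best, best_sad = (dy, dx), sad
            vectors[(y, x)] = best
    return vectors


# Usage: a 4x4 bright square moves 2 pixels to the right
prev = np.zeros((16, 16), dtype=np.uint8)
nxt = np.zeros((16, 16), dtype=np.uint8)
prev[4:8, 4:8] = 255
nxt[4:8, 6:10] = 255
vectors = block_matching(prev, nxt)
print(vectors[(4, 4)])    # (0, 2)
print(vectors[(12, 12)])  # (0, 0)
```

Flat background blocks right next to the moving square can still pick up spurious vectors here (the block at (4, 8) does), which hints at why MEMC struggles around object edges.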

MEMC is mainly used in TVs, monitors and mobile devices to improve video frame rate and give viewers a smoother viewing experience.

Advantage: Reduces motion judder, weakens trailing and ghosting, and improves image clarity.

Disadvantage: When the background behind a moving object is complex, artifacts appear along the object's edges.

Optical flow

Optical flow is an important direction in computer vision research. It infers the trajectory of every pixel from the previous and next frames and uses it to synthesize the new intermediate frames. It is somewhat similar to the way motion blur is computed.
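Once a per-pixel flow field is known, an intermediate frame can be synthesized by backward warping: each output pixel samples the source frame at a displaced position, with bilinear interpolation for sub-pixel offsets. The sketch below assumes the flow is already given (estimating it is the expensive part and is not shown), and `backward_warp` is a hypothetical helper name.

```python
import numpy as np


def backward_warp(image, flow):
    """Sample `image` at (x + u, y + v) for every pixel using bilinear interpolation.

    image: (H, W) float array; flow: (H, W, 2) array of (u, v) = (dx, dy) per pixel.
    Sample positions outside the image are clamped to the border.
    """
    h, w = image.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    src_x = np.clip(xs + flow[..., 0], 0, w - 1)
    src_y = np.clip(ys + flow[..., 1], 0, h - 1)
    x0 = np.floor(src_x).astype(int)
    y0 = np.floor(src_y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = src_x - x0  # horizontal interpolation weight
    wy = src_y - y0  # vertical interpolation weight
    top = (1 - wx) * image[y0, x0] + wx * image[y0, x1]
    bottom = (1 - wx) * image[y1, x0] + wx * image[y1, x1]
    return (1 - wy) * top + wy * bottom


# Usage: a bright pixel moves 2 px right between frame 0 and frame 1.
# At t = 0.5 the flow from the new frame back to frame 0 is (-1, 0) everywhere.
frame0 = np.array([[0.0, 0.0, 10.0, 0.0, 0.0, 0.0]])
flow_t0 = np.zeros((1, 6, 2))
flow_t0[..., 0] = -1.0
print(backward_warp(frame0, flow_t0).tolist())  # [[0.0, 0.0, 0.0, 10.0, 0.0, 0.0]]
```

Unlike frame blending, the pixel lands once, at its halfway position.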

Advantage: The picture is smoother, with less perceived lag.

Disadvantage: The computation is heavy and time-consuming; it is sensitive to lighting and prone to distorted images when the illumination changes significantly.

Super SloMo: a classic AI interpolation method in the industry

At CVPR 2018, a top computer vision conference, NVIDIA published the paper "Super SloMo: High Quality Estimation of Multiple Intermediate Frames for Video Interpolation". The paper proposed Super SloMo, which attracted wide attention in the industry.

Paper address: https://arxiv.org/pdf/1712.00080.pdf

Unlike traditional methods, Super SloMo uses deep neural networks to interpolate frames. The basic idea is to train on a large number of normal videos and slow-motion videos, so that the neural network learns to infer high-quality super slow-motion video from normal video.
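One simple way to turn high-frame-rate footage into supervised training examples is sketched below: take a window of consecutive frames, treat the first and last as the "normal" low-frame-rate input, and use every frame in between as a ground-truth target tagged with its time step t. The helper `split_window` and the 9-frame window are illustrative assumptions, not the paper's exact sampling procedure.

```python
from typing import List, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")


def split_window(window: Sequence[Frame]) -> Tuple[Frame, Frame, List[Tuple[float, Frame]]]:
    """Turn consecutive high-frame-rate frames into one training example.

    The first and last frames act as the input pair; each frame in between is a
    target paired with its normalised time step t in (0, 1).
    """
    if len(window) < 3:
        raise ValueError("need at least one frame between the two inputs")
    last = len(window) - 1
    targets = [(i / last, window[i]) for i in range(1, last)]
    return window[0], window[-1], targets


# Usage: 9 consecutive 240-fps frames -> an input pair 1/30 s apart + 7 targets
frames = [f"frame_{i:03d}" for i in range(9)]
start, end, targets = split_window(frames)
print(start, end)                # frame_000 frame_008
print([t for t, _ in targets])   # [0.125, 0.25, 0.375, 0.5, 0.625, 0.75, 0.875]
```

Strings stand in for image arrays here; in practice each element would be a decoded frame.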

The framework proposed by the Super SloMo team is built entirely on two fully convolutional U-Net networks.

First, one U-Net computes the bidirectional optical flow between the two adjacent input images. These flows are then linearly combined at each time step to approximate the bidirectional optical flow from the intermediate frame to the two input frames.
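The linear combination given in the Super SloMo paper, which assumes the optical flow field is locally smooth, is F_t→0 ≈ −(1 − t)·t·F_0→1 + t²·F_1→0 and F_t→1 ≈ (1 − t)²·F_0→1 − t·(1 − t)·F_1→0. The NumPy sketch below simply evaluates these two expressions; the function and array names are illustrative, and in the real method the flows come from the first U-Net.

```python
import numpy as np


def approximate_intermediate_flows(flow_01, flow_10, t):
    """Approximate the flows from time t back to frame 0 and forward to frame 1.

    flow_01, flow_10: (H, W, 2) bidirectional flows between the two input frames.
    t: time step of the intermediate frame, strictly between 0 and 1.
    """
    if not 0.0 < t < 1.0:
        raise ValueError("t must lie strictly between 0 and 1")
    flow_t0 = -(1.0 - t) * t * flow_01 + t * t * flow_10
    flow_t1 = (1.0 - t) ** 2 * flow_01 - t * (1.0 - t) * flow_10
    return flow_t0, flow_t1


# Usage: uniform motion of 2 px to the right, so F_1->0 = -F_0->1
flow_01 = np.zeros((4, 4, 2))
flow_01[..., 0] = 2.0
flow_10 = -flow_01
f_t0, f_t1 = approximate_intermediate_flows(flow_01, flow_10, 0.5)
print(float(f_t0[0, 0, 0]), float(f_t1[0, 0, 0]))  # -1.0 1.0
```

For uniform motion the result is exact (halfway back and halfway forward). Near motion boundaries the smoothness assumption breaks down, which is what the second network is there to correct.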

To reduce artifacts around motion boundaries, a second U-Net refines the approximated flows and predicts soft visibility maps. Finally, the two input images are warped and linearly fused to form the intermediate frame.
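The final fusion step can be sketched as a normalized weighted average: each warped input is weighted by its temporal distance (1 − t or t) and by its visibility, where the paper constrains the two visibility maps to sum to one per pixel. The names below are illustrative, and the inputs are assumed to be already warped toward time t.

```python
import numpy as np


def fuse_warped_frames(warped0, warped1, vis0, t, eps=1e-8):
    """Fuse two frames already warped toward time t using a soft visibility map.

    warped0, warped1: input frames 0 and 1 after backward warping to time t.
    vis0: per-pixel visibility of frame 0 in [0, 1]; frame 1 gets 1 - vis0.
    """
    vis1 = 1.0 - vis0
    w0 = (1.0 - t) * vis0  # closer in time and visible -> larger weight
    w1 = t * vis1
    # Normalise so the two weights sum to 1 at every pixel (eps avoids division by zero)
    return (w0 * warped0 + w1 * warped1) / (w0 + w1 + eps)


# Usage: pixel 0 is occluded in frame 1 (vis0 = 1); pixel 1 is visible in both
warped0 = np.array([[10.0, 10.0]])
warped1 = np.array([[20.0, 20.0]])
vis0 = np.array([[1.0, 0.5]])
fused = fuse_warped_frames(warped0, warped1, vis0, t=0.5)
print(np.round(fused, 3).tolist())  # [[10.0, 15.0]]
```

An occluded pixel takes its value only from the frame where it is visible, instead of being averaged with wrong content.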

In addition, the parameters of Super SloMo's flow computation network and interpolation network do not depend on the specific time step of the interpolated frame (the time step is an input to the network). It can therefore interpolate frames at any number of time steps between two frames in parallel, overcoming a limitation of many methods that can only produce a single intermediate frame.
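Because t is an input rather than something baked into the weights, all requested time steps can be stacked along a batch axis and processed at once. The sketch below computes evenly spaced time steps and broadcasts them against a placeholder flow tensor; scaling the flow by t is only a stand-in for whatever per-time-step computation the networks perform, and the helper name is hypothetical.

```python
import numpy as np


def intermediate_time_steps(num_frames: int) -> np.ndarray:
    """Evenly spaced t values for `num_frames` frames strictly between two inputs."""
    if num_frames < 1:
        raise ValueError("num_frames must be >= 1")
    return np.arange(1, num_frames + 1) / (num_frames + 1)


ts = intermediate_time_steps(7)
print(ts.tolist())  # [0.125, 0.25, 0.375, 0.5, 0.625, 0.75, 0.875]

# Placeholder (H, W, 2) flow; reshaping t to (T, 1, 1, 1) broadcasts it over every pixel,
# giving one slice per time step in a single vectorised operation.
flow_01 = np.ones((4, 4, 2))
per_step = ts[:, None, None, None] * flow_01
print(per_step.shape)  # (7, 4, 4, 2)
```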

The authors report that with their unoptimized PyTorch code, generating 7 intermediate frames at a resolution of 1280×720 takes only 0.97 seconds on a single NVIDIA GTX 1080 Ti GPU and 0.79 seconds on a single Tesla V100 GPU.
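Dividing the reported batch times by the 7 frames gives a rough per-frame cost, about 0.14 s on the GTX 1080 Ti and 0.11 s on the Tesla V100. This is only an average: the 7 frames share work such as the initial flow computation, so frames are not truly produced at a fixed per-frame cost. The helper name is illustrative.

```python
def per_frame_cost(total_seconds: float, num_frames: int):
    """Split a reported batch time into average seconds per frame and frames per second."""
    seconds_per_frame = total_seconds / num_frames
    return seconds_per_frame, 1.0 / seconds_per_frame


# Reported timings for 7 intermediate 1280x720 frames (unoptimized PyTorch code)
reported = {"GTX 1080 Ti": 0.97, "Tesla V100": 0.79}
for gpu, seconds in reported.items():
    spf, fps = per_frame_cost(seconds, 7)
    print(f"{gpu}: {spf:.3f} s per frame, about {fps:.1f} frames per second")
```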

To train the network, the authors collected 240-fps videos from YouTube and from handheld cameras: 1,100 video clips in total, comprising 300,000 individual frames at a resolution of 1080×720. These videos cover a wide range of scenes, from indoor to outdoor, from static to moving cameras, and from daily activities to professional sports.

The model was then evaluated on other datasets, where it significantly outperformed existing methods.

Follow the tutorial to run Super SloMo with one click

Although the authors of the NVIDIA paper have not yet released the dataset or the code, the community has stepped in. GitHub user avinashpaliwal has open-sourced his own PyTorch implementation of Super SloMo, with results almost the same as those described in the paper.

The project details are as follows:

The model was trained and tested with PyTorch 0.4.1 and CUDA 9.2, so both need to be installed. You also need an NVIDIA graphics card.

In addition, the model cannot be trained on videos directly, so you also need to install ffmpeg to extract frames from the videos. Once all of this is ready, you can download the Adobe 240-fps dataset for training.
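A minimal sketch of frame extraction driven from Python, assuming ffmpeg is on the PATH. It relies only on ffmpeg's standard numbered-image output pattern (`%05d.png` writes 00001.png, 00002.png, ...); the helper names, `clip.mp4` and the `frames` directory are hypothetical.

```python
import subprocess
from pathlib import Path
from typing import List


def build_extract_command(video: str, out_dir: str, pattern: str = "%05d.png") -> List[str]:
    """ffmpeg command that writes every decoded frame of `video` as a numbered image."""
    return ["ffmpeg", "-i", video, str(Path(out_dir) / pattern)]


def extract_frames(video: str, out_dir: str) -> None:
    """Create `out_dir` and run ffmpeg; raises CalledProcessError if ffmpeg fails."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_extract_command(video, out_dir), check=True)


# Usage (hypothetical file names): inspect the command, then run it
print(build_extract_command("clip.mp4", "frames"))
# extract_frames("clip.mp4", "frames")
```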

However, you don't have to prepare any of this yourself: you can simply use a ready-made setup and run Super SloMo with one click.

We found a matching tutorial on OpenBayes (https://openbayes.com), a Chinese machine learning compute-container platform. Datasets, code and computing power are all provided, so even a beginner can get started easily.

Tutorial Link:

https://openbayes.com/console/openbayes/containers/xQIPlDQ0GyD/overview

Tutorial User Guide

First, register and log in at https://openbayes.com/. Under the "Public Resources" menu, open "Public Tutorial" and select the tutorial "Super-SloMo Super Slow Motion Lens PyTorch Implementation".

The demo notebook in the tutorial is Super-SloMo.ipynb. Running it installs the environment and displays the final interpolated super slow-motion result.

You can also use your own footage: replace lightning-dick-clip.mp4 in the generation code below with the file name of your video.

The --scale argument controls the speed of the generated video. For example, setting it to 4 produces 4x slow motion.
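The arithmetic behind the slow-motion factor, as a sketch: if a factor k inserts k − 1 new frames between every adjacent pair of input frames, N input frames become (N − 1)·k + 1 frames. Whether `eval.py` keeps the original frame rate for playback is an assumption here; if it does, the clip runs roughly k times longer. Both helper names are hypothetical.

```python
def slow_motion_frame_count(input_frames: int, scale: int) -> int:
    """Frames after inserting (scale - 1) new frames between each adjacent pair."""
    if input_frames < 1 or scale < 1:
        raise ValueError("input_frames and scale must be >= 1")
    return (input_frames - 1) * scale + 1


def playback_seconds(frame_count: int, fps: float) -> float:
    """Duration of `frame_count` frames played back at `fps`."""
    return frame_count / fps


# Usage: a 3-second, 30-fps clip (90 frames) with scale=4, played back at 30 fps
n = slow_motion_frame_count(90, 4)
print(n)                                  # 357
print(round(playback_seconds(n, 30), 1))  # 11.9
```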

Generation code:

```
# Interpolate the clip with the pretrained checkpoint; --scale=4 gives 4x slow motion
!python3 'Super-SloMo/eval.py' \
    'lightning-dick-clip.mp4' \
    --checkpoint='/openbayes/input/input0/SuperSloMo.ckpt' \
    --output='output-tmp.mp4' \
    --scale=4
print('Done')
```

Convert video format code:

```
# Re-encode the result with H.264 video (libx264) and AAC audio
!ffmpeg -i output-tmp.mp4 -vcodec libx264 -acodec aac output.mp4
```

In this tutorial, a video clip from the Internet was used for Super SloMo interpolation, and the following results were obtained:

The platform currently also offers free vGPU time every week, so anyone can complete this tutorial easily. Give it a try!

References:

Paper: https://arxiv.org/pdf/1712.00080.pdf

Project homepage: http://jianghz.me/projects/superslomo/

Zhihu article: https://zhuanlan.zhihu.com/p/86426432