Command Palette

Search for a command to run...

Face Recognition Is Ineffective for Animation, Disney Creates a Face Recognition Library Specifically for Animation

Face recognition has also encountered a pitfall. It can recognize three-dimensional images, but it is ineffective for two-dimensional images. Disney's technical team is developing this algorithm to help animators with post-search. The team uses PyTorch to greatly improve efficiency.

When it comes to animation, we have to mention Disney, a business empire established in 1923. Disney, which started out as an animation company, has been leading the development of animated films around the world to this day.

Behind every animated film, there are hundreds of people's hard work and sweat. Since the release of the first computer 3D animation "Toy Story", Disney has embarked on the journey of digital animation creation. With the development of CGI and AI technology, the production and archiving methods of Disney animated films have also undergone great changes.

Currently, Disney has also absorbed a large number of computer scientists who are using cutting-edge technology to change the way content is created and reduce the burden on filmmakers behind the scenes.

How does a century-old film giant manage digital content?

It is understood that there are about 800 employees from 25 different countries in Walt Disney Animation Studios, including artists, directors, screenwriters, producers and technical teams.

Making a movie requires going through many complex processes, from generating inspiration, to writing a story outline, to drafting the script, art design, character design, dubbing, animation effects, special effects production, editing, post-production, etc.

As of March 2021, Walt Disney Animation Studios, which specializes in the production of animated films alone, has produced and released 59 feature-length animations, and the number of animated characters in these films adds up to hundreds and thousands.

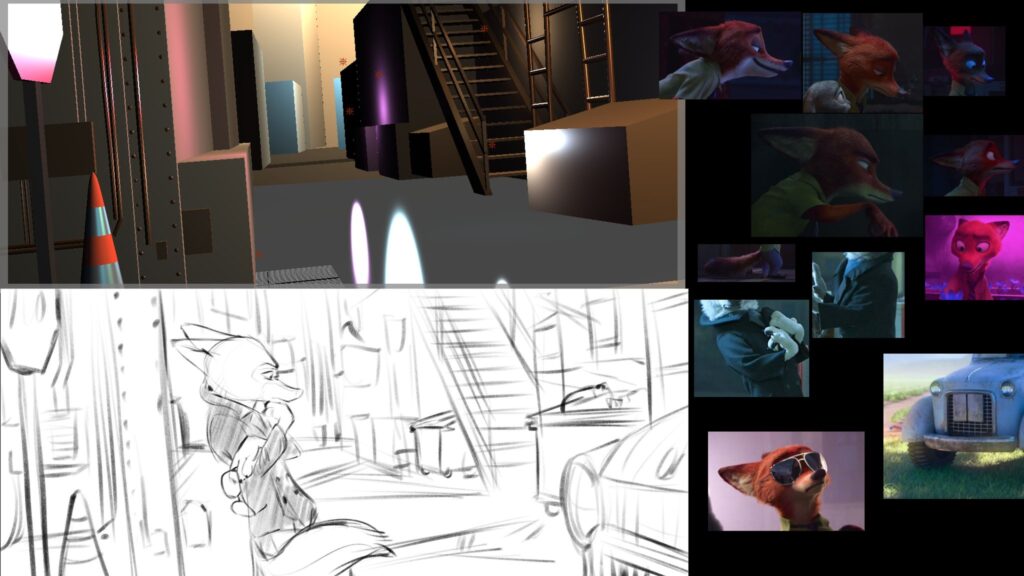

When animators are working on a sequel or want to reference a certain character, they need to search for specific characters, scenes or objects in the massive content archive.They often need to spend hours watching videos, relying solely on their eyes to filter out the clips they need.

To solve this problem, Disney started a project called 「Content Genome」AI projects,Designed to create an archive of Disney digital content, it helps animators quickly and accurately identify faces in animations (whether they are people or objects).

Training animation-specific face recognition algorithms

The first step in digitizing a content library is to detect and mark the content in past works to facilitate searches by producers and users.

Facial recognition technology is already relatively mature, but can the same method be used for facial recognition in animations?

After experiments, the Content Genome technical team found that this was only possible in certain circumstances.

They selected two animated films, "Elena of Aval" and "The Lion Guard", and manually annotated some samples, marking the faces in hundreds of frames of the film with squares.The team verified that face recognition technology based on the HOG + SVM pipeline performed poorly on animated faces (especially human-like faces and animal faces).

After analysis, the team confirmed that methods like HOG+SVM are robust to changes in color, brightness or texture, but the models used can only match animated characters with human proportions (i.e. two eyes, a nose and a mouth).

Furthermore, since the background of animated content usually has flat areas and few details, the Faster-RCNN model will mistakenly identify anything that stands out against the simple background as an animated face.

Therefore, the team decided they needed a technique that could learn more abstract concepts about faces.

The team chose to use PyTorch to train the model. The team introduced,With PyTorch, they can access state-of-the-art pre-trained models to meet their training needs and make the archiving process more efficient.

During the training process, the team found that their dataset had enough positive samples, but not enough negative samples to train the model. They decided to increase the initial dataset with other images that did not contain animated faces but had animated features.

In order to do this technically, They extended Torchvision’s Faster-RCNN implementation to allow loading negative samples during training without annotations.

This is also a new feature that the team has made for Torchvision 0.6 under the guidance of Torchvision core developers.Adding negative examples to the dataset can significantly reduce false positives at inference time, leading to superior results.

Using PyTorch to process videos increases efficiency by 10 times

After achieving facial recognition for animated characters, the team’s next goal is to speed up the video analysis process, and applying PyTorch can effectively parallelize and accelerate other tasks.

The team introduced,Reading and decoding the video is also time-consuming, so the team used a custom PyTorch IterableDataset, combined with PyTorch’s DataLoader, to allow different parts of the video to be read using parallel CPUs.

This way of reading videos is already very fast, but the team also tried to complete all calculations with just one read. So, they executed most of the pipeline in PyTorch and took GPU execution into consideration. Each frame is sent to the GPU only once, and then all algorithms are applied to each batch, reducing the communication between the CPU and GPU to a minimum.

The team also used PyTorch to implement more traditional algorithms, such as the shot detector, which does not use neural networks and mainly performs operations such as color space transformation, histograms, and singular value decomposition (SVD). PyTorch allowed the team to move computations to the GPU with minimal cost and easily recycle intermediate results shared between multiple algorithms.

By using PyTorch, the team offloaded the CPU portion to the GPU and used DataLoader to accelerate video reading, making full use of the hardware and ultimately reducing processing time by 10 times.

The team's developers concluded that PyTorch's core components, such as IterableDataset, DataLoader, and Torchvision, allow the team to improve data loading and algorithm efficiency in production environments. From inference to model training resources to a complete pipeline optimization toolset, the team is increasingly choosing to use PyTorch.

This article is compiled and published by the official account PyTorch Developer Community