PyTorch Matrix Multiplication Method Summary & Problem Solving

In machine learning and deep learning, matrix operations are among the most common and effective ways to improve computational efficiency. Because features and weights are stored as vectors and matrices, these operations matter all the more. Core methods such as gradient descent, backpropagation, and matrix factorization all rely on matrix operations.

In deep learning, neural networks store their weights in matrices, and linear algebra routines on the GPU make computations on those matrices simple and fast.

Small-scale matrix operations can be computed quickly enough with for loops. With large amounts of data, however, loop statements slow the computation down considerably. In those cases we usually switch to vectorized matrix multiplication, which greatly improves efficiency.
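As a minimal sketch of the difference, the snippet below computes the same product once with nested Python loops and once with a single matrix multiplication. The tensor names and sizes (`X`, `W`, 4 x 3 and 3 x 2) are illustrative assumptions, not taken from the original article.

```python
import torch

torch.manual_seed(0)
X = torch.randn(4, 3)  # 4 samples with 3 features each (illustrative sizes)
W = torch.randn(3, 2)  # weights mapping 3 features to 2 outputs

# Loop version: one dot product per output element.
out_loop = torch.zeros(4, 2)
for i in range(4):
    for j in range(2):
        out_loop[i, j] = torch.dot(X[i], W[:, j])

# Vectorized version: a single matrix multiplication.
out_mm = X @ W

# Both approaches agree up to floating-point rounding.
print(torch.allclose(out_loop, out_mm, atol=1e-5))
```

The loop issues one small operation per output element, while the vectorized version hands the whole computation to one optimized kernel, which is where the speedup on large inputs comes from.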

Common matrix, vector, and scalar multiplication methods

- torch.mm() 2D matrix multiplication

torch.mm(mat1, mat2, out=None), where mat1 is (n*m) and mat2 is (m*d); the output out has dimension (n*d).

Note: Calculates the matrix multiplication of two 2D matrices and does not support broadcasting.
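A short sketch of `torch.mm` with illustrative shapes (n=2, m=3, d=4, chosen here only for the example). The second part shows that a 3-D input is rejected rather than broadcast.

```python
import torch

mat1 = torch.randn(2, 3)  # n x m
mat2 = torch.randn(3, 4)  # m x d

out = torch.mm(mat1, mat2)
print(out.shape)  # torch.Size([2, 4])

# torch.mm only accepts 2-D inputs; a 3-D tensor is not broadcast.
rejected_3d = False
try:
    torch.mm(torch.randn(5, 2, 3), mat2)
except RuntimeError as err:
    rejected_3d = True
    print("torch.mm failed:", err)
```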

- torch.bmm() 3D batch matrix multiplication

torch.bmm(bmat1, bmat2, out=None), where bmat1 is (B*n*m) and bmat2 is (B*m*d); the output out has dimension (B*n*d).

Note: Both inputs must be 3-D tensors with the same first dimension (the batch dimension). Broadcasting is not supported.
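The following sketch uses assumed sizes (B=10, n=3, m=4, d=5) to show the output shape of `torch.bmm` and to check that each batch slice is an ordinary 2-D product.

```python
import torch

torch.manual_seed(0)
bmat1 = torch.randn(10, 3, 4)  # B x n x m
bmat2 = torch.randn(10, 4, 5)  # B x m x d

out = torch.bmm(bmat1, bmat2)
print(out.shape)  # torch.Size([10, 3, 5])

# Slice k of the result is the plain matrix product of slice k of each input.
slice_matches = torch.allclose(out[0], torch.mm(bmat1[0], bmat2[0]), atol=1e-5)
print(slice_matches)
```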

- torch.matmul() mixed matrix multiplication

torch.matmul(input, other, out=None) computes the matrix product of two tensors. Its behavior depends on the dimensions of both inputs (see the official documentation for the full rules), and it supports broadcasting over the batch dimensions.
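To make the dimension-dependent behavior concrete, here is a small sketch that runs `torch.matmul` on several input combinations and prints the resulting shapes. The tensor names and sizes are assumptions made for illustration.

```python
import torch

vec = torch.randn(4)
mat = torch.randn(3, 4)
batch = torch.randn(10, 3, 4)

# 1-D @ 1-D: dot product, returns a 0-dimensional tensor.
print(torch.matmul(vec, vec).shape)        # torch.Size([])
# 2-D @ 1-D: matrix-vector product.
print(torch.matmul(mat, vec).shape)        # torch.Size([3])
# 2-D @ 2-D: ordinary matrix product.
print(torch.matmul(mat, mat.t()).shape)    # torch.Size([3, 3])
# 3-D @ 1-D: the vector is applied to every matrix in the batch.
print(torch.matmul(batch, vec).shape)      # torch.Size([10, 3])
# 3-D @ 2-D: the 2-D matrix is broadcast across the batch dimension.
print(torch.matmul(batch, mat.t()).shape)  # torch.Size([10, 3, 3])
```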

- torch.mul() Matrix element-wise multiplication

torch.mul(mat1, other, out=None) multiplies element by element. The multiplier other can be a scalar or a tensor of any dimension, as long as its shape can be broadcast against mat1; broadcasting is supported.
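A brief sketch of `torch.mul` with a scalar, a broadcast row vector, and a same-shape tensor. The values are made up for the example.

```python
import torch

mat = torch.tensor([[1., 2.], [3., 4.]])
row = torch.tensor([10., 100.])

by_scalar = torch.mul(mat, 10)  # every element times 10
by_row = torch.mul(mat, row)    # row is broadcast across both rows of mat
by_self = torch.mul(mat, mat)   # element-wise square, not a matrix product

print(by_scalar)  # tensor([[10., 20.], [30., 40.]])
print(by_row)     # tensor([[ 10., 200.], [ 30., 400.]])
print(by_self)    # tensor([[ 1.,  4.], [ 9., 16.]])
```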

- torch.mv() matrix-vector multiplication

torch.mv(mat, vec, out=None) multiplies the matrix mat and the vector vec. If mat is an n×m tensor and vec is an m-element one-dimensional tensor, it will output an n-element one-dimensional tensor, and does not support broadcasting.
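For instance, with an assumed 2×3 matrix and a 3-element vector, `torch.mv` returns a 2-element vector:

```python
import torch

mat = torch.tensor([[1., 2., 3.],
                    [4., 5., 6.]])  # n x m with n=2, m=3
vec = torch.tensor([1., 0., -1.])   # m elements

out = torch.mv(mat, vec)
# Row 0: 1*1 + 2*0 + 3*(-1) = -2; row 1: 4*1 + 5*0 + 6*(-1) = -2
print(out)  # tensor([-2., -2.])
```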

- torch.dot() tensor dot multiplication

torch.dot(tensor1, tensor2) calculates the dot product of two tensors. Both tensors must be 1-D vectors with the same number of elements; broadcasting is not supported.
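A minimal sketch of `torch.dot` with example values, followed by a check that 2-D inputs are rejected:

```python
import torch

t1 = torch.tensor([1., 2., 3.])
t2 = torch.tensor([4., 5., 6.])

result = torch.dot(t1, t2)  # 1*4 + 2*5 + 3*6 = 32
print(result)  # tensor(32.)

# torch.dot only accepts 1-D tensors; 2-D inputs raise an error.
rejected_2d = False
try:
    torch.dot(torch.ones(2, 3), torch.ones(2, 3))
except RuntimeError as err:
    rejected_2d = True
    print("torch.dot failed:", err)
```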

Forum Hot Questions

Q: How to multiply batches of data vectors without using a for loop?

- input shape: N x M x VectorSize
- weight shape: M x VectorSize x VectorSize
- target output shape: N x M x VectorSize

N represents the batch size, M represents the number of vectors, and VectorSize represents the size of the vectors.

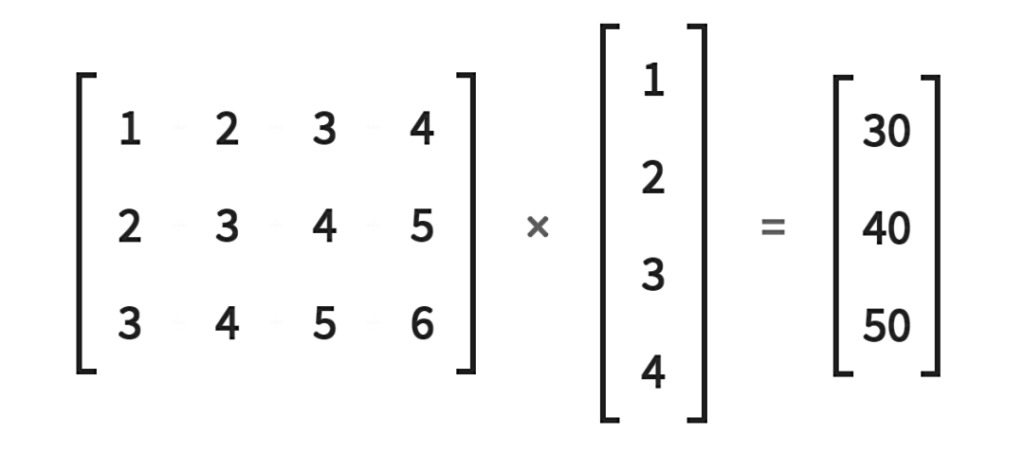

As shown in the following figure: Solution:

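    import torch

    N, M, V = 2, 3, 5
    a = torch.randn(N, M, V)             # input:  N x M x V
    b = torch.randn(M, V, V)             # weight: M x V x V
    a_expand = a.unsqueeze(-2)           # N x M x 1 x V (each vector as a row)
    b_expand = b.expand(N, -1, -1, -1)   # N x M x V x V (repeated over the batch)
    c = torch.matmul(a_expand, b_expand).squeeze(-2)  # N x M x V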
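One way to convince yourself that this solution is correct is to compare it with an explicit double loop. The sketch below does that, and also shows `torch.einsum` as an alternative formulation; the einsum variant is not from the original post. It relies on `torch.matmul` broadcasting `b` over the batch dimension, so the explicit `expand` is omitted here.

```python
import torch

torch.manual_seed(0)
N, M, V = 2, 3, 5
a = torch.randn(N, M, V)
b = torch.randn(M, V, V)

# Vectorized: treat each vector as a 1 x V row and broadcast b over N.
c = torch.matmul(a.unsqueeze(-2), b).squeeze(-2)

# Reference: multiply vector (n, m) by weight matrix m, one pair at a time.
c_loop = torch.empty(N, M, V)
for n in range(N):
    for m in range(M):
        c_loop[n, m] = a[n, m] @ b[m]

# Alternative (not from the post): the same contraction written with einsum.
c_einsum = torch.einsum("nmv,mvw->nmw", a, b)

print(c.shape)  # torch.Size([2, 3, 5])
print(torch.allclose(c, c_loop, atol=1e-5))
print(torch.allclose(c, c_einsum, atol=1e-5))
```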

Original post address: https://discuss.pytorch.org/t/batch-matrix-vector-multiplication-without-for-loop/112841

Q: A RuntimeError occurs when computing matrix-vector multiplication on a batch of input vectors

Given:

    # (batch x inp)
    v = torch.randn(5, 15)
    # (inp x output)
    M = torch.randn(15, 20)

Calculate:

    # (batch x output)
    out = torch.Tensor(5, 20)
    for i, batch_v in enumerate(v):
        out[i] = (batch_v * M).t()

However, the multiplication seems to expect two inputs of the same dimensions and raises the following error: RuntimeError: inconsistent tensor size at /home/enrique/code/vendor/pytorch/torch/lib/TH/generic/THTensorMath.c:623

Why does this error occur? Is there a way to avoid looping over row vectors?

Solution:

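The * operator performs element-wise multiplication, which is why the shapes do not match. If you are using Python 3 (3.5 or later), you can use the @ operator for matrix-vector and matrix-matrix multiplication.

Because a batch of vectors multiplied by a matrix is simply a matrix-matrix multiplication, you can also drop the loop and call mm on the whole batch, v.mm(M). Note that mm requires 2-D inputs, so it cannot be called on a single 1-D row such as batch_v inside the loop.

You can also use methods such as bmm and baddbmm to eliminate loops in Python.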
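The options above can be sketched as follows, reusing the shapes from the question. The corrected loop and the `bmm` variant are illustrative reconstructions rather than code from the original thread.

```python
import torch

torch.manual_seed(0)
v = torch.randn(5, 15)   # (batch x inp)
M = torch.randn(15, 20)  # (inp x output)

# Corrected loop: @ performs a vector-matrix product for each row.
out_loop = torch.empty(5, 20)
for i, batch_v in enumerate(v):
    out_loop[i] = batch_v @ M

# Loop-free: the whole batch at once.
out_at = v @ M
out_mm = v.mm(M)

# bmm variant: view each vector as a 1 x inp matrix and repeat M per batch item.
out_bmm = torch.bmm(v.unsqueeze(1), M.unsqueeze(0).expand(5, -1, -1)).squeeze(1)

print(out_mm.shape)  # torch.Size([5, 20])
print(torch.allclose(out_loop, out_mm, atol=1e-5))
print(torch.allclose(out_bmm, out_mm, atol=1e-5))
```

For this particular problem `v @ M` is the simplest choice; `bmm` becomes useful when every batch item has its own matrix.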

Original post address: https://discuss.pytorch.org/t/matrix-vector-multiply-handling-batched-data/203

Q: How to calculate matrix-vector multiplication without using loop statements?

How can we multiply a 2×2 matrix by three vectors of size 2 without using loops?

Remark:

1. When using a loop statement, the code is as follows:

    A = torch.tensor([[1., 2.], [3., 4.]])
    b = torch.tensor([[3., 4.], [5., 6.], [7., 8.]])
    res = []
    for i in range(b.shape[0]):
        res.append(torch.matmul(A, b[i]))

2. Trying torch.matmul(A.repeat((2, 1, 1)), b) does not work.

Solution:

This can be achieved using A.matmul(b.t()). The result is a 2×3 matrix whose i-th column equals A multiplied by b[i].
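As a quick check, the sketch below compares the loop from the question with the transposed product. The final transpose is added here only so that each row lines up with one loop result.

```python
import torch

A = torch.tensor([[1., 2.], [3., 4.]])
b = torch.tensor([[3., 4.], [5., 6.], [7., 8.]])

# Loop version from the question, stacked into a 3 x 2 tensor.
res_loop = torch.stack([torch.matmul(A, b[i]) for i in range(b.shape[0])])

# Vectorized: columns of A @ b^T are the individual products A @ b[i].
res = A.matmul(b.t())
print(res)
# tensor([[11., 17., 23.],
#         [25., 39., 53.]])

# Transposing puts one result per row, matching the loop output.
print(torch.equal(res.t(), res_loop))  # True
```

Equivalently, `b.matmul(A.t())` gives the 3 x 2 layout directly.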

Original post address: https://discuss.pytorch.org/t/vectorize-matrix-vector-multiplication/88051
