Remote Sensing Resources (Part 1): Using Open Source Code to Train Land Classification Models

Land classification is an important application of remote sensing imagery. This article introduces several common land classification methods and uses open source semantic segmentation code to build a land classification model.

Remote sensing images are important data for surveying, mapping, and geographic information work. They are of great significance for monitoring national geographic conditions and updating geographic information databases, and they play an increasingly important role in military, commercial, and civilian fields.

In recent years, as the country's satellite image acquisition capabilities have improved, remote sensing image data can be collected far more efficiently. Sensors of many kinds now coexist: low spatial resolution, high spatial resolution, wide field of view, multi-angle, radar, and others.

This full range of sensors meets the needs of earth observation for different purposes. However, it has also caused problems such as inconsistent remote sensing image data formats and heavy storage consumption, so image processing often poses great challenges.

Take land classification as an example. In the past, classifying land from remote sensing images often relied on a great deal of manual labeling and statistics, which took several months or even a year. Given the complexity and diversity of land types, human statistical errors were also inevitable.

With the development of artificial intelligence technology, the acquisition, processing and analysis of remote sensing images have become more intelligent and efficient.

Common land classification methods

Commonly used land classification methods fall into three broad categories: traditional classification methods based on GIS, classification methods based on machine learning algorithms, and classification methods using neural network semantic segmentation.

Traditional method: using GIS classification

GIS, short for Geographic Information System, is a tool often used when processing remote sensing images. It integrates technologies such as relational database management, efficient graphics algorithms, interpolation, zoning, and network analysis, which makes spatial analysis simple.

GIS spatial analysis can be used to obtain the spatial location, distribution, morphology, formation, and evolution of a given land type, and from these to identify land features and make judgements.

Machine Learning: Using Algorithms for Classification

Traditional land classification methods include supervised classification and unsupervised classification.

Supervised classification is also called training classification. Pixels of unknown category are compared with the pixels of training samples whose categories have been confirmed, and each unknown pixel is assigned to the category it matches best. This is repeated until the whole image is classified.

In supervised classification, when the training samples are not accurate enough, the training area is usually reselected or corrected by visual inspection to ensure the accuracy of the training sample pixels.
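As a rough illustration of the idea, the sketch below implements a minimum-distance-to-mean classifier, one of the simplest forms of supervised pixel classification. The class names, band values, and helper functions are made up for this example and are not taken from the tutorial project.

```python
from math import dist

# Hypothetical training samples: (red, green, near-infrared) reflectance per pixel,
# grouped by a confirmed land class. All values are invented for illustration.
training_samples = {
    "water": [(0.05, 0.08, 0.02), (0.06, 0.09, 0.03)],
    "forest": [(0.04, 0.10, 0.45), (0.05, 0.12, 0.50)],
    "built_up": [(0.30, 0.28, 0.32), (0.34, 0.30, 0.35)],
}

def class_means(samples):
    """Average the training pixels of each class, band by band."""
    means = {}
    for label, pixels in samples.items():
        n = len(pixels)
        means[label] = tuple(sum(band) / n for band in zip(*pixels))
    return means

def classify(pixel, means):
    """Assign the pixel to the class whose mean spectrum is closest."""
    return min(means, key=lambda label: dist(pixel, means[label]))

means = class_means(training_samples)
unknown_pixels = [(0.05, 0.11, 0.48), (0.32, 0.29, 0.33), (0.06, 0.08, 0.02)]
labels = [classify(p, means) for p in unknown_pixels]
print(labels)
```

If the training pixels are mislabeled, the class means shift and every later decision inherits the error, which is why the training area is rechecked when accuracy is poor.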

Unsupervised classification requires no prior category definitions. Pixels are grouped statistically, based entirely on their spectral characteristics in the remote sensing image. This method is highly automated and requires little human intervention.
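One common way to group pixels by spectrum alone is k-means clustering. The sketch below is a minimal pure-Python version that assumes two-band pixels and hand-picked initial centers; the data and function names are hypothetical.

```python
from math import dist

def nearest_center(pixel, centers):
    """Index of the center closest to the pixel."""
    return min(range(len(centers)), key=lambda i: dist(pixel, centers[i]))

def kmeans(pixels, centers, iterations=10):
    """Plain k-means: alternate between assigning pixels and updating centers."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in pixels:
            clusters[nearest_center(p, centers)].append(p)
        # Keep the old center if a cluster ends up empty.
        centers = [
            tuple(sum(band) / len(c) for band in zip(*c)) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    assignments = [nearest_center(p, centers) for p in pixels]
    return centers, assignments

# Invented (red, near-infrared) values for six pixels.
pixels = [(0.05, 0.02), (0.06, 0.03), (0.05, 0.45), (0.04, 0.50), (0.30, 0.32), (0.34, 0.35)]
initial_centers = [pixels[0], pixels[2], pixels[4]]
centers, assignments = kmeans(pixels, initial_centers)
print(assignments)
```

The clusters come back as unnamed indices, so an analyst still has to decide afterwards which land type each cluster corresponds to.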

With the help of machine learning algorithms such as support vector machines and maximum likelihood methods, the efficiency and accuracy of supervised and unsupervised classification can be greatly improved.

Neural Networks: Using Semantic Segmentation for Classification

Semantic segmentation is an end-to-end pixel-level classification method that can enhance the machine's understanding of environmental scenes and is widely used in fields such as autonomous driving and land planning.

Semantic segmentation technology based on deep neural networks performs better than traditional machine learning methods when dealing with pixel-level classification tasks.
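To make "pixel-level classification" concrete, the sketch below turns per-pixel class scores into a label map by taking the highest-scoring class at each pixel. The scores, class names, and the `to_label_map` helper are invented for illustration; in practice the scores would come from a trained network.

```python
# Hypothetical per-pixel class scores for a 2x2 image and 3 classes,
# laid out as scores[row][col][class].
CLASSES = ["water", "forest", "built_up"]
scores = [
    [[2.1, 0.3, -1.0], [0.2, 1.7, 0.1]],
    [[-0.5, 0.4, 2.2], [0.1, 3.0, 0.2]],
]

def to_label_map(scores):
    """Pick the highest-scoring class index independently for every pixel."""
    return [[max(range(len(px)), key=px.__getitem__) for px in row] for row in scores]

label_map = to_label_map(scores)
named = [[CLASSES[i] for i in row] for row in label_map]
print(named)
```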

High-resolution remote sensing images have complex scenes and rich details, and the spectral differences between objects are uncertain, which can easily lead to low segmentation accuracy or even invalid segmentation.

When semantic segmentation is used to process high-resolution and ultra-high-resolution remote sensing images, pixel features can be extracted more accurately and specific land types can be identified quickly, which speeds up remote sensing image processing.

Commonly used open source semantic segmentation models

Commonly used pixel-level semantic segmentation open source models include FCN, SegNet, and DeepLab.

1. Fully Convolutional Network (FCN)

Feature: End-to-end semantic segmentation

Advantages: No restriction on image size; general and efficient

Shortcomings: Not fast enough for real-time inference; results are not fine enough and are insensitive to image details

2. SegNet

Feature: Passes the max pooling indices to the decoder, improving segmentation resolution

Advantages: Fast training, high efficiency, and low memory usage

Shortcomings: Inference is not purely feed-forward; an optimization step is needed to determine the MAP labels
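The pooling-index idea can be shown in one dimension. This is a simplified sketch rather than SegNet's actual implementation: the encoder records where each maximum came from, and the decoder puts values back at exactly those positions. Function names and data are hypothetical.

```python
def max_pool_with_indices(values, size=2):
    """Non-overlapping 1-D max pooling that also records where each max came from."""
    pooled, indices = [], []
    for start in range(0, len(values) - size + 1, size):
        window = values[start:start + size]
        offset = max(range(size), key=window.__getitem__)
        pooled.append(window[offset])
        indices.append(start + offset)
    return pooled, indices

def max_unpool(pooled, indices, length):
    """Place each pooled value back at its recorded position; fill the rest with zeros."""
    output = [0.0] * length
    for value, index in zip(pooled, indices):
        output[index] = value
    return output

feature = [0.1, 0.9, 0.4, 0.2, 0.7, 0.3]
pooled, indices = max_pool_with_indices(feature)
restored = max_unpool(pooled, indices, len(feature))
print(pooled, indices, restored)
```

Because only the indices are stored, not the full encoder feature maps, the decoder can restore spatial positions at a low memory cost.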

3. DeepLab

DeepLab was released by Google AI and advocates using deep convolutional neural networks (DCNNs) for semantic segmentation. There are four versions in total: v1, v2, v3, and v3+.

DeepLab-v1 addresses the information loss caused by pooling by introducing dilated (atrous) convolution, which enlarges the receptive field without increasing the number of parameters while preserving information.
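The effect of dilation can be checked with a small one-dimensional example. The sketch below assumes a stride of 1 and no padding; `effective_kernel_size` and `dilated_conv1d` are illustrative helpers, not functions from the DeepLab code.

```python
def effective_kernel_size(kernel_size, dilation):
    """Span covered by a dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)

def dilated_conv1d(signal, kernel, dilation=1):
    """'Valid' 1-D dilated convolution (cross-correlation, as in deep learning)."""
    span = effective_kernel_size(len(kernel), dilation)
    return [
        sum(w * signal[start + j * dilation] for j, w in enumerate(kernel))
        for start in range(len(signal) - span + 1)
    ]

signal = [1, 2, 3, 4, 5, 6, 7]
kernel = [1, 1, 1]  # three weights regardless of the dilation rate

dense = dilated_conv1d(signal, kernel, dilation=1)    # each output sees 3 neighbours
dilated = dilated_conv1d(signal, kernel, dilation=2)  # each output spans 5 positions
print(dense, dilated)
```

With a dilation rate of 2, the same three weights cover a span of five input positions, so the receptive field grows while the parameter count stays the same.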

DeepLab-v2 builds on v1 and adds parallel multi-scale processing, which handles the simultaneous segmentation of objects of different sizes.

DeepLab-v3 applies dilated convolution in cascaded modules and improves the ASPP (atrous spatial pyramid pooling) module.

DeepLab-v3+ uses the ASPP module within an encoder-decoder structure, which recovers fine object edges and refines the segmentation results.

Model training preparation

Purpose: Based on DeepLab-v3+, develop a 7-class land classification model

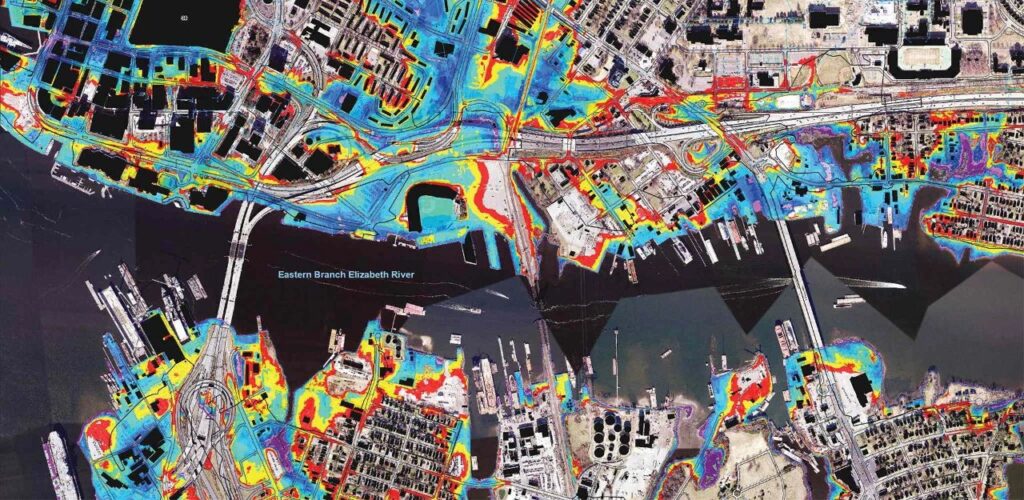

Data: 304 remote sensing images of a certain area from Google Earth. In addition to the original images, the dataset includes professionally annotated 7-class maps, 7-class masks, 25-class maps, and 25-class masks. The images are 560 × 560 pixels, with a spatial resolution of 1.2 m.

The upper part is the original image, and the lower part is the 7-class map.

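The training configuration code is as follows:

    # DeepLabV3Plus comes from the project's own model code (import not shown in the article).
    import torch.optim as optim
    from torch.nn import CrossEntropyLoss
    from torch.optim import lr_scheduler

    net = DeepLabV3Plus(backbone='xception')
    criterion = CrossEntropyLoss()
    optimizer = optim.SGD(net.parameters(), lr=0.05, momentum=0.9, weight_decay=0.00001)
    # Polynomial decay: the learning rate factor falls from 1 to 0 over 400,000 iterations.
    lr_fc = lambda iteration: (1 - iteration / 400000) ** 0.9
    exp_lr_scheduler = lr_scheduler.LambdaLR(optimizer, lr_fc, -1)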
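To see what this schedule does in practice, the sketch below evaluates the same polynomial decay in plain Python. `LambdaLR` multiplies the optimizer's initial learning rate by the value the lambda returns, so the effective rate is 0.05 × (1 − iteration / 400000)^0.9. The constant names and the `poly_lr` helper are illustrative, and the sketch assumes the scheduler is stepped once per iteration.

```python
BASE_LR = 0.05           # initial learning rate from the configuration
MAX_ITERATIONS = 400000  # denominator used in the lambda
POWER = 0.9

def poly_lr(iteration):
    """Learning rate after `iteration` scheduler steps under the polynomial decay."""
    return BASE_LR * (1 - iteration / MAX_ITERATIONS) ** POWER

for it in (0, 100000, 200000, 300000, 400000):
    print(f"iteration {it:>6}: lr = {poly_lr(it):.5f}")
```

The rate starts at 0.05 and decays smoothly to 0 at iteration 400,000. If the run stops earlier, the learning rate never reaches zero.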

Training Details

Compute: NVIDIA T4

Training framework: PyTorch 1.2

Iterations: 600 epochs

Training duration: About 50 h

IoU: 0.8285 (training data)

AC: 0.7838 (training data)
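The article does not say exactly how IoU and AC were computed. As a rough guide, the sketch below assumes AC means overall pixel accuracy and IoU means mean intersection over union across classes, both derived from a confusion matrix. The labels and function names are made up for illustration.

```python
def confusion_matrix(truth, prediction, num_classes):
    """matrix[t][p] counts pixels of true class t predicted as class p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(truth, prediction):
        matrix[t][p] += 1
    return matrix

def pixel_accuracy(matrix):
    """Fraction of pixels whose predicted class equals the true class."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total

def mean_iou(matrix):
    """Average of TP / (TP + FP + FN) over classes that appear at all."""
    ious = []
    for c in range(len(matrix)):
        tp = matrix[c][c]
        fn = sum(matrix[c]) - tp
        fp = sum(row[c] for row in matrix) - tp
        if tp + fp + fn:
            ious.append(tp / (tp + fp + fn))
    return sum(ious) / len(ious)

# Flattened ground-truth and predicted class indices for eight pixels (invented).
truth      = [0, 0, 1, 1, 2, 2, 2, 2]
prediction = [0, 1, 1, 1, 2, 2, 0, 2]
matrix = confusion_matrix(truth, prediction, num_classes=3)
print(pixel_accuracy(matrix), mean_iou(matrix))
```

IoU penalizes both missed and spurious pixels for each class, so the two numbers can differ noticeably when classes are unbalanced.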

Dataset link: https://openbayes.com/console/openbayes/datasets/qiBDWcROayo

Detailed training process direct link: https://openbayes.com/console/openbayes/containers/dOPqM4QBeM6

Tutorial Usage

The sample notebook in the tutorial is predict.ipynb. Running it installs the environment and displays the predictions of the existing model.

Project Path

– Test image path:

semantic_pytorch/out/result/pic3

– Mask image path:

semantic_pytorch/out/result/label

– Predict image path:

semantic_pytorch/out/result/predict

– Training data list: train.csv

– Test data list: test.csv

Instructions

To train the model, enter the semantic_pytorch directory. The trained model is saved as model/new_deeplabv3_cc.pt.

The model is DeepLabV3plus. For training, the loss is cross entropy (CrossEntropyLoss), the number of epochs is 600, and the initial learning rate is 0.05.

Training instructions:

    python main.py

To use the model we have already trained, place it in the model folder as fix_deeplab_v3_cc.pt and call it directly in predict.py.

Prediction instructions:

    python predict.py

Tutorial address: https://openbayes.com/console/openbayes/containers/dOPqM4QBeM6

Model Author:

Wang Yanxin, a second-year software engineering student at Heilongjiang University, currently interning at OpenBayes.

Question 1: In order to develop this model, what channels did you use and what information did you consult?

Wang Yanxin: Mainly through technical communities, GitHub, and similar channels. I read some DeepLab-v3+ papers and related project cases to learn in advance what the pitfalls were and how to overcome them, so that I was prepared to look up and solve problems as they came up during model development.

Question 2: What obstacles did you encounter during the process? How did you overcome them?

Wang Yanxin: There was not enough data, which resulted in only average IoU and AC. Next time, I could try a public remote sensing dataset with richer data.

Question 3: What other directions do you want to try regarding remote sensing?

Wang Yanxin: This time I classified land. Next, I want to combine machine learning and remote sensing to analyze ocean landscapes and marine elements, or combine them with acoustic technology to try to identify and assess seabed topography.

The amount of data used in this training is relatively small, and the IoU and AC performance on the training set is average. You can also try to use existing public remote sensing datasets for model training. Generally speaking, the more thorough the training and the richer the training data, the better the model performance.

In the next article in this series, we have compiled and categorized 11 mainstream public remote sensing datasets. You can choose among them according to your needs and train a more complete model based on the training approach provided in this article.
