Beyond PyTorch and TensorFlow, This Chinese Deep Learning Framework Has Something Special

With so many deep learning frameworks already available, why do we need OneFlow? In machine learning, Yuan Jinhui takes a longer view than 90% of his peers.

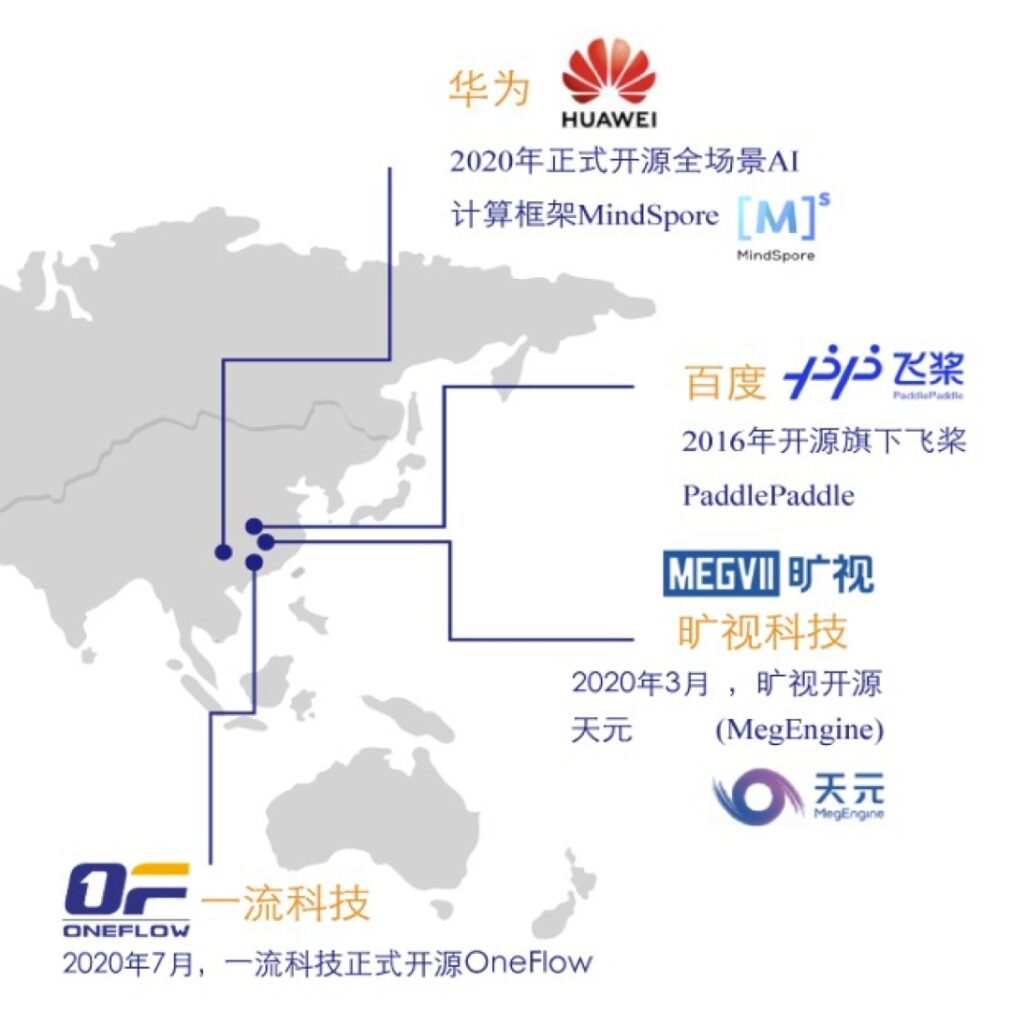

In deep learning, mainstream frameworks such as PyTorch and TensorFlow undoubtedly hold the vast majority of the market. Even a company of Baidu's size has had to invest heavily in people and resources to push PaddlePaddle into the mainstream.

In this competitive landscape, dominated by giants with vast resources, a Chinese deep learning framework startup, OneFlow, emerged.

It excels at training large-scale models, and this year it open-sourced all of its source code, along with its benchmark comparison data, on GitHub.

Inevitably, questions arise: Is a new architecture like OneFlow, built to solve large-model training problems, really necessary? Does the efficiency of a deep learning framework matter that much? Can a startup stand out from the competition?

We took the opportunity of COSCon'20, the China Open Source Annual Conference, to interview Yuan Jinhui, CEO of Yiliu Technology, and learned the story behind the more than 1,300 days and nights and hundreds of thousands of lines of code that he and Yiliu Technology's engineers have put into the project.

No matter how impressive your credentials, starting a business still means taking one step at a time

In November 2016, Yuan Jinhui wrote the first draft of OneFlow's design in an office building near Tsinghua University. He had just resigned from Microsoft Research Asia (MSRA), where he had worked for nearly four years.

"Former MSRA employee" is not the only label attached to Yuan Jinhui. After graduating from Xidian University in 2003, he was recommended for direct admission to the doctoral program of Tsinghua University's Department of Computer Science, where he studied under Professor Zhang Bo, an academician of the Chinese Academy of Sciences and one of the founders of China's AI discipline.

After receiving his doctorate from Tsinghua University in 2008, Yuan Jinhui worked at NetEase and 360 Search. The Hawkeye system he developed is used by the Chinese national team as a daily training aid. During his time at MSRA, he focused on large-scale machine learning platforms, and he and his colleagues developed LightLDA, the world's fastest topic-model training algorithm and system, which was used in Microsoft's online advertising system.

LightLDA was launched in 2014. Just two years later, Yuan Jinhui made a bold prediction: as business needs and application scenarios grew, distributed deep learning frameworks that could efficiently handle large-model training would inevitably become the core infrastructure of the data intelligence era, succeeding Hadoop and Spark.

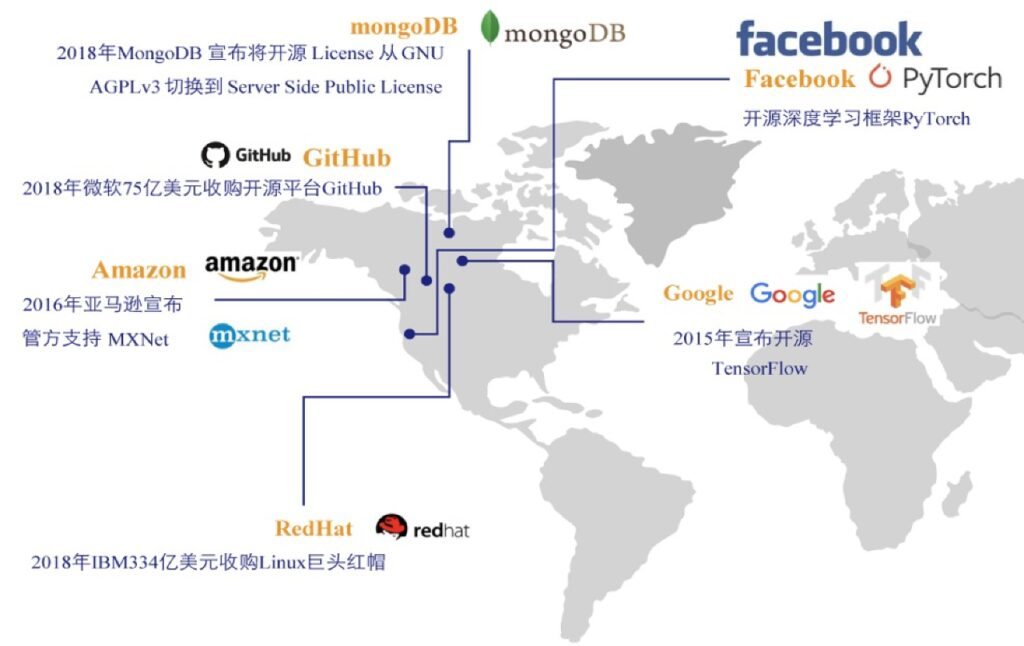

However, the mainstream deep learning frameworks of the time were all developed by major companies such as Google, Amazon, and Facebook, and the situation was similar in China. Developing a deep learning framework requires not only huge R&D investment but, more importantly, the patience to work in obscurity and to prepare for a protracted war. As a result, no startup had dared to test the waters in this field.

With existing deep learning frameworks already in full swing, would users embrace a new one from a startup? Yuan Jinhui, a man of action, not only dared to think it but also dared to act on it.

When he typed the first line of code for OneFlow, he had not yet worked out a detailed implementation strategy, let alone a polished business model. His goal was simple to state but hard to achieve: build a product that developers love to use.

A team of brilliant engineers + 21 months: the first version of OneFlow launches

In January 2017, Yuan Jinhui founded Yiliu Technology and assembled more than 30 engineers to officially begin the team effort on OneFlow. Although everyone had anticipated the difficulties, the problems that emerged as development progressed still exceeded the team's expectations.

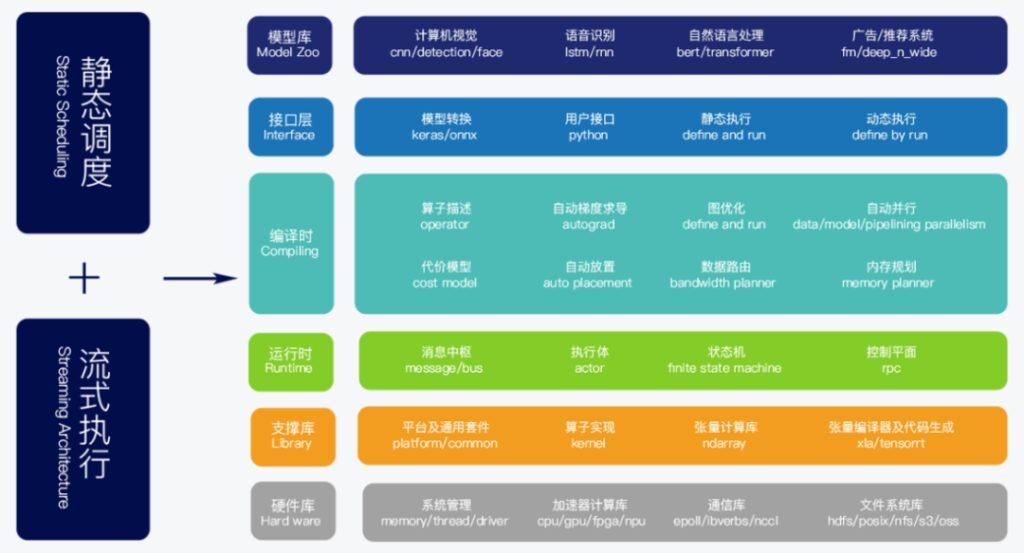

Deep learning framework technology is highly complex. Moreover, OneFlow adopts a brand-new architecture with no precedent to follow, and simply turning the technical concept into working code took almost two years.

In the fall of 2018, Yiliu Technology entered its most difficult stage. Product development was behind schedule, some employees had run out of patience and confidence, and the company's next funding round was full of twists and turns, all of which severely tested the team's morale.

Startup circles talk of an "18-month curse": after a year and a half without hope or positive feedback, a team's mindset shifts and people lose patience. Yuan Jinhui realized he could not wait any longer. OneFlow had to be put to use in real scenarios as soon as possible, so that everyone could see its innovations were genuinely valuable and a positive feedback loop could form.

In September 2018, after 21 months of research and development, Yuan Jinhui and his team launched a closed-source version of OneFlow. It had not yet been open-sourced and still had problems large and small, but the official release finally gave the team peace of mind.

Focused on large-scale training, with efficiency well ahead of comparable frameworks

In November 2018, luck smiled on Yiliu Technology. Google released BERT, then the most powerful natural language model, opening a new era in NLP. This validated Yuan Jinhui's prediction: new architectures that excel at large-scale training are not just useful but essential.

Soon afterward, Yiliu Technology's engineers implemented distributed training of BERT-Large on OneFlow. It was the only framework at the time that supported distributed BERT-Large training, with performance and processing speed far exceeding existing open-source frameworks.

OneFlow became famous overnight, giving Yiliu Technology the chance to win its first users among leading Internet companies. Surprisingly, Yuan Jinhui then chose an extremely low-key path, because he was "still not satisfied with the product".

From the closed-source release in September 2018 to the official open-source release in July 2020, Yuan Jinhui spent another 22 months polishing OneFlow. He and his team kept optimizing classic models while solving unexpected problems. Yuan Jinhui was unwilling to release OneFlow hastily while even its documentation fell short of his standards.

On July 31, 2020, OneFlow was officially open-sourced on GitHub. Known for its ability to train large-scale models, the framework stepped into the spotlight for a second time, bearing out the saying that efficiency is king.

Faster training, higher GPU utilization, a higher multi-machine speedup ratio, lower operation and maintenance costs, and an easier user experience: these five advantages let OneFlow adapt quickly to a variety of scenarios and scale out rapidly. Yuan Jinhui and his team have pushed OneFlow's performance and optimization to the limit.

OneFlow recently released v0.2.0, which adds as many as 17 new performance optimizations and greatly speeds up automatic mixed precision (AMP) training for CNN and BERT models.

The development team also set up an open-source project, DLPerf, which publishes the full experimental environment, the experimental data, and reproducible algorithms. It evaluates the throughput and speedup ratio of OneFlow and several other mainstream frameworks on the ResNet50-v1.5 and BERT-base models in the same physical environment: four machines, each with eight V100 16 GB GPUs.

The results show that OneFlow's throughput is significantly ahead of the other frameworks both on a single GPU and in multi-machine, multi-GPU settings, making it the fastest framework for training ResNet50-v1.5 and BERT-base on the mainstream flagship V100 16 GB GPU. With AMP on a single card, OneFlow trains ResNet50-v1.5 80% faster than NVIDIA's deeply optimized PyTorch and 35% faster than TensorFlow 2.3.
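DLPerf reports two kinds of numbers: throughput and speedup ratio. For readers unfamiliar with these metrics, here is a minimal Python sketch of how they are commonly computed, assuming the usual definitions: speedup ratio is multi-device throughput divided by single-device throughput, and "X% faster" means the throughput ratio minus one. The function names and the sample throughputs are hypothetical illustrations, not DLPerf code or DLPerf results.

```python
# Minimal sketch of common benchmark metrics (assumed definitions).
# Function names and sample numbers are hypothetical, not from DLPerf.


def speedup_ratio(throughput_n: float, throughput_1: float) -> float:
    """Throughput on N devices divided by throughput on one device."""
    if throughput_1 <= 0:
        raise ValueError("single-device throughput must be positive")
    return throughput_n / throughput_1


def scaling_efficiency(throughput_n: float, throughput_1: float, n_devices: int) -> float:
    """Speedup ratio divided by device count; 1.0 means perfectly linear scaling."""
    if n_devices <= 0:
        raise ValueError("n_devices must be positive")
    return speedup_ratio(throughput_n, throughput_1) / n_devices


def percent_faster(throughput_a: float, throughput_b: float) -> float:
    """How much faster A is than B, as a percentage of B's throughput."""
    if throughput_b <= 0:
        raise ValueError("reference throughput must be positive")
    return (throughput_a / throughput_b - 1.0) * 100.0


if __name__ == "__main__":
    # Hypothetical throughputs in samples/second, NOT measured results.
    one_gpu = 1000.0
    all_gpus = 30000.0  # 4 machines x 8 GPUs = 32 GPUs
    print(f"speedup ratio:      {speedup_ratio(all_gpus, one_gpu):.1f}")
    print(f"scaling efficiency: {scaling_efficiency(all_gpus, one_gpu, 32):.3f}")
    # "80% faster" corresponds to a throughput ratio of 1.8.
    print(f"percent faster:     {percent_faster(1800.0, 1000.0):.1f}%")
```

Under these definitions, a claim such as "80% faster" says one framework processes 1.8 times as many samples per second as the other on the same hardware, while the speedup ratio describes how well a single framework scales as GPUs are added.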

The full evaluation report is available at:

https://github.com/Oneflow-Inc/DLPerf

Facing doubts as a "minority" player in the race

In fact, OneFlow has faced plenty of skepticism since its inception. The most common criticism is that it "got on board late and has little room to survive." Yuan Jinhui has responded with remarkable calm.

In his view, the deep learning framework is still a new field, with both the technology and the industry in their early-to-middle stages, so there is no such thing as being early or late. Products that offer high performance, strong ease of use, and real value to users will win them over.

As for the claim that there is little room to survive, he considers it simply untrue. Open source lets products from small and large companies compete on equal terms, and excellent new frameworks challenging established ones is a core element of the open source spirit.

The doubts did not slow OneFlow down. On the contrary, Yuan Jinhui and his team accelerated its upgrades and improvements: optimizing performance, organizing developer documentation, and gathering community feedback. These persistent efforts have attracted more users to OneFlow, including some of the early "skeptics".

At the COSCon'20 China Open Source Annual Conference, Yuan Jinhui gave a talk titled "Evolution of Deep Learning Training Systems" and introduced OneFlow's upcoming development plans to developers. Beyond keeping efficiency first and continuing to optimize performance, the team is working to lower users' learning and migration costs. The cost for PyTorch users to migrate to OneFlow is already quite low, because the two have nearly identical user interfaces, and converting trained models to OneFlow is also inexpensive.

Objectively speaking, OneFlow still lags behind TensorFlow and PyTorch in completeness and ease of use. However, it offers high efficiency, good scalability, and easy distributed training, which makes it well suited to large-scale face recognition, large-scale advertising recommendation systems, and training models with huge parameter counts, such as GPT-3.

At the end of the interview, Yuan Jinhui made no secret of his need for talent. He said OneFlow is recruiting machine learning and deep learning engineers and warmly welcomes capable people to join this energetic team that is eager to win.

-- End --