I Thought Deepfakes Would Affect the US Election, but It Turns Out Netizens Were Worrying Too Much

Before the 2020 US election, people worried that fake news and fake videos might affect the results. Now that the election has produced preliminary results, it seems those concerns did not materialize.

The US election, which drew worldwide attention, finally produced preliminary results on November 7 (US local time). After four days of intense vote counting, Biden passed the threshold of 270 electoral votes, and many mainstream American media outlets declared him the winner.

Long before the election, many people predicted that Deepfake videos would play a major role in the 2020 US election and interfere with its outcome. Many social platforms, and even the US House of Representatives, had been looking for strategies to deal with Deepfakes in advance.

Now that the 2020 election is over, looking back at the whole process: did Deepfakes really affect the US election as everyone feared?

Trump loves Deepfakes

Deepfake technology seems to have been destined for abuse since its inception, especially in politics.

According to a report published in September by Creopoint, a US business intelligence company, as the US election approached, the number of fake videos posted online increased 20-fold over the past year.

The main targets of these fake videos are public figures such as celebrities and executives. Creopoint said that 60% of the fake videos found on its platform targeted politicians.

Fake videos targeting American politics generally glorify one party and vilify the other. Such videos leave the deepest impression and spread most easily, so they may ultimately sway voters' judgment of the candidates.

Trump is glorified

In May this year, Twitter user @mad_liberals released a Deepfake video in which Trump was edited into a scene from the sci-fi movie "Independence Day". Trump "became" the film's President Thomas Whitmore and, looking like a hero, delivered a speech declaring "We have to fight for our survival". The audience was also "replaced" with members of Trump's White House team.

Trump happily retweeted the fake video, which was viewed more than 10 million times that night.

Biden is vilified

Trump is also keen on retweeting Deepfake videos that vilify his rival Biden.

In April this year, Trump retweeted on Twitter a fake video of Biden sticking out his tongue, captioned "Sloppy Joe", in an attempt to damage Biden's image.

However, retweeting fake videos and images that vilify his opponents did not always work in Trump's favor. Some voters commented with disappointment: "A real president would not retweet such weird content."

In fact, people with reasonable judgment will not believe these fake videos. Still, some groups will inevitably be confused and misled, which may ultimately affect how they vote in the general election.

Media releases Deepfake video, warns netizens of serious consequences

In April 2018, American actor Jordan Peele collaborated with the American online media company BuzzFeed to create, using AI technology, a fake video of Obama giving a speech.

The video uses footage of Peele speaking as the source and transfers his movements onto Obama's image.

Obama's facial expressions and mannerisms in the video look very natural, with no visible signs of face swapping. Had Jordan Peele and BuzzFeed not revealed the truth themselves, many people would probably have been fooled.

The production team said it took them only 60 hours in total to make the video, using Adobe After Effects and the AI face-swapping tool FakeApp.

They said they made and released the video to remind everyone not to trust everything they see or hear on the Internet, especially Deepfake videos like this one.

With AI technology and a variety of free face-swapping and voice-dubbing software, the barrier to forging videos has dropped, and some technical experts fear the possible consequences.

"If anyone uses this technology to create realistic fake videos to spread false information, or if media outlets and party campaign teams use it for false propaganda, the consequences will be disastrous."

This is not an unfounded worry.

In February this year, during the Delhi Legislative Assembly election in India, Manoj Tiwari of the Bharatiya Janata Party (BJP) used Deepfake technology to "speak" a language he did not know in order to win over voters who speak other languages.

The video worked well. Most viewers could not spot any flaws, so it not only escaped suspicion at first but also drew an enthusiastic response, winning the candidate more votes.

Eventually, careful netizens began to question the video's authenticity. The tech outlet Vice later confirmed that it was indeed a Deepfake, produced by the BJP itself in collaboration with a public relations company (for details, see "Indian parliamentary election, candidates use DeepFake to forge dialect videos to canvass votes").

This is why Jean-Claude Goldenstein, CEO of Creopoint, the business intelligence company mentioned above, voiced deep concern about this US election.

He said: "There are many more fake videos than you can imagine, and what's even scarier is that the technology and tools for making these videos are developing faster than the means of detecting them." Goldenstein even said that "the presidential election should be called a fake video election."

How to counter Deepfakes: strengthen detection and legislation

Fortunately, Deepfakes not only caused no trouble in this year's US election; they did not even play a supporting role. Some experts attribute this to two main factors.

Social platforms plan ahead: targeted crackdowns

In the months leading up to the election, mainstream social media platforms took more visible steps to review and flag content and to promptly remove false material such as fake videos.

Facebook:

Last September, Facebook launched the Deepfake Detection Challenge, aiming to produce tools that governments, media outlets, businesses, and others can use to detect fake videos.

In January of this year, Facebook published a blog post saying that it would remove Deepfake videos and other misleading content ahead of the election.

Twitter:

In February, Twitter announced a new policy on synthetic and manipulated media, saying it would crack down on media that has been significantly altered or fabricated, whether through editing, cropping, or dubbing, as well as fabricated depictions of real people. Twitter would attach a warning label to such content.

For example, a Trump supporter posted a video that used Deepfake technology to make Biden look somewhat senile, writing: "Can you imagine Biden becoming the president of the United States? He can't answer a simple question, or even make a simple statement?" Twitter subsequently slapped a warning label on the post.

Scientific research institutions actively work to improve detection algorithms

To keep Deepfakes from interfering with the election, major research institutions and tech giants have also been actively developing algorithms to combat Deepfake content.

Google:

Last September, Google released an open-source dataset of 3,000 AI-generated videos produced with a variety of publicly available algorithms. Google's goal was to provide a large number of examples to help train and test automated Deepfake detection tools.

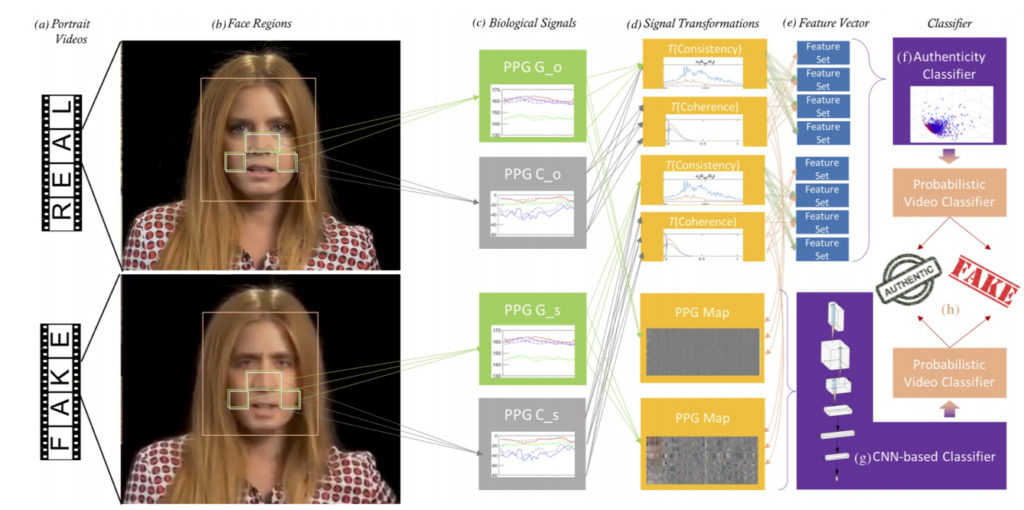

Intel & Binghamton University:

In September this year, researchers from Binghamton University and Intel developed an algorithm that they say can use the heartbeat signals in a video to detect whether it is fake, with an accuracy of up to 90%. The algorithm can also identify the specific generative model behind a Deepfake video (such as Face2Face or FaceSwap).

Microsoft:

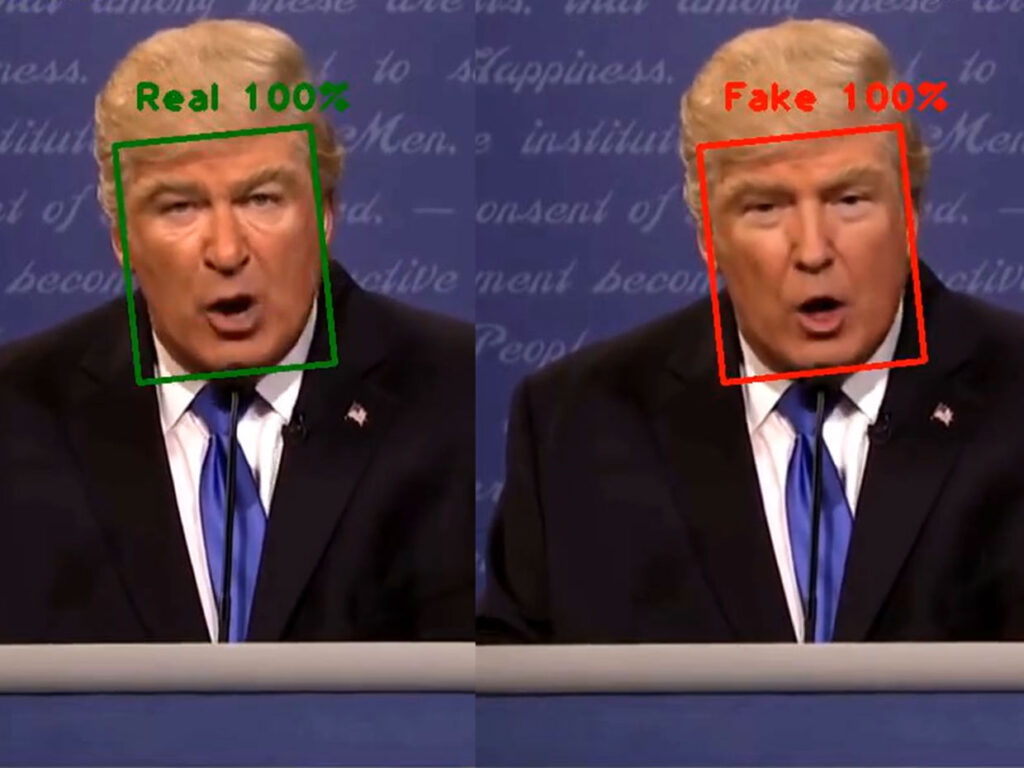

In September of this year, on the eve of the election, Microsoft released a Deepfake detection tool called "Video Authenticator", which indicates whether a video has been manipulated by displaying a confidence score, expressed as a percentage chance of manipulation.

As social media platforms become increasingly capable of identifying fake content, more such videos will be nipped in the bud.

At the same time, earlier legal gaps are gradually being closed, and holding video forgers accountable for the harm they cause will make clear where responsibility lies.

For example, China's Civil Code, adopted by vote in May this year, explicitly states that using technology to falsify someone's likeness infringes their portrait rights, a rule written into Article 1019 of the Code (see "AI face-changing, voice tampering, etc. are clearly written into the new version of the Civil Code").

Article 1019 [Negative powers of portrait rights] No organization or individual may infringe upon the portrait rights of others by vilifying or defacing their image, or by using information technology to forge it. No one may produce, use, or disclose the portrait of a portrait right holder without the holder's consent, except as otherwise provided by law.

Under the dual oversight of technology and law, those who produce and spread fake videos may pay a higher price for their actions, and using such videos to meddle in politics may not be so easy.

This answers the question raised at the beginning of the article: Deepfakes did not make any waves in this year's US election, let alone interfere with it, so netizens were worrying too much. Still, oversight should not be relaxed in the future, and we must keep guarding against technology being used for harm.

-- End --