The Final Exam Is Not Here Yet, but the Algorithm Says I Will Definitely Fail Physics

College physics is a basic and compulsory course for science and engineering students, but it is also daunting for many students because of its difficulty. Researchers have proposed using AI algorithms to predict which students are at risk of failing physics courses, so that teachers can better provide teaching guidance and adjust the allocation of educational resources.

It has to be said that the predictive capabilities of algorithms are becoming increasingly powerful, from predicting whether a couple will quarrel to predicting when earthquakes, floods, etc. will occur.

Algorithms can now even predict whether you’re going to fail your physics class.

The claim comes from a recent study posted on arXiv.org by scholars from West Virginia University and California Institute of Technology.

Their paper is titled "Using Machine Learning to Identify the Most At-Risk Students in Physics Classes."

The paper states that machine learning algorithms can be used to predict students' final grades in introductory physics courses. The predictive model works with the grades A, B, C, D, F, and W (withdrawal).

Note: The letter grades and percentage scores used by most colleges and universities in the United States correspond roughly as follows: A: 90+; B: 80+; C: 70+; D: 60+; F: fail; W: withdrawal.
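The rough cutoffs in the note can be written as a small lookup. This is only an illustrative sketch: the function name `letter_grade` and the `withdrew` flag are hypothetical, the thresholds are the approximate ones listed above, and treating anything below 60 as an F is an assumption implied by the note rather than stated in it.

```python
def letter_grade(percent, withdrew=False):
    """Map a percentage score to a letter grade using the rough cutoffs above."""
    if withdrew:
        # W records that the student left the course; it is not derived from a score.
        return "W"
    # Cutoffs are approximate; individual universities may differ.
    for cutoff, grade in ((90, "A"), (80, "B"), (70, "C"), (60, "D")):
        if percent >= cutoff:
            return grade
    return "F"  # assumption: anything below 60 counts as a fail


print(letter_grade(84))                 # B
print(letter_grade(59.5))               # F
print(letter_grade(95, withdrew=True))  # W
```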

Predictions: Sound the alarm while the grade can still be saved

Remember the panic of being dominated by university physics?

For many science and engineering students, university physics is as difficult as advanced mathematics and is one of the most daunting subjects.

One overseas study shows that, among students who once majored in science and engineering (collectively known as STEM) but eventually changed majors or failed to obtain a degree, nearly half did so because their major courses, such as physics and mathematics, were too difficult.

The attrition rate of STEM students, especially those in basic disciplines, is increasing year by year. At the same time, society's demand for them remains high, resulting in a significant talent gap.

Therefore, researchers from West Virginia University and California Institute of Technology proposed using AI algorithms to save these students.

They believe that machine learning algorithms can be used to identify students who are at risk of failing a course. In this way, teachers can provide targeted guidance based on the predicted results, thereby improving students' pass rates and understanding their mastery of the subject in a timely manner.

Algorithm: Refer to past performance to predict future results

Sample extraction

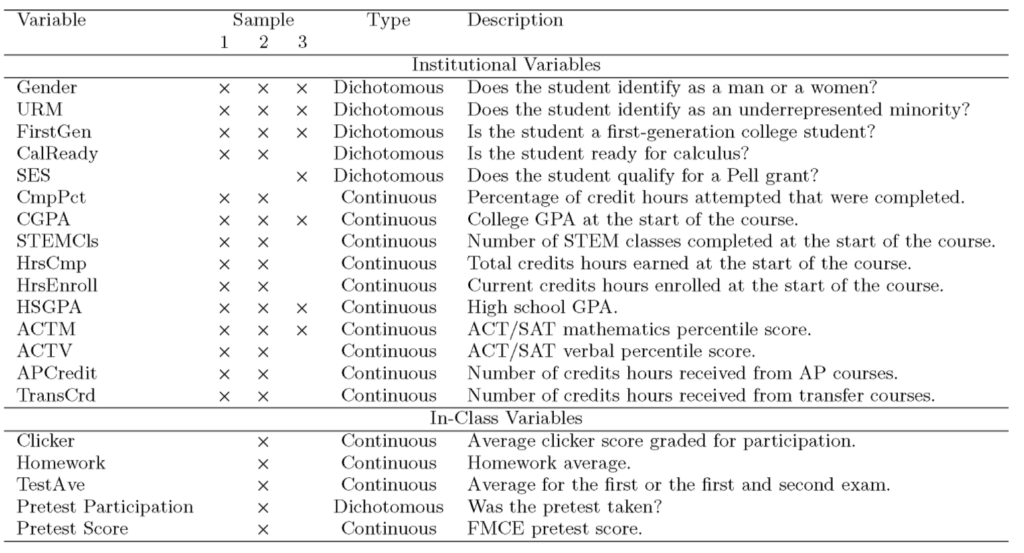

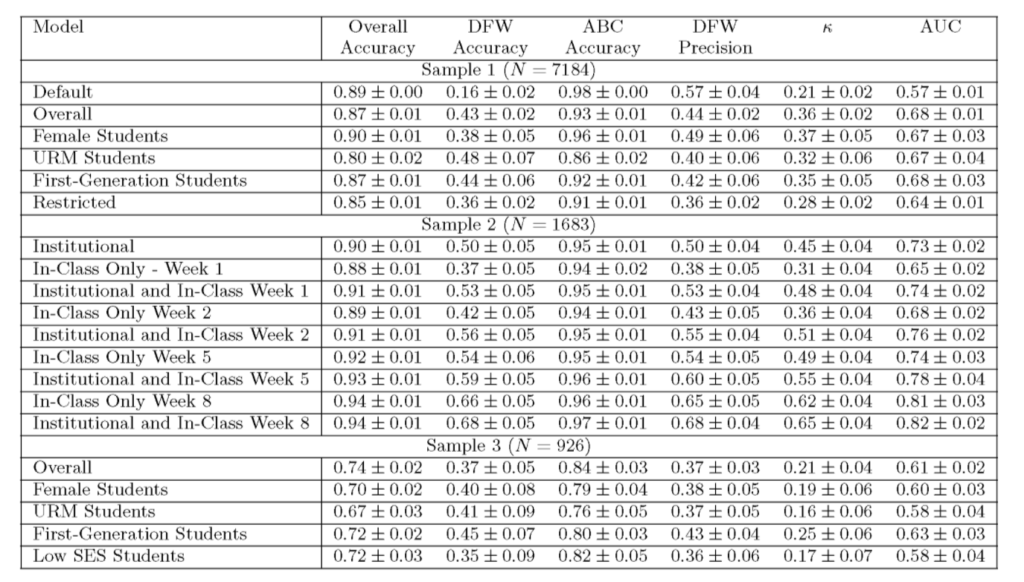

The researchers used three samples from two universities to train an AI algorithm to predict student performance.

The sample data include students' ACT scores (the ACT is a U.S. college admissions test), college GPA, and data collected in physics classes (such as homework grades and test scores).

Among them, Samples 1 and 2 were from students majoring in physical science and engineering at a university in the eastern United States.

Sample 1: All students who completed the college Physics 1 course from 2000 to 2018, with a sample size of 7,184.

Sample 2: 1,683 students from the fall semester of 2016 to the spring semester of 2019. This sample includes classroom performance data, such as the average number of answers, the average homework grade, and the semester exam scores.

Sample 3: Data collected from an introductory mechanics course throughout the 2017 academic year. This sample was collected at another university, located in the western United States.

Variables

The variables used in this study all come from within the university and the class. Some demographic information, such as gender and ethnicity, is also included.

Random Forest Algorithm Prediction

In the study, a random forest machine learning algorithm was used to predict students' final grades in an introductory physics course. The algorithm ultimately divides students into those who receive an A, B, or C (ABC students) and those who receive a D, F, or W (DFW students, who are at risk of failing).
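The two-way split described above amounts to collapsing six possible grades into a binary target. The helper below is a hypothetical illustration of that labeling step only (the name `outcome_group` is not from the paper), not the researchers' code.

```python
ABC = {"A", "B", "C"}
DFW = {"D", "F", "W"}


def outcome_group(grade):
    """Collapse a final letter grade into the binary target 'ABC' or 'DFW'."""
    grade = grade.strip().upper()
    if grade in ABC:
        return "ABC"
    if grade in DFW:
        return "DFW"
    raise ValueError(f"unexpected grade: {grade!r}")


grades = ["A", "c", "W", "F", "B"]
print([outcome_group(g) for g in grades])
# ['ABC', 'ABC', 'DFW', 'DFW', 'ABC']
```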

To gauge the algorithm's performance, they split the dataset into a training set and a test set. The training set was used to fit the classifier.

The test set was used to characterize the model's performance.

After the classification model predicts the test results for each student in the test dataset, the predictions are compared to the actual results.
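The train-then-test procedure just described can be sketched in a few lines. Everything here is an assumption made for illustration: the records are synthetic, the 30% test fraction is arbitrary, and a single GPA cutoff stands in for the random forest so the example stays dependency-free. Only the workflow (fit on the training set, predict on the test set, compare with actual outcomes) mirrors the text.

```python
import random


def train_test_split(records, test_fraction=0.3, seed=0):
    """Shuffle a copy of the records and split it into (train, test)."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def predict(cutoff, gpa):
    """Stand-in classifier: flag a student as DFW when GPA is below the cutoff."""
    return "DFW" if gpa < cutoff else "ABC"


def accuracy(cutoff, data):
    """Fraction of students whose predicted group matches the actual one."""
    return sum(predict(cutoff, gpa) == label for gpa, label in data) / len(data)


def fit_cutoff(train):
    """'Training': choose the GPA cutoff that scores best on the training set."""
    candidates = sorted({gpa for gpa, _ in train})
    return max(candidates, key=lambda cut: accuracy(cut, train))


# Synthetic (gpa, outcome) records: made-up numbers, not the study's data.
rng = random.Random(42)
records = []
for _ in range(200):
    gpa = round(rng.uniform(1.0, 4.0), 2)
    label = "DFW" if gpa + rng.gauss(0, 0.5) < 2.0 else "ABC"
    records.append((gpa, label))

train, test = train_test_split(records, test_fraction=0.3, seed=1)
cutoff = fit_cutoff(train)             # uses training data only
test_accuracy = accuracy(cutoff, test)  # compares predictions with actual results
print(f"cutoff={cutoff}, test accuracy={test_accuracy:.2f}")
```

Keeping the test set out of `fit_cutoff` is the point of the split: the reported accuracy then reflects students the model has never seen.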

Result: Awkward, 57% accuracy

After tuning and validating the model, the researchers obtained their predictions, but the accuracy is not very encouraging...

They pointed out that, across the entire sample, DFW accuracy is lower for samples with more female and minority students. This would require adjusting the model for demographics, they noted.

The algorithm trained on the first sample predicted DFW students with an accuracy of only 16%. The researchers suggested that this may be due to the low proportion of students with DFW grades in the training set (12%).
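A toy calculation shows why a small DFW share can hurt. It assumes that "DFW accuracy" means the fraction of actual DFW students the model correctly flags, and it uses a deliberately lazy hypothetical model that predicts ABC for everyone in a made-up class of 100 students with the 12% DFW share mentioned above.

```python
def overall_accuracy(actual, predicted):
    """Fraction of all students classified correctly."""
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)


def class_accuracy(actual, predicted, label):
    """Fraction of students who truly belong to `label` and are predicted as such."""
    hits = [p == label for a, p in zip(actual, predicted) if a == label]
    return sum(hits) / len(hits)


# Made-up class: 12 DFW students and 88 ABC students.
actual = ["DFW"] * 12 + ["ABC"] * 88
always_abc = ["ABC"] * len(actual)  # lazy model: never flags anyone

print(overall_accuracy(actual, always_abc))       # 0.88
print(class_accuracy(actual, always_abc, "DFW"))  # 0.0
```

The lazy model looks good overall yet identifies none of the students who need help, which is why per-class figures matter when one class is rare.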

In Sample 1, the best performance of the model was only 57%, which is only slightly better than random chance.

Low accuracy and a controversial model

Despite this result, the researchers believe that this type of machine learning classification model may be a powerful tool for educators and for students who are struggling to learn. It could better guide educational intervention and the allocation of educational resources.

Netizen: But... isn’t 57% a bit low?

However, some critics believe that technology like this could lead to biased or misleading predictions that could harm students.

Studies have consistently shown that even when trained on large corpora, artificial intelligence can still have biases when it comes to predicting complex outcomes.

Previously, Amazon's internal AI recruitment tool was disabled because it showed bias against women.

Therefore, people also worry that this kind of grade prediction algorithm will not only fail to improve the retention rate of STEM students but will also exacerbate inequality.

Of course, all the results are just predictions. As for exams, 30% is determined by fate, 70% depends on hard work, and the remaining 90% depends on the teacher's mood.

-- over--