5 Billion Views and 17 Million Participants: The Douyin Team Behind "Transformation Comics" Talks to CSDN

In recent years, photo editing, beauty, and special effects applications have been popular among users. Recently, Douyin’s latest “transformation into comics” special effect became a trending search topic. What are the key technologies behind its explosive popularity?

Editor: NeuroXiaoxi

The content is compiled from CSDN and ByteFan (link attached at the end of the article)

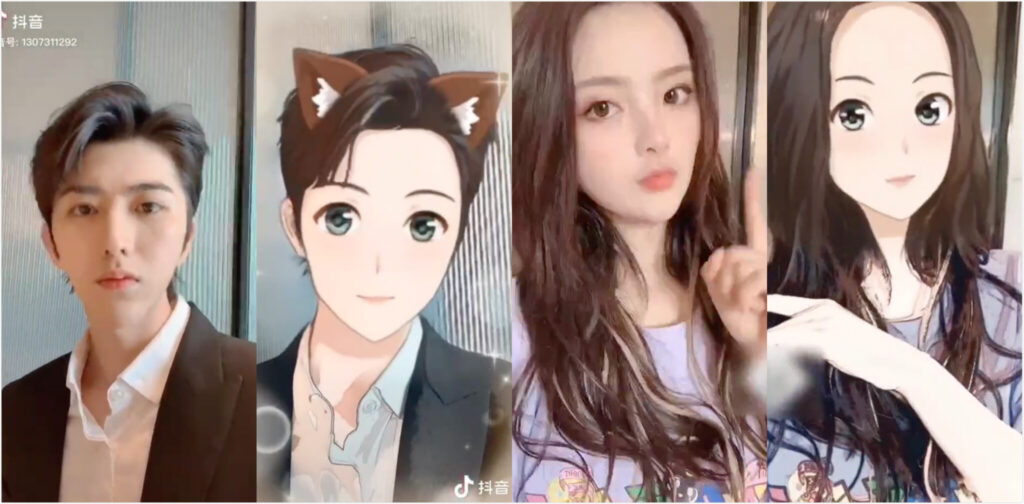

Recently, a "transformation into comics" special effect on Douyin has become popular. From passers-by to celebrities, everyone can't help but try it out.

In just one second, you can see yourself in the two-dimensional (anime) world, with big, bright eyes and fair skin, looking full of energy.

As of now, more than 17.7 million users on Douyin have created videos using the "Transformation into Comics" special effects, and this series of videos has accumulated 5.67 billion views.

Inspiration from a year ago, using GAN to break through

Although this special effect is easy to use and the transformation only takes one second, the success is actually the result of long-term research and development and polishing by the Douyin video team.

In 2018, ByteDance established a dedicated imaging team. It supports the polishing of the entire product line, including Douyin, Volcano, and Qingyan, carries out long-term exploration of stylization effects for real people, and strives to keep creating special effects that resonate with users.

The technology media outlet CSDN promptly interviewed the team, and part of that interview is quoted here:

The inspiration for the "Transformation Comics" project launched this time came from a brainstorming meeting about a year ago.

Reportedly, during a brainstorming session, the idea of "turning a real person into a cartoon face in seconds" was brought up, and it excited the team.

In September 2019, the Douyin video team quickly brought in colleagues from R&D and design to participate.

The core technology of the comic transformation effect launched by Douyin this time is still GAN, but it differs from earlier approaches: the team added new techniques on top of GAN.

In fact, before the final technology selection, the team behind Douyin's real-time comic effect compared a large number of current generative methods, including methods designed for comic generation, such as U-GAT-IT, and methods designed for other tasks, such as MUNIT.

However, the research found that the GANs currently used for tasks such as comic generation and style transfer have several problems.

First, training is unstable. Second, even a small adjustment of the hyperparameters can significantly affect the results. Third, it is easy to run into vanishing gradients.

In response, Douyin's approach was to try multiple loss functions, including those of WGAN and LSGAN. There is still no silver bullet so far, however, so gradient changes need to be monitored during experiments.
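To make the loss comparison above concrete, here is a minimal sketch of the LSGAN and WGAN objectives plus a simple gradient-norm check. It is not Douyin's code: the function names, the pure-Python list inputs, and the `low`/`high` thresholds are assumptions chosen for illustration, and a real training loop would compute these with a deep learning framework.

```python
import math


def mean(xs):
    return sum(xs) / len(xs)


def lsgan_d_loss(real_scores, fake_scores):
    """LSGAN discriminator loss: least squares toward 1 (real) and 0 (fake)."""
    real_term = mean([(s - 1.0) ** 2 for s in real_scores])
    fake_term = mean([s ** 2 for s in fake_scores])
    return 0.5 * (real_term + fake_term)


def lsgan_g_loss(fake_scores):
    """LSGAN generator loss: push scores of generated samples toward 1."""
    return 0.5 * mean([(s - 1.0) ** 2 for s in fake_scores])


def wgan_critic_loss(real_scores, fake_scores):
    """WGAN critic loss: the critic wants high scores on real, low on fake.

    WGAN additionally needs a Lipschitz constraint on the critic
    (weight clipping or a gradient penalty), which is omitted here.
    """
    return mean(fake_scores) - mean(real_scores)


def wgan_g_loss(fake_scores):
    """WGAN generator loss: raise the critic's score on generated samples."""
    return -mean(fake_scores)


def grad_norm(grads):
    """L2 norm of a flat list of gradient values."""
    return math.sqrt(sum(g * g for g in grads))


def gradient_status(grads, low=1e-6, high=1e3):
    """Flag suspicious gradient norms; the thresholds are illustrative only."""
    norm = grad_norm(grads)
    if norm < low:
        return "vanishing"
    if norm > high:
        return "exploding"
    return "ok"
```

Logging `gradient_status` for the generator and discriminator at each step is one simple way to notice when a given loss starts to starve the generator of gradient signal.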

Douyin's technical team also said that its initial attempts at comic video technology repeatedly ran into obstacles. In the early preliminary research, the output was quite different from the still-image version, and the performance did not meet the standard.

After several unsatisfactory attempts, the team began to doubt the feasibility of real-time comics.

Fortunately, when the team reviewed these failed attempts, someone pointed out the key issue: each of the previous models had a single structure, and different models had different strengths and weaknesses.

The technical team then tried a model grafting method, splicing modules from different models into new ones, which greatly improved the quality of comic generation.

After the quality standards were met, the model was pruned according to the computed importance of each layer, which finally determined the structure of the real-time model.
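The article does not say how layer importance was measured. As a rough sketch only, the example below assumes mean absolute weight as the importance score and keeps the highest-scoring layers. The layer names, the scoring rule, and `prune_layers` itself are hypothetical.

```python
def layer_importance(weights):
    """Mean absolute weight, used here as an assumed importance proxy."""
    return sum(abs(w) for w in weights) / len(weights)


def prune_layers(layers, keep):
    """Return the names of the `keep` most important layers.

    `layers` maps a layer name to a flat list of its weights.
    The original layer order is preserved in the result.
    """
    ranked = sorted(
        layers,
        key=lambda name: layer_importance(layers[name]),
        reverse=True,
    )
    kept = set(ranked[:keep])
    return [name for name in layers if name in kept]


# Hypothetical toy model with four layers.
toy_layers = {
    "conv1": [0.9, -0.8],
    "conv2": [0.01, -0.02],
    "conv3": [0.5, 0.4],
    "conv4": [0.001, 0.0],
}
remaining = prune_layers(toy_layers, keep=2)
```

In practice, a pruned model is usually fine-tuned and re-evaluated, since removing layers changes the output quality.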

The product team also participated in the model optimization, summarizing the quantitative relationship between parameters and effects, and optimizing the model by fine-tuning parameters. Finally, this hit product was born.

There are many difficulties in real-time transformation into comics. How to overcome them?

One of the most attractive things about "Transformation Comics" is its real-time transformation.

So, compared with static image processing, what is the difficulty in achieving real-time comic processing, especially on mobile phones?

The Douyin technical team said that real-time video comic processing is still quite difficult, for example:

- First, the computational cost of the model itself needs to be very low. To achieve a good comic effect within that limited budget, every operation has to count.

- Secondly, Douyin has a large number of users, and the performance of the phone models they use varies greatly, so complex, customized model delivery strategies have to be developed.

In order to meet the needs of users at different levels, Douyin has developed a complex model distribution strategy and realized customized model distribution, which ultimately ensured the successful launch of real-time comics and met the requirements of real-time comics in terms of effect and performance.
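The details of Douyin's distribution strategy are not public. The sketch below only illustrates the general idea of matching a model variant to device capability. The variant names, the 0 to 100 benchmark score, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVariant:
    name: str
    min_score: int  # lowest device benchmark score this variant targets


# Hypothetical variants, from heaviest to lightest.
VARIANTS = [
    ModelVariant("comic_large", 80),
    ModelVariant("comic_medium", 50),
    ModelVariant("comic_small", 0),
]


def select_variant(device_score, variants=VARIANTS):
    """Pick the heaviest variant whose threshold the device meets."""
    for variant in sorted(variants, key=lambda v: v.min_score, reverse=True):
        if device_score >= variant.min_score:
            return variant
    raise ValueError("no variant covers this device score")
```

A flagship phone scoring 95 would receive `comic_large` under these assumed thresholds, while a low-end phone scoring 10 would fall back to `comic_small`.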

In addition, the Douyin comic effect uses ByteNN, ByteDance’s self-developed inference engine. This engine, designed for rapid deployment of on-device algorithms, not only supports general-purpose computing on the CPU and GPU but also takes full advantage of the acceleration offered by vendors' NPU/DSP hardware, ensuring that real-time comics can stably support Douyin's massive user base.

Of course, the current algorithm for this real-time comic effect still has room for optimization in some special scenarios. In subsequent iterations, we will work on both the model itself and the inference engine, improving the model's output while optimizing inference performance.

ByteDance Imaging Team: Comic filters must be both realistic and beautiful

In recent years, special effects that transform images into cartoon and hand-painted styles have emerged one after another. How to stand out and become a hit was the difficult problem the team faced.

According to Da Peng from ByteDance's imaging team, the most important thing is to bring surprise and resonance to users.

On the one hand, the "Transformation Comics" effects team achieved both "likeness" and "beauty". On the other hand, through continuous technical improvement, it achieved real-time transformation that gives every user a distinct result ("a thousand faces for a thousand people").

Yu Chen from the project team said, "We defined two major characteristics: 'exquisite beauty' and 'extreme likeness'. We must fully retain the user's characteristics and make the image look like the real person, while also generating the unique artistic beauty of comics."

In addition, the team combined the strengths of character designs in Japanese, Chinese, and Korean comics to design the final comic style, and its aesthetics have been widely recognized.

In terms of gameplay, the team finally selected six creative props, with transformation triggers such as a hand swipe or a nod, to improve the interactive experience and cater to users of different ages, levels, and preferences.

GAN: An important magic weapon in the field of image generation

Let’s talk about the basic technology of this hit product - GAN (Generative Adversarial Networks).

In recent years, research based on GAN has been in full swing. Behind every image generation and conversion research result that goes viral, GAN technology is almost indispensable.

In 2014, Ian Goodfellow and his team published a paper titled "Generative Adversarial Networks" in which they pioneered a deep learning model called GAN.

The main structure of the GAN model includes the generator G (Generator) and the discriminator D (Discriminator). The two are trained against each other in an adversarial game.

During training, the goal of the generator G is to generate images that are as realistic as possible in order to deceive the discriminator D, while the goal of D is to distinguish the images generated by G from real images as well as it can. In this way, G and D constitute a dynamic "game process".

The final result of the game? Under ideal conditions, G can generate images realistic enough to pass for real ones.

In layman's terms, G is like an art forger who tries every possible way to fool the discriminator D and ultimately produces a work that is hard to tell apart from the genuine article.
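The game between G and D described above is usually written as the minimax objective from the 2014 paper, where x is a real image drawn from the data distribution, z is a noise vector fed to the generator, and D(·) is the discriminator's estimated probability that its input is real:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

D tries to maximize V while G tries to minimize it. In the paper's idealized analysis, the game reaches equilibrium when the generated distribution matches the real data distribution, at which point D outputs 1/2 for every input.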

In recent years, GAN has been widely used, for example:

- Generating anime characters

- Image conversion using CycleGAN

- Generating fake portraits with StyleGAN, proposed by NVIDIA in 2018

Sources:

https://mp.weixin.qq.com/s/lLfp8F6G2uHxYpCMCF1Tmw

https://mp.weixin.qq.com/s/WeZD__I7Y98Fg18pEZ9L9g
