
Duke University Proposes an AI Algorithm That Turns Poor-Quality Mosaics into High-Definition Images in Seconds

What would it look like to turn a pixelated "mosaic" headshot into a high-definition photo? The AI algorithm proposed by Duke University does more than "remove the mosaic": it renders details down to individual wrinkles and strands of hair. Want to try it?

In this era of pursuing high-definition image quality, our tolerance for poor image quality is getting lower and lower.

If you search for "low resolution" and "poor image quality" on Zhihu, you will see a large number of questions such as "How to convert low-resolution photos into high-resolution photos", "How to repair photos with low clarity", and "How to save poor image quality".

So, what would it look like to turn a pixelated picture into a high-definition one in seconds? Researchers at Duke University offer an answer with AI.

The project is now available on GitHub: https://github.com/adamian98/pulse

Unprecedented: a "mosaic" becomes high-definition instantly

Researchers at Duke University have come up with an AI algorithm called PULSE (Photo Upsampling via Latent Space Exploration).

The algorithm can transform blurry, unrecognizable images of faces into computer-generated images with finer and more realistic details than ever before.

With previous methods, a blurry headshot could be enlarged to at most eight times its original resolution.

The Duke University team, however, has come up with a new approach. In just a few seconds, it can enlarge a 16×16-pixel low-resolution (LR) image by a factor of 64 into a 1024×1024-pixel high-resolution (HR) image.
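The "64 times" figure refers to each side of the image. A quick check of the arithmetic, using only the two sizes stated above, shows how much the total pixel count grows:

```python
# Upscaling arithmetic for the 16x16 -> 1024x1024 case described above.
lr_side = 16      # low-resolution width/height in pixels
hr_side = 1024    # high-resolution width/height in pixels

side_factor = hr_side // lr_side                            # magnification along each axis
pixel_factor = (hr_side * hr_side) // (lr_side * lr_side)   # growth in total pixel count

print(f"per-side factor: {side_factor}x")      # per-side factor: 64x
print(f"pixel-count factor: {pixel_factor}x")  # pixel-count factor: 4096x
```

In other words, every LR pixel has to be expanded into a 64×64 block of 4,096 HR pixels, which is why almost all of the output detail must be inferred rather than recovered.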

Their AI tool "imagines" features that don't exist: even details that cannot be seen in the original LR photo, such as pores, fine lines, eyelashes, hair, and stubble, appear clearly after processing by the algorithm.

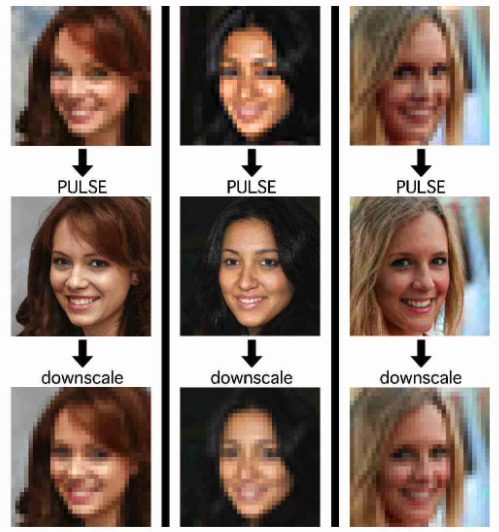

Let's look at a specific example:

“Never before has it been possible to create super-resolution images with such a large amount of detail using so few pixels,” said Cynthia Rudin, a computer scientist at Duke University who led the team.

As for practical applications, Sachit Menon, a co-author of the paper, said: "In these studies, we only used faces as a proof of concept. But in theory, the technology is general and could improve everything from medicine and microscopy to astronomy and satellite imagery."

Breaking with tradition to achieve the best results

Although many methods for turning low-definition images into high-definition ones already exist, this is the first to achieve 64× magnification.

Traditional method: pixel matching, prone to errors

To tackle this problem, traditional methods generally take the LR image, "guess" what additional pixels are needed, and then try to make those pixels match, on average, the corresponding pixels in HR images the model has seen before.

Because the pixels are only matched on average, textured areas such as hair and skin, which rarely line up perfectly from one pixel to the next, end up mismatched.

Moreover, this approach ignores perceptual details in HR images, such as light sensitivity, so the results suffer from over-smoothing and lighting problems and still look blurry or unrealistic.
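A toy example shows why matching pixels "on average" blurs texture. It assumes, hypothetically, two equally plausible 1-D high-resolution strips: a stripe pattern and the same pattern shifted by one pixel. Both downscale to the same low-resolution values, so a method that minimizes expected pixel-wise squared error is pushed toward their mean, which has no stripes at all. This is a simplified illustration, not the exact loss of any particular prior method.

```python
def downscale(hr, factor):
    """Average-pool a 1-D signal by `factor` (a simple stand-in for image downscaling)."""
    return [sum(hr[i:i + factor]) / factor for i in range(0, len(hr), factor)]


def mse(a, b):
    """Mean squared pixel-wise error between two equal-length signals."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Two hypothetical HR "textures": stripes, and the same stripes shifted by one pixel.
stripes = [0.0, 1.0] * 4   # 0 1 0 1 0 1 0 1
shifted = [1.0, 0.0] * 4   # 1 0 1 0 1 0 1 0

# Both downscale (by 2) to exactly the same LR input, so the LR image cannot tell them apart.
assert downscale(stripes, 2) == downscale(shifted, 2)


def expected_error(guess):
    """Average squared error of a guess when each candidate is equally likely to be the truth."""
    return (mse(guess, stripes) + mse(guess, shifted)) / 2


# The pixel-wise mean of the candidates has lower expected squared error than either texture...
mean_guess = [(a + b) / 2 for a, b in zip(stripes, shifted)]
print(expected_error(mean_guess), expected_error(stripes))  # 0.25 0.5
# ...but it is a flat gray signal: the stripe texture has vanished.
print(set(mean_guess))  # {0.5}
```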

New method: "connect the dots" with low-resolution images

The method proposed by the Duke University team takes a fundamentally different approach.

Given an LR image, PULSE does not add new details bit by bit. Instead, it searches through AI-generated HR images, compares the LR version of each one (the HR image downscaled) with the original input, and finds the closest match.

By analogy, it plays a "connect the dots" game with the LR image: it finds the most similar LR version and then works backwards. The HR image corresponding to that LR version is the final output.
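The search idea can be sketched in a few lines under stated assumptions. `toy_generator` is a hypothetical stand-in for a trained GAN generator, and plain random search over latent codes replaces the optimizer the real system uses (the PULSE paper describes a pretrained StyleGAN generator with gradient-based search over the latent space). What the sketch keeps is the key step: score each generated HR candidate by how closely its downscaled version matches the given LR input, and return the best HR candidate.

```python
import random


def downscale(hr, factor):
    """Average-pool a 1-D 'image' by `factor` (a stand-in for real image downscaling)."""
    return [sum(hr[i:i + factor]) / factor for i in range(0, len(hr), factor)]


def toy_generator(z, size):
    """Hypothetical generator: turns a 2-number latent code into a striped HR signal.

    z[0] sets the brightness and z[1] the stripe contrast, so every output is a
    crisp pattern rather than a blurry average.
    """
    return [z[0] + (z[1] if i % 2 else -z[1]) for i in range(size)]


def pulse_style_search(lr, factor, n_candidates=5000, seed=0):
    """Return the generated HR candidate whose downscaled version best matches `lr`.

    Random search over latent codes stands in for a gradient-based latent search.
    """
    rng = random.Random(seed)
    best_hr, best_err = None, float("inf")
    for _ in range(n_candidates):
        z = (rng.uniform(0.0, 1.0), rng.uniform(0.0, 0.5))
        hr = toy_generator(z, size=len(lr) * factor)
        # Compare in LR space: downscale the candidate and measure squared error.
        err = sum((a - b) ** 2 for a, b in zip(downscale(hr, factor), lr))
        if err < best_err:
            best_hr, best_err = hr, err
    return best_hr, best_err


lr_input = [0.5, 0.5, 0.5, 0.5]
hr_output, error = pulse_style_search(lr_input, factor=2)
print(downscale(hr_output, 2), error)
```

Because the LR input here fixes only the average brightness of each pixel pair, many candidates fit almost equally well; the search returns one of them, and it keeps crisp stripes because the generator only ever produces striped outputs. That mirrors the article's point: the output is a realistic image consistent with the input, not a blurry average.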

The team used a generative adversarial network (GAN), which consists of two neural networks, a generator and a discriminator, trained on the same dataset of photos.

The generator imitates the faces it was trained on to produce an AI-created face, while the discriminator takes that output and decides whether it is convincing enough to be mistaken for a real photo.

With experience, the generator gets better and better, until the discriminator can no longer tell the difference.
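For reference, the adversarial game described above is usually written as the standard GAN objective (from the original GAN formulation, not something specific to PULSE). Here D(x) is the discriminator's estimated probability that x is a real photo, and G(z) is the image the generator produces from random noise z:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator increases V by scoring real photos near 1 and generated ones near 0; the generator decreases V by producing images the discriminator scores as real. At the theoretical equilibrium, D outputs 1/2 for every input, which corresponds to the point where the discriminator can no longer tell the difference.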

They ran experiments on some real images; the comparison is shown in the figure below:

Although the generated high-resolution images still differ somewhat from the originals, they are much sharper than those produced by previous methods.

Evaluation: Outperforms other methods, scores close to real photos

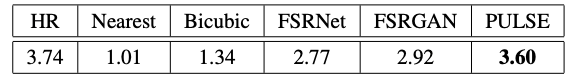

The team evaluated its algorithm on CelebA-HQ, a well-known high-resolution face dataset, running experiments at scaling factors of 64×, 32×, and 8×.

The researchers asked 40 people to rate 1,440 images generated by PULSE and five other scaling methods on a scale of 1 to 5. PULSE performed best, scoring almost as high as real high-quality photos.

In the comparison, "HR" denotes the real high-definition portraits from the dataset; their score is only 0.14 higher than PULSE's.
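Scores like these are typically mean opinion scores (MOS): each rater gives an image an integer score from 1 to 5, and a method's score is the average of all its ratings. A minimal sketch of that calculation follows; the function name and the rating lists are hypothetical, for illustration only, and are not the study's data.

```python
from statistics import mean


def mean_opinion_score(ratings):
    """Average a list of 1-5 ratings, rejecting empty input and out-of-range values."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    return mean(ratings)


# Hypothetical ratings, for illustration only (not the study's data).
real_photo_ratings = [4, 4, 3, 5]
method_ratings = [4, 3, 3, 5]
gap = mean_opinion_score(real_photo_ratings) - mean_opinion_score(method_ratings)
print(f"MOS gap: {gap:.2f}")  # MOS gap: 0.25
```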

Team members said that PULSE can create realistic images from noisy, low-quality inputs, even when features such as the eyes and mouth are unrecognizable in the original image, something other methods cannot do.

However, the researchers stressed that the system cannot be used for identity recognition: "It cannot turn an out-of-focus, unrecognizable photo from a security camera into a clear image of a real person. It just generates new faces that don't exist but look real."

As for specific applications, beyond fields such as medicine and astronomy mentioned above, the technology could also benefit the general public: with it, old photos taken many years ago could be turned into high-definition ones. For editors, it would be a great blessing, as they would no longer have to struggle to find high-definition pictures.

Note: the researchers will also present their method at the ongoing CVPR 2020 (Conference on Computer Vision and Pattern Recognition), so keep an eye out for it:

http://cvpr2020.thecvf.com/program/tutorials

Paper:

https://arxiv.org/pdf/2003.03808.pdf

References:

https://www.sciencedaily.com/releases/2020/06/200612111409.htm
