American Podcast "Exponential View" Interviews Fei-Fei Li: The Pandemic, AI Ethics, and Talent Training

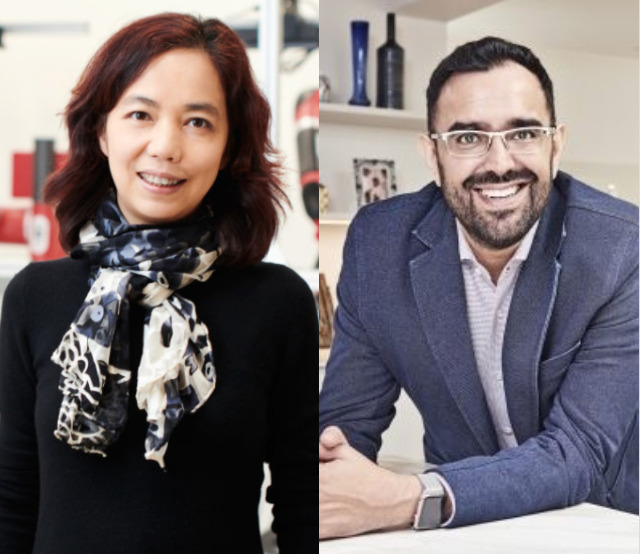

Fei-Fei Li recently appeared as a guest on the podcast "Exponential View", produced by Harvard Business Review, where she was interviewed by technology media figure Azeem Azhar. She introduced Stanford HAI's recent research in medical AI and discussed the privacy and ethical issues of artificial intelligence.

Fei-Fei Li, a professor of computer science at Stanford University, a member of the U.S. National Academy of Engineering, and a recognized goddess in the industry, recently appeared on the "Exponential View" podcast.

Exponential View is a technology-themed podcast produced jointly by Azeem Azhar and Harvard Business Review.

Estonian President Kersti Kaljulaid, who has been vigorously promoting blockchain technology in the country, Microsoft President Brad Smith, Nasdaq CEO Adena Friedman and others are all recent guests of the podcast.

Fei-Fei Li and host Azeem Azhar had an in-depth discussion of AI technology and its applications, from the vision of AI to the current focus on AI in healthcare, to the privacy and ethical issues facing AI technology.

The 30-minute conversation was filled with valuable information. Let's take a look at what the AI goddess has been thinking about recently and how she views the hot issues of artificial intelligence technology.

The following is the short (TL;DR) version:

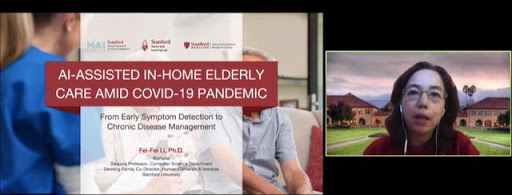

1. AI technology can help older people live more independently and healthily, and detect early signs of COVID-19 infection

The use of AI-driven smart sensor technology may be effective in reducing infection and mortality rates of COVID-19 among the elderly.

Non-contact sensors such as depth sensors and thermal sensors can measure body temperature, monitor changes in the eating, toileting, and sleeping patterns of the elderly, and support early detection of loneliness, dementia, and similar conditions.

2. When developing technology, we must also respect and protect privacy

Every step of technological development, especially for human-centered technology, needs to consider privacy, respect, and dignity. Even if this makes the technology harder to build, these human factors cannot be ignored.

3. Before students learn code and algorithms, let them integrate into life first

In the early days of AI technology development, technical experts did not consider the impact that the rapid development of technology would have on today's human society.

Based on historical experience, Fei-Fei Li believes that technology and humanities should be more closely linked in the future. For example, the curriculum design of Stanford HAI integrates both science and humanities, including courses on pure technology content, as well as AI principles, algorithm politics, ethics, etc.

4. Machines’ values reflect human values, so humans have moral responsibilities

The values of machines are a reflection of human values. The development and application of technology depends on humans, so humans have moral responsibilities. Technologists should look at issues from the perspective of all stakeholders, not just their own perspective, to eliminate bias in technology.

5. The birth of ImageNet: from the right method and path

ImageNet has had a huge impact not only on computer vision, but also on the entire field of artificial intelligence. Fei-Fei Li said that the birth of ImageNet was due to the team's correct definition of methods and key paths based on existing research at the time.

6. The arrival of AGI (strong artificial intelligence) is natural and will promote mutual development with humans

Strong artificial intelligence has been in demand since the birth of AI, so its arrival is inevitable. In order to prevent AGI from being limited by the boundaries of human intelligence, scientists should be allowed to make bolder attempts, constantly innovate and break through their own limits, and promote and inspire each other with AGI to achieve two-way development.

We have translated and organized the content of this podcast. The following is the full version.

Fei-Fei Li: The pandemic has allowed me to use AI to help and protect the elderly

Azeem Azhar: Hi, my name is Azeem Azhar, and you are listening to the Exponential View podcast.

Dr. Fei-Fei Li is a well-known researcher in the field of artificial intelligence. She is currently the first Sequoia Chair Professor of Computer Science at Stanford University and co-director of the Stanford Institute for Human-Centered Artificial Intelligence (HAI). She is best known for initiating the ImageNet project.

I believe that ImageNet is one of the catalysts and driving forces behind the boom in investment, research and application of artificial intelligence over the past eight years.

Earlier this year, she was elected to the National Academy of Engineering, one of the highest professional honors in her field. Fei-Fei, it's great to have you here today, thank you for taking the time.

Fei-Fei Li: Thank you, it’s so exciting. I really like your show too.

Azeem Azhar: I'm in London, where are you? We can't go out now because of the quarantine.

Fei-Fei Li: I'm at Stanford University. Yes, we are all "locked down" and I really miss working with young people every day.

Azeem Azhar: We know that your research lab is all about machine learning and artificial intelligence. Some of these skills seem very relevant to addressing COVID-19 and some of the impacts it has. How is your lab involved in this?

Fei-Fei Li: In fact, as early as eight years ago, we realized that computer vision and smart sensors and devices had entered a stage where we could start solving some real-world problems, especially health care issues that I am very interested in.

Therefore, we've been conducting research on contactless sensors, trying to understand human behavior as it relates to clinical outcomes.

One of the main areas that has caught our attention is the ageing of the world's population: how can we help older people live more independently and healthier lives? So our research is relevant to both clinical and family support.

Specifically, it includes temperature measurement, changes in eating patterns, toileting patterns, sleeping patterns, early detection of loneliness, dementia, etc.

So when the COVID-19 outbreak hit, I paid attention to the issue of elderly care very early on. Because I have two elderly parents, I was also worried about them.

From the data, we were horrified to see that people who live alone are more vulnerable and have higher mortality. Not only are they more vulnerable from an immune-system perspective, but many of them also have underlying health conditions that worsen because they cannot get to a clinic to see a doctor.

We wondered if we could accelerate this technology and bring it to the homes and communities of the elderly, so that we could help detect COVID-19 early through signals such as changes in body temperature and other signs of infection.

Azeem Azhar: So can you tell us what kind of sensors you are working on? What kind of data is needed for this work?

Fei-Fei Li: There are two types of sensors that we are currently using and piloting.

One is a depth sensor. For example, when we play Xbox video games we use this kind of sensor, which can obtain distance information without seeing the player.

The other is a thermal sensor, which looks at temperature changes or behavior. Whether you sit on the couch for a long time without moving, how often you eat and drink, or when you go to sleep, this sensor will be able to monitor that.

The trade-off between technological development and privacy issues

Azeem Azhar: I've seen similar projects, for example, everyone likes HD cameras, whose high resolution allows people to see subtle facial expressions.

But with this high-definition technology comes the possibility that it can be invasive and can be misused. What parameters do you look for in your sensor research?

Fei-Fei Li: Any sensor we study will deal with privacy issues and respect for humans, and we are working with ethicists and legal scholars to study privacy issues.

Because of privacy concerns, we use depth sensors, which lose the high-fidelity, high-resolution pixel color data. So, how do we make up for this problem?

Our lab is conducting computer vision research that can understand the details of human posture without RGB high-definition camera data.

Azeem Azhar: Is there a tradeoff between the fidelity and quality of the data you can get and how intrusive it is for privacy? Is that a necessary tradeoff? Is it a fundamental, almost axiomatic tradeoff?

Fei-Fei Li: That’s a very good question. I think it’s always a consideration.

In every step of our technological development, especially for human-centered technology, privacy, respect, and dignity shouldn't be an afterthought. So, from that perspective, we are going to compromise. If we can't use certain information, that brings greater challenges, and also more opportunities, to the technology.

Fei-Fei Li: My students need to understand both technology and context

Azeem Azhar: You are currently at the Stanford Institute for Human-Centered Artificial Intelligence (HAI). Can you tell us how it is different from your previous team or other more traditional AI institutions as an AI research institute?

Fei-Fei Li: What excites me here is that from the very beginning, we have had an ambition in our genes: to make our institute (HAI) a truly interdisciplinary research and education institution.

Our co-founders come from various fields such as computer science, philosophy, economics, law, ethics, medicine, etc. 20 years ago, when I was a doctoral student, I would never have dreamed that my own curiosity would become a force for change in the humanities and society in the future.

So, when I realized that, I personally felt a huge sense of responsibility.

Azeem Azhar: How would you explain some of the consequences of the disconnect that has always existed between science and engineering labs, especially in computer science, and the humanities?

Fei-Fei Li: This is also a process of my personal growth. I remember in 2000, when I was a first-year doctoral student at Caltech, the first research paper I read was a groundbreaking paper on face detection.

My advisor said that this paper is a great machine learning paper. It shows that real-time face detection can be performed using very slow CPU chips.

Looking back now, when my mentors, my classmates, and I read that paper, none of us ever considered how it related to human privacy.

This shows that privacy was not taken into consideration in the early stages of the development of these technologies. But I didn't think about it in the past. Is it my problem? Probably not.

We never dreamed how big the impact of this technology would be, but today we are seeing the consequences.

Azeem Azhar: Yes, this is a very important observation. It reminds me of a famous speech by C. P. Snow (British scientist and novelist) in 1959 called "The Two Cultures".

He talked about how the average scholar knew Shakespeare but knew nothing about the second law of thermodynamics, while the scholar who knew the second law of thermodynamics knew nothing about Shakespeare. Without common knowledge, we cannot think intelligently.

Today, you seem to be building a bridge between these two cultures.

Fei-Fei Li: To me, this is a double helix. We believe that the next generation of students should be "bilingual", learning both technology and humanities.

One thing that has struck me over the past few years in Silicon Valley is that young technologists have told me that they are not being educated on this.

When they hear in the news about these impacts on humanity, or even see them in products made by their own companies, they are so horrified that they don't even know how to think about what their role is and how to make the world a better place.

Azeem Azhar: So my experience is: try not to think of students as researchers, but as product managers, developers, and entrepreneurs.

I would like to mention some of the courses at HAI. Some are really scientific, for example knowledge graphs, theoretical neuroscience, and machine learning and causal reasoning. On the other hand, there are the politics of algorithms, ethics, public policy, technological change, digital civil society, and designing artificial intelligence to cultivate human well-being.

So, I'm curious, what innovative ways do you have to combine these two very unrelated disciplinary areas?

Fei-Fei Li: Most of my students are master's and doctoral students from computer science backgrounds. When they join our AI healthcare team, there is only one basic requirement: before discussing code and algorithms, they must first immerse themselves in the daily lives of medical staff.

They need to enter the ICU, wards, operating rooms and even medical staff/patients' homes to understand these people's lifestyles and have face-to-face contact with their families.

So this is just one small example, but HAI is doing this in all areas.

Is technology neutral?

Azeem Azhar: I hear two arguments. Some people say that technology is ethically neutral, like a planet orbiting a star.

But others say that technology is in some sense path-dependent, evolving within particular structures and contexts, with their biases, privileges, and perspectives. Therefore, technology is never neutral.

Which of these two views do you think is more correct?

Fei-Fei Li: So stars are not made by humans, but technology is.

So I do believe that there is a saying: there is no independent machine value. The value of a machine is the value of a human being. Scientific laws have their own logic and beauty, without human bias. However, the invention and application of technology are very dependent on people, and we all have this moral responsibility.

Azeem Azhar: For example, when you founded this institute, you had a background from Stanford and you yourself are a multicultural person. So how do you view Stanford and other backgrounds?

Fei-Fei Li: I would say it’s a sense of responsibility. From the early days of Stanford University leadership, we recognized the importance of a sense of responsibility.

That's why HAI has so much support. Because we realize that our role is not just to innovate in technology, but to use technology to bring prosperity to human society, including art, music, humanities, as well as social sciences, medicine, education, etc.

ImageNet: Revolutionizing Image Recognition

Azeem Azhar: Let's talk about your project ImageNet. I think it is very critical to the current wave of investment and application of artificial intelligence. ImageNet reminds people how important data is to the development of AI. Did you ever imagine it would have this kind of impact when you started it in 2006?

Fei-Fei Li: I feel more excited about the process of completing the ImageNet project. Like most other scientists, I have a pursuit and curiosity for knowledge, not just focusing on how much impact our ideas will have.

Azeem Azhar: It's amazing. Essentially, you do a lot of heavy lifting to classify images to create a clean dataset that people can apply their algorithms to.

In the years before 2012, approximately $300 million was invested in artificial intelligence startups each year, and ImageNet had a huge impact on the artificial intelligence entrepreneurial environment.

Fei-Fei Li: First of all, I am humbled to receive these awards and thank you for attributing the credit to ImageNet. I think history and time will ultimately judge our contributions, but we are indeed very proud of this work.

Azeem Azhar: Looking back at the development of image recognition technology in 2011 and 2012, it was far from being as advanced as today's image recognition. As a scientist in this field, how do you interpret the changes over the years?

Fei-Fei Li: ImageNet was born out of the desire to revolutionize image recognition. The ideas we proposed at the time were not much different from many scientific discoveries. We very much hoped to establish a North Star that could truly promote visual intelligence research, allowing us to define the problem of large-scale object classification and then solve it.

We did succeed in finding this solution, but of course we stood on the shoulders of giants; it did not come out of thin air. It built on the research in cognitive neuroscience and computer vision over the previous 30 years.

General artificial intelligence (AGI) in the eyes of Fei-Fei Li

Azeem Azhar: General artificial intelligence, or strong artificial intelligence, has been mentioned a lot recently by the public and the media. What does AGI mean to you?

Fei-Fei Li: When I first read "Can Machines Think?" by Turing, the founder of artificial intelligence, I saw that the concept of AGI (strong artificial intelligence) has been sought after since the very beginning of artificial intelligence. So I think the birth of strong artificial intelligence is natural.

Azeem Azhar: I'm curious about that. When we think about AGI (strong artificial intelligence), will we fall into the trap of anthropocentrism? For example, the first is that we realize artificial intelligence through engineering methods; the second is that we push machines to understand our rules, which leads to the boundary of machine intelligence, which is actually the boundary of human intelligence.

Fei-Fei Li: So, I think scientists should be allowed to make bolder attempts. When Newton was observing the stars, humans had not yet used electricity, but we should respect all the efforts made in the historical process.

What excites me even more from the perspective of human intelligence is that our work integrates artificial intelligence, brain science, and cognitive science. So one of the three principles of HAI at Stanford University is: intelligence inspired by humans. These developments are also two-way. We advance our understanding of human cognition and the human brain, and learn more from them to improve our development of artificial intelligence.

Azeem Azhar: Algorithms can do a lot more than humans, but it’s still early days. When you look out 15, 20, 30 years from now, where do you think our AI systems’ decision-making capabilities will be?

Fei-Fei Li: First of all, curiosity about science is the driving force behind our continued push to create innovative intelligent machines. If we want to make machine intelligence closer to human intelligence, we, as humans, have humanity and can help machines interact better with humans. If machines can understand humans, they can think like humans.

But again, this development cannot be without boundaries. The way humans have always innovated is to break through their own limits. For example, we are not as fast as cars or horse-drawn carriages, but we are able to create these tools to expand and enhance our capabilities, or even exceed our capabilities.

Sometimes innovation is not just about breakthroughs, it is about replicating human-like capabilities and solving problems by replacing human labor. But no matter which aspect it is, as we advance this technology, there must be boundaries.

Azeem Azhar: Thank you, Dr. Fei-Fei Li, for taking the time to be on our show.

-- over --