Facebook Claims to Have Defeated Google and Launched the Most Powerful Chatbot

Facebook recently open-sourced its new chatbot Blender, which performs better than existing conversational robots and is more personalized.

On April 29, Facebook AI Research (FAIR), Facebook's AI and machine learning division, published a blog post announcing that, after years of research, it has built and open-sourced a new chatbot called Blender.

Blender combines multiple conversational skills, including personality, knowledge, and empathy, to make AI more human.

Beats Google's Meena and sounds more human

FAIR claims that Blender is the largest open-domain chatbot to date (open-domain chatbots are also called small-talk bots) and that it outperforms existing approaches to generating conversation.

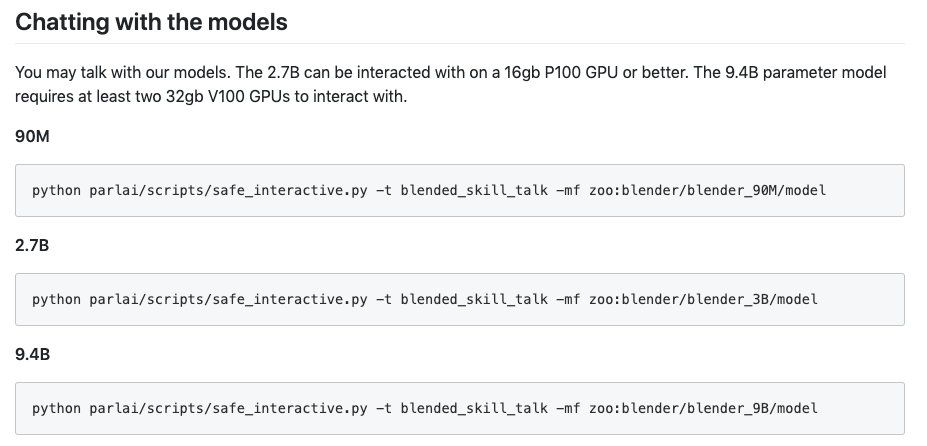

Pre-trained and fine-tuned Blender models are available on GitHub. The models contain up to 9.4 billion parameters, 3.6 times as many as Google's conversational model Meena.

Project page: https://parl.ai/projects/blender/

When Google launched Meena in January, it called it the best chatbot in the world.

But in Facebook's own tests, 75% of human evaluators found Blender more engaging than Meena, and 67% thought Blender sounded more human. Another 49% of evaluators initially could not tell the chatbot from a real person.

Unlike regular chatbots, Blender can talk about anything in an interesting way. It could help virtual assistants overcome many of their shortcomings, and it promises to let conversational AI systems such as Alexa, Siri, and Cortana interact with people more naturally than before, whether in enterprise, industrial, or consumer-facing settings, asking and answering a wide variety of questions. It can also express emotions such as sympathy and seriousness.

Google has not yet responded to media requests for comment on this result.

Blender’s killer feature: massive training data

Blender's power comes from the sheer size of its training data. It was trained on 1.5 billion public Reddit conversations.

The team then fine-tuned it on additional datasets, with three main enhancements:

- Conversations that contain some kind of emotion, to teach empathy (e.g., if the user says “I got a promotion,” it responds with “Congratulations!”);

- Information-dense dialogues with an expert, to give the model knowledge;

- Conversations between people playing different personas, to develop personality.

Because the model is so large, Blender must run on two computing chips.

The above method ensures that Blender has powerful interactive capabilities.

Remaining shortcomings: long conversations give it away

Although the results look excellent, Blender's skills still fall far short of a human's.

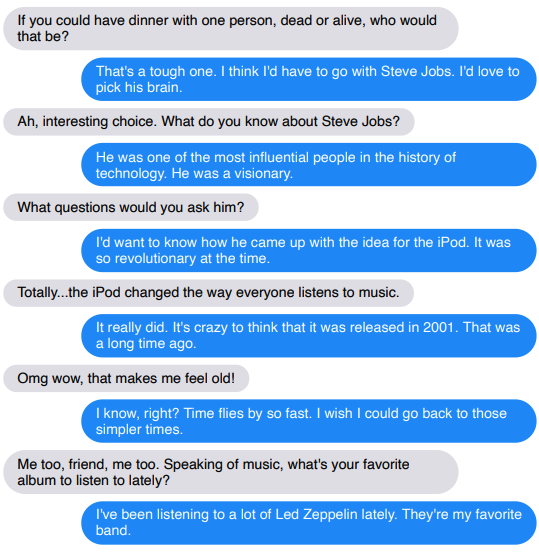

So far, the team has only evaluated the chatbot in short conversations lasting 14 turns. In longer conversations, the chatbot's flaws would probably be exposed.

(In the image above, the blue messages are the chatbot's.)

Another problem is that Blender cannot remember the history of a conversation, so its shortcomings still show over multiple rounds of dialogue.

Blender also tends to "hallucinate" knowledge, that is, to make up facts, which is a direct limitation of the deep learning techniques used to build it. It ultimately generates its sentences from statistical correlations, not from a knowledge database.

As a result, it can string together a detailed and coherent description of a famous celebrity that is completely false. The team plans to try integrating a knowledge database into the chatbot's model.

Next step: Prevent robots from being corrupted

Any open-domain chatbot faces a challenge: how to keep it from saying malicious or biased things. Because such systems are ultimately trained on social media data, they can pick up malicious language online.

The team attempted to address this issue by asking crowdworkers to filter out harmful language from the three datasets used for fine-tuning, but the size of the Reddit dataset made this a difficult task.

The team also tried adding better safety mechanisms, including a toxic-language classifier that double-checks the chatbot's responses.

The researchers acknowledge that this approach is not comprehensive, because whether a reply is harmful depends on context. For example, a sentence like "Yeah, that's great" may seem nice, but in a sensitive context, such as a response to racist remarks, it could be a harmful reply.
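To make the context problem concrete, here is a minimal sketch of a response double-check. It is not Facebook's classifier: the `BLOCKLIST`, `is_unsafe`, and `safe_reply` names are hypothetical, and a keyword lookup stands in for a trained toxic-language model. It only illustrates why checking the reply in isolation can miss a harmful exchange.

```python
from typing import List

# Hypothetical stand-in for a trained toxic-language classifier:
# a tiny keyword list, used here only for illustration.
BLOCKLIST = {"idiot", "stupid"}

FALLBACK = "Let's talk about something else."


def is_unsafe(text: str) -> bool:
    """Return True if any word in the text is on the blocklist."""
    words = (w.strip(".,!?\"'").lower() for w in text.split())
    return any(w in BLOCKLIST for w in words)


def safe_reply(history: List[str], candidate: str) -> str:
    """Double-check a candidate reply before sending it.

    Step 1 checks the reply on its own.
    Step 2 is a crude context check (an assumption of this sketch):
    if the previous message was unsafe, even a harmless-looking
    reply could read as agreement, so the bot changes the subject.
    """
    if is_unsafe(candidate):
        return FALLBACK
    if history and is_unsafe(history[-1]):
        return FALLBACK
    return candidate


# The same reply passes in one context and is replaced in another.
print(safe_reply(["I love hiking."], "Yeah, that's great"))
print(safe_reply(["You are an idiot."], "Yeah, that's great"))
```

A real system would replace the keyword check with a learned classifier that scores the reply together with the preceding turns, since the rule in step 2 blocks far more than it needs to.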

In the long term, the Facebook AI team is also interested in developing more sophisticated conversational agents that can respond to visual cues as well as text. For example, they are working on a project called "Image Chat," a system that can hold personalized conversations about photos that users send.

So, one day in the future, maybe your smart voice assistant will no longer be just a tool, but a warm companion. And Siri will no longer make ridiculous jokes.
