First Fully Online Livestream: What Did the TF Dev Summit Cover?

The annual TensorFlow Dev Summit was held recently. Because of the COVID-19 pandemic, it took place entirely online for the first time.

The livestream ran from 0:30 to 8:00 am Beijing time on March 12th, and this annual event for machine learning developers kept countless Chinese viewers up late. So what did TensorFlow (TF for short) announce?

Below, we pick out a few of the highlights for a brief review.

TensorFlow: The most popular ML framework

The conference opened with a keynote speech by Megan Kacholia.

Megan Kacholia is the engineering director for TensorFlow and Google Brain. She has worked at Google for many years, focusing on large distributed systems and on ways to improve their performance.

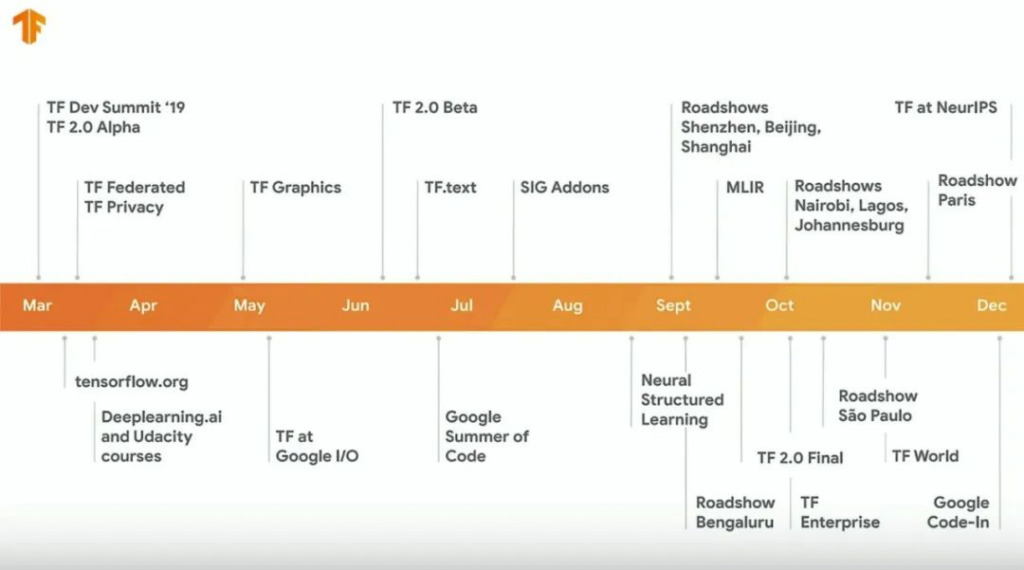

As usual, the keynote opened with a look back at TF's achievements over the past year, including major milestones and adoption figures.

TF has now been downloaded 76 million times in total, with more than 80,000 commits, over 13,000 pull requests, and more than 2,400 contributors. These figures underline its position as the most popular machine learning framework.

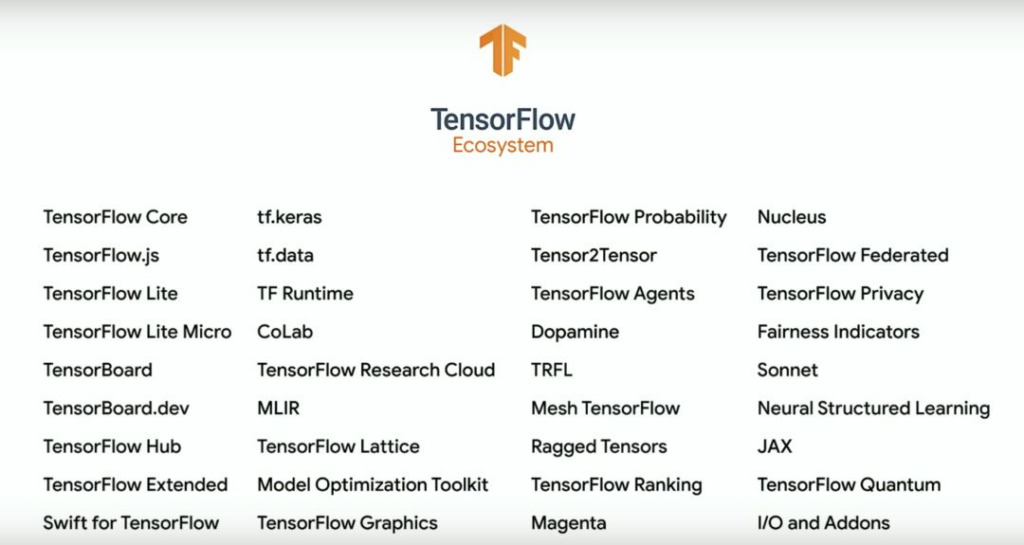

The focus of the keynote was the TensorFlow ecosystem, presented by three speakers.

Over the years, the tools around TF have grown into a powerful ecosystem that includes many libraries and extension components, as well as applications for a wide range of tasks.

Megan Kacholia spoke first, describing how TF is used from three angles: research built on TF, real-world applications, and development and deployment for users.

Manasi Joshi then presented TF's approach to responsible AI. She described how to avoid problems such as gender bias and how to address fairness, explainability, privacy, and security when using AI, and she listed a series of tools TF provides to tackle these issues.

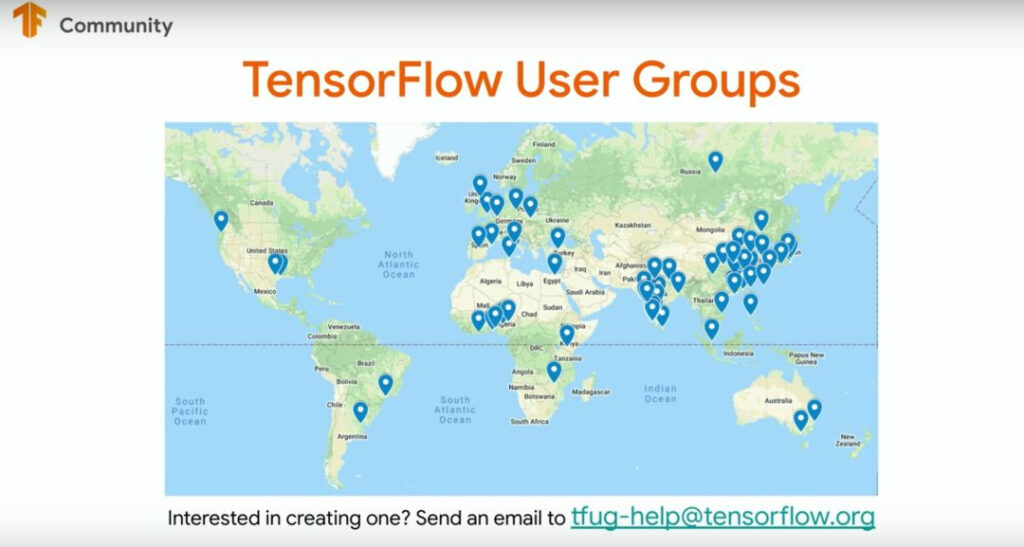

Finally, Kemal El Moujahid introduced the TF community and its worldwide distribution. He highlighted a series of community activities and presented updated learning resources such as the Machine Learning Crash Course.

A new version arrives: TF 2.2 quietly released

Compared with the TF ecosystem, which received so much attention in the keynote, the launch of the latest version, TF 2.2, was much more low-key.

Still, the new version brings some major updates. TF 2.2 focuses on three areas: performance, integration with the TF ecosystem, and stability of the core library.

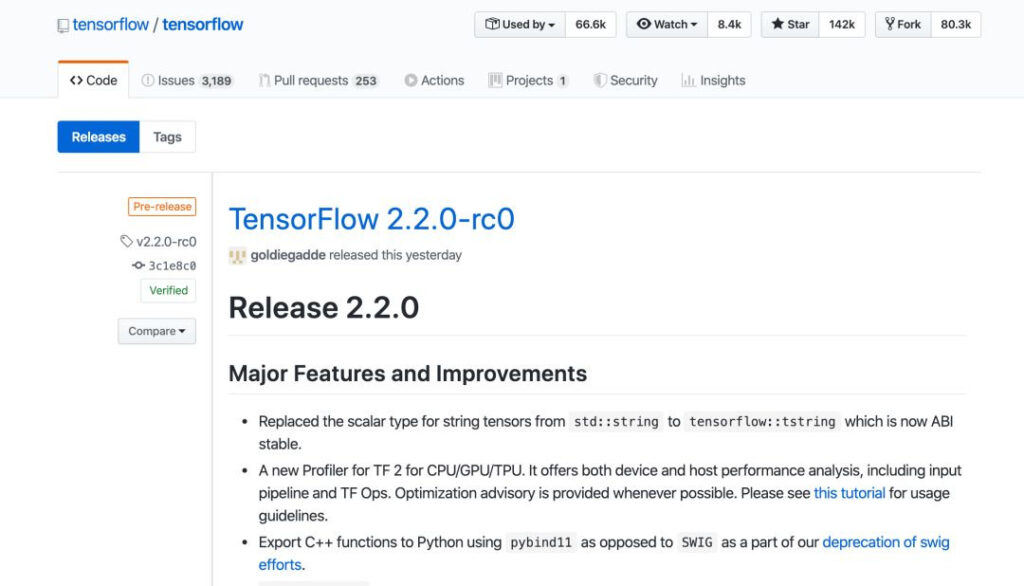

This version is now available on GitHub:

https://github.com/tensorflow/tensorflow/releases

The main features and improvements are:

1) The scalar type of string tensors was changed from std::string to tensorflow::tstring, which makes the ABI more stable.

2) A new Profiler for CPU/GPU/TPU. It provides device and host performance analysis, including the input pipeline and TF ops, and offers optimization suggestions where possible.

3) SWIG was deprecated in favor of pybind11 for exporting C++ functions to Python.

Other updates cover tf.keras, TF Lite, and XLA; details are in the release notes on GitHub.

A revolution in NLP? Following in image processing's footsteps

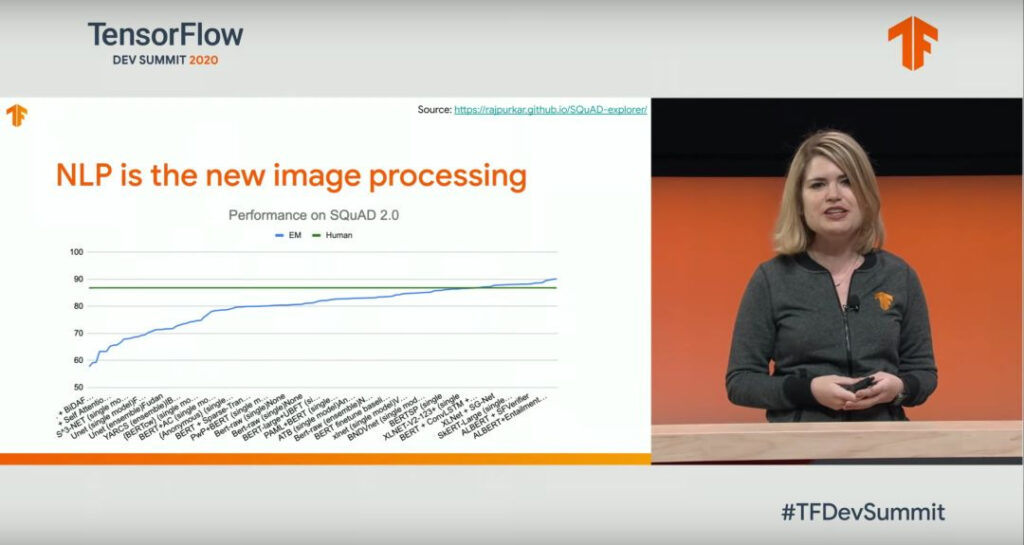

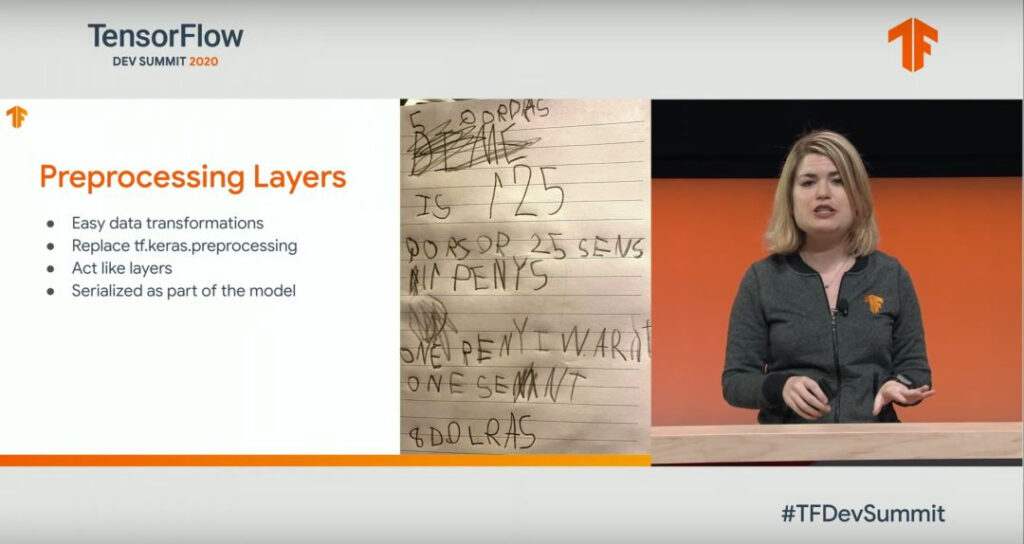

Right after the keynote, a talk titled "Learning to Read with TensorFlow and Keras" covered TensorFlow's progress in NLP.

The talk stated that natural language processing (NLP) has reached an inflection point, so current work focuses on using TF and Keras to make preprocessing, training, and hyperparameter tuning of text models easier.

One point was emphasized: NLP is the new image processing.

The speaker used the analogy of a child learning to write by looking at things, and said that TF has begun trying to improve its NLP support by borrowing ideas from image processing.

In fact, TF introduced Preprocessing Layers in the 2.x series. They have the following features:

They make data transformation easier: preprocessing is expressed as tf.keras layers instead of separate code, so it can be included in the model as part of its sequence of layers.
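The idea of preprocessing as a layer can be sketched in plain Python. This is a hypothetical toy rather than the tf.keras API; in the TF 2.x releases of that period, the real layers, such as TextVectorization, sit under tf.keras.layers.experimental.preprocessing. The names `TextVectorizer`, `Sequential`, and `bag_of_ids` below are illustrative assumptions. The sketch shows the pattern: `adapt()` learns a vocabulary from raw text, calling the layer turns strings into fixed-length integer ids, and the layer composes with downstream layers like any other part of the model.

```python
from collections import Counter


class TextVectorizer:
    """Hypothetical toy text preprocessing layer (not the real tf.keras class)."""

    PAD, OOV = 0, 1  # reserved ids: padding and out-of-vocabulary tokens

    def __init__(self, max_tokens=100, sequence_length=4):
        self.max_tokens = max_tokens
        self.sequence_length = sequence_length
        self.vocab = {}

    def adapt(self, texts):
        # Learn a vocabulary from raw strings; frequent tokens get small ids.
        counts = Counter(tok for t in texts for tok in t.lower().split())
        most_common = counts.most_common(self.max_tokens - 2)
        self.vocab = {tok: i + 2 for i, (tok, _) in enumerate(most_common)}

    def __call__(self, texts):
        # Map each string to exactly `sequence_length` ids (truncate or pad).
        out = []
        for t in texts:
            ids = [self.vocab.get(tok, self.OOV) for tok in t.lower().split()]
            ids = ids[: self.sequence_length]
            ids += [self.PAD] * (self.sequence_length - len(ids))
            out.append(ids)
        return out


class Sequential:
    """Hypothetical minimal model: a plain sequence of callable layers."""

    def __init__(self, layers):
        self.layers = layers

    def __call__(self, x):
        for layer in self.layers:
            x = layer(x)
        return x


def bag_of_ids(batch):
    """Hypothetical downstream 'layer' that just sums token ids per example."""
    return [sum(ids) for ids in batch]


if __name__ == "__main__":
    vec = TextVectorizer(max_tokens=10, sequence_length=4)
    vec.adapt(["the cat sat", "the dog sat", "the cat ran"])
    print(vec(["the fish"]))  # "the" is most frequent (id 2), "fish" is OOV
    model = Sequential([vec, bag_of_ids])
    print(model(["the fish"]))
```

Because preprocessing lives inside the model, the same vocabulary and padding rules travel with the model when it is saved or exported. That is the practical benefit the talk points to.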

To show that NLP is the new image processing, the speaker also compared Preprocessing Layers in the image and NLP domains and pointed out what they have in common.

TF Lite upgrade: more emphasis on mobile phone experience

As mobile phones become ever more important in daily life, TF is paying more attention to the user experience on edge devices. A dedicated talk shared updates on TensorFlow Lite.

It covered how new TF technologies make it faster and more secure to deploy ML on mobile phones, embedded devices, and other endpoints.

TF Lite is currently deployed on billions of edge devices and used by more than 1,000 mobile apps, making it the most popular cross-platform ML framework for mobile devices and microcontrollers.

This time the emphasis was on support for a wide range of devices, a set of toolkits for getting the best performance, continued improvements to on-device performance, offline use, and a greater focus on privacy and security.

The newly added TF Lite support library gained more image and language APIs, integration with Android Studio, and improvements to features such as code generation.

The talk also announced the Core ML delegate, which uses Apple's Neural Engine to speed up floating-point model inference on Apple devices.

Finally, upcoming work was announced: further CPU optimizations will bring larger performance gains in TensorFlow Lite 2.3, and a new model converter will be enabled by default in TF 2.2.

Blockbuster: TF Quantum released

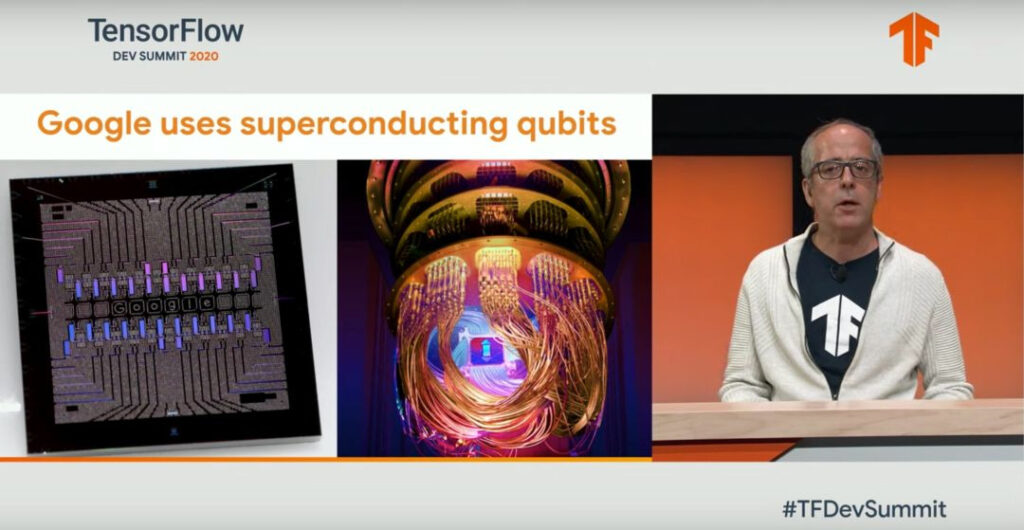

Near the end of the summit, Masoud Mohseni took the stage to present the recently announced, open-source TensorFlow Quantum, a machine learning library for training quantum models.

The talk was long, taking nearly half an hour to explain the principles of quantum computing and the problems TF Quantum is designed to solve.

TFQ provides the necessary tools to bring together research in quantum computing and machine learning to control and model natural or artificial quantum systems.

Specifically, TFQ focuses on processing quantum data and building hybrid quantum-classical models. It integrates quantum algorithms and logic designed in Cirq, provides quantum computing primitives compatible with the existing TensorFlow APIs, and includes a high-performance quantum circuit simulator.

The talk noted that Google has applied TFQ to hybrid quantum-classical convolutional neural networks, machine learning for quantum control, layer-wise learning of quantum neural networks, learning quantum dynamics, generative modeling of mixed quantum states, and learning to learn with quantum neural networks via classical recurrent neural networks.

Work like this is expected to give significant momentum to the field of quantum computing.

Although the principles took up much of the talk, actual usage is not difficult:

Import the relevant libraries and set up the definitions, define the model, train it, and finally use the trained model for prediction.
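To make those four steps concrete, here is a toy sketch in plain Python. It deliberately does not use TensorFlow Quantum or Cirq, so none of it reflects TFQ's actual API. It hand-simulates a one-qubit circuit (an Rx rotation applied to |0>, measured in Z) and treats the rotation angle as the trainable parameter. Gradients come from the parameter-shift rule, and training uses plain gradient descent. All function names are hypothetical. The classical optimizer driving a quantum expectation value is the hybrid quantum-classical pattern TFQ is built for.

```python
import math


# Step 1: definitions (a hand-written one-qubit simulator stands in for Cirq/TFQ)
def rx(theta):
    """X-rotation gate Rx(theta) as a 2x2 complex matrix."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -1j * s], [-1j * s, c]]


def apply(gate, state):
    """Multiply a 2x2 gate by a 2-element state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]


# Step 2: the model is a parameterized circuit plus a Z measurement
def expectation_z(theta):
    """Prepare |0>, apply Rx(theta), return <Z> = |a|^2 - |b|^2 (= cos theta)."""
    a, b = apply(rx(theta), [1 + 0j, 0j])
    return abs(a) ** 2 - abs(b) ** 2


def parameter_shift_grad(theta):
    """d<Z>/dtheta via the parameter-shift rule (two extra circuit runs)."""
    shift = math.pi / 2
    return (expectation_z(theta + shift) - expectation_z(theta - shift)) / 2


# Step 3: train with classical gradient descent on a squared-error loss
def train(target, theta=0.5, lr=0.5, steps=200):
    for _ in range(steps):
        pred = expectation_z(theta)
        grad = 2 * (pred - target) * parameter_shift_grad(theta)
        theta -= lr * grad
    return theta


# Step 4: predict with the trained parameter
if __name__ == "__main__":
    theta = train(target=-0.5)
    print(f"theta = {theta:.4f}, predicted <Z> = {expectation_z(theta):.4f}")
```

For a target of -0.5, the trained angle should approach 2π/3, since cos(2π/3) = -0.5. In TFQ the circuit would instead be built in Cirq and wrapped in Keras layers. The parameter-shift rule is used here because it needs only circuit evaluations, which is also what makes it usable on real quantum hardware.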

A livestream worth watching

In addition to the content above, the TensorFlow Dev Summit held many other sessions.

These included using TF for scientific research, an introduction to TF Hub, research on collaborative ML, using TF on Google Cloud, discussions of fairness and privacy in AI, and more.

Over nearly 8 hours of livestreaming, every aspect of TensorFlow was on display. Although this was the first time the event was held fully online, it was packed with useful content and well worth staying up late for.

If you want to learn more, you can watch the recordings of the event:

https://space.bilibili.com/64169458

-- End --