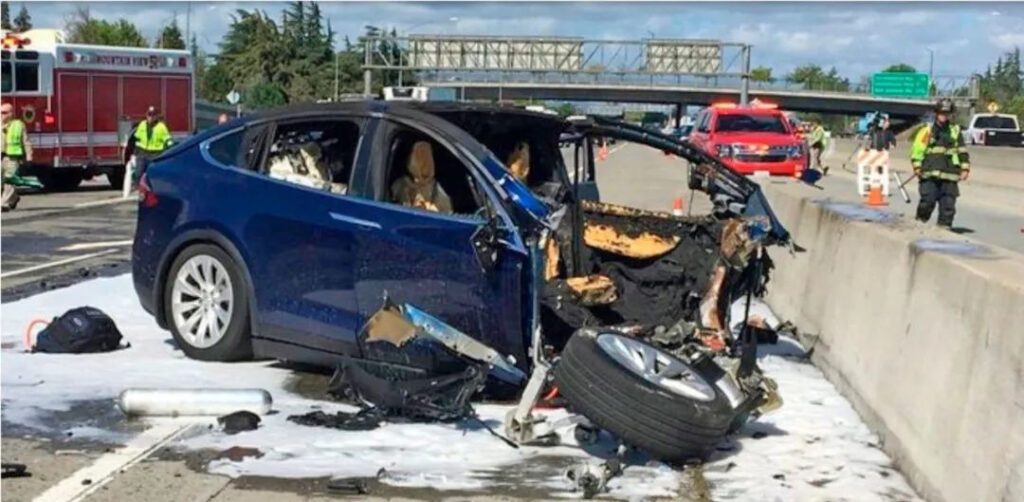

Accident Report Finally Released on Tesla's Fatal Crash Two Years Ago

The results of the investigation into a fatal 2018 Tesla crash were recently presented at a public hearing. The hearing explained the specific cause of the crash, including the shortcomings of Tesla's Autopilot system and the other factors that contributed to it.

Tesla's autopilot system has once again become the focus of attention in the United States.

On February 25, the National Transportation Safety Board (NTSB) held a public hearing in Washington to release its report on the fatal 2018 Tesla Model X crash in which an Apple engineer died.

The report covers the safety issues raised by Autopilot, Tesla's driver-assistance technology, in this fatal crash, along with the findings of the subsequent investigation.

The vehicle was under Autopilot control at the time of the accident

The accident occurred in March 2018, when Walter Huang (Huang Weilun, referred to below as Huang), a Chinese engineer at Apple, was driving a Model X on a highway in Mountain View, California. The vehicle drifted out of its lane and accelerated into the barrier dividing the road.

Huang was rescued and taken to hospital after the accident, but died a few hours later of his injuries. The role of the vehicle's Autopilot system subsequently raised questions of liability.

After reviewing the vehicle's data logs, Tesla issued a statement confirming that the Model X was under Autopilot control at the time of the accident, but also noted that the data showed the driver's hands were off the steering wheel before the crash.

The NTSB, an independent U.S. government agency responsible for investigating civil transportation accidents, took over the case, investigated the specific causes of the accident, and has now issued its report.

Tesla: Safety systems need to be strengthened

At the hearing, NTSB Chairman Robert Sumwalt said that the finding that shocked him the most was Tesla's "lack of system safeguards."

The NTSB found that the vision processing system of Huang's car failed to keep the vehicle on the correct path when it encountered an unexpected situation, and steered it incorrectly toward the steel safety guardrail and concrete wall. The system did not detect the safety barrier and did not even appear to attempt to do so.

In addition, the autonomous driving system did not respond in time when danger approached. The car's collision warning system did not sound an alarm, and the automatic braking system was not activated.

Another problem is that Tesla did not provide adequate means of monitoring driver distraction. In this incident, the driver took his hands off the steering wheel several times, but only two visual alerts and one auditory alert were issued 15 minutes before the accident, and no further reminders were given after that.

But the NTSB also pointed out that Tesla's system was not the only cause of the accident. Beyond the shortcomings of Autopilot, the investigation exposed several other contributing factors.

Multiple reasons led to the tragedy

The NTSB said that Huang's death was the result of a combination of factors, and Autopilot was just one of them.

Reason 1: Driver distraction

The report showed that the driver was not holding the steering wheel for 34 seconds out of the 60 seconds before the accident, and in the last 6 seconds before the impact, the driver's hands were off the steering wheel and there was no sign of braking or evasive action.

The investigation also found that a game was active on the driver's mobile phone at the time of the crash, suggesting that he was playing it. The data also showed that he had been sending and receiving text messages during that period.

Reason 2: Facility damage

The condition of the road barrier was also a key factor. Huang's car should have been cushioned by a crash attenuator when it hit the concrete barrier.

But the NTSB determined that the attenuator had been damaged in another vehicle crash at the same location a week before the accident, and that Caltrans, the California Department of Transportation, had not repaired it in time.

Reason 3: Inadequate review

Some of the NTSB's criticism was also directed at the nation's top highway safety regulator, the National Highway Traffic Safety Administration (NHTSA), which is part of the Department of Transportation.

The NTSB board said NHTSA has the enforcement authority to recall vehicles with technical defects but has failed to use it.

NHTSA has provided little oversight of self-driving technology and has ignored recommendations to improve the safety of these systems.

Reason 4: Employer’s negligence

Finally, Apple was also faulted as Huang's employer. The NTSB pointed out that the "Do Not Disturb While Driving" feature on Apple phones is optional, not a default setting.

The board also pointed out that companies, including Apple, have no mandatory policies to stop employees from using smartphones while driving.

There are still many difficulties in autonomous driving

Although the NTSB reached these conclusions and issued recommendations, it has no legal authority to enforce them. It is up to other regulatory and enforcement agencies to decide whether to adopt them.

Sumwalt made it clear that this crash was not an isolated incident, and that safety will be a severe test as humans and intelligent systems become increasingly intertwined.

In the United States, where there are millions of highway accidents each year, the NTSB said it is currently investigating 17 crashes, three of which involved Tesla's Autopilot technology, while NHTSA said it is investigating at least 14 crashes involving Autopilot.

This may not be good news for Tesla and Musk.