Scandal-Plagued Facebook Tries to Use an AI Bot to Control What Employees Say

Public relations crises have always been a major problem in corporate management. As companies expand in size and influence, unifying what employees say in public has also been put on the agenda. Where companies once relied on all-staff meetings and group study of press drafts, AI has now become a powerful tool for internal employee training.

When public relations crises come up, many readers first think of mass posting and mass deletion of posts online. In fact, public opinion monitoring is just one of the daily tasks of a company's public relations department.

As companies grow in size and influence, the role of their public relations departments becomes critical. In a crisis, the company must respond accurately and quickly, and it must speak with one voice to avoid secondary damage.

Ele.me: The PR disaster caused by “forgetting to renew”

On March 15, 2016, CCTV's 315 Gala exposed a number of unscrupulous workshops on the food delivery platform Ele.me.

The report pointed directly to lax management on the Ele.me platform, including guiding merchants to fabricate addresses and upload fake storefront photos, and even allowing unlicensed workshops onto the platform.

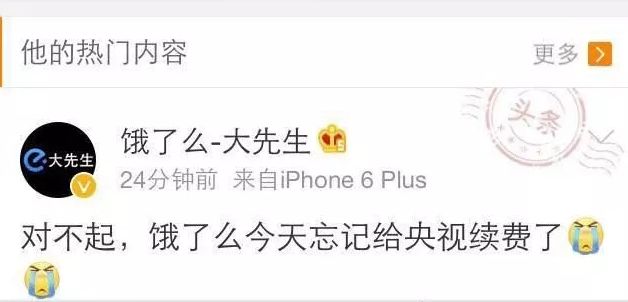

As soon as the report aired, before Ele.me's official Weibo account had time to issue a statement, an Ele.me employee posted on Weibo: "Sorry, Ele.me forgot to renew its subscription with CCTV today."

The verified Weibo profile of this outspoken "Mr. Da" lists the account holder as a senior marketing manager for online food ordering at Ele.me.

A single stone can cause a thousand ripples. Mr. Da's post spread quickly across the Internet, and netizens began to criticize Ele.me before they had even finished watching the 315 Gala.

Instead of admitting the mistake and fixing it quickly, the company appeared to pass the buck and blame CCTV. The direct consequence was that the inappropriate remark by the "excellent" employee Mr. Da left Ele.me with an even more serious public relations crisis.

Liam Bot: Using AI to teach employees to answer in the same way

Facebook has encountered many "public relations crises" in the past two years.

They range from last year's Cambridge Analytica incident, which exposed user data and led to a $5 billion fine, to this year's death of a Chinese employee who jumped from a building under heavy pressure and the rejection of the blockchain project Libra by various countries.

Zuckerberg, the eighth richest man in the world, raised the banner of "connecting the world," yet he has led Facebook into a quagmire of public opinion in which it struggles to move forward.

Zuckerberg and Facebook's public relations department are not the only ones under pressure. Rank-and-file employees have said that they don't know how to respond to sensitive questions from relatives and friends.

When a company faces a public relations crisis, its public relations team advises management on how to respond to inquiries from the government and the media, which greatly reduces the chance that senior executives say the wrong thing or strike the wrong tone.

But rank-and-file employees have no such safety net. If the public relations team does not guide them properly, any careless remark from an employee may become fuel for a "national crusade."

Facebook is aware of the urgency of the issue and has developed an AI question-and-answer robot, Liam Bot, to help employees deal with controversial issues involving the company's position.

According to the New York Times, the answers given by Liam Bot are provided by Facebook's public relations department. Drawing on public statements by Zuckerberg and other executives, Liam Bot can also produce a set of talking points for a given question, helping employees handle tricky questions from relatives and friends at holiday gatherings.

Facebook began internal testing of Liam Bot this spring and released it on Thanksgiving Eve. The bot guides employees through questions about hate speech, Facebook's attitude and practices toward fake news, user privacy leaks, election interference, and other issues. It can be said that the company is really concerned about its employees.

For example, when employees are asked how Facebook handles hate speech, Liam Bot offers them four different responses:

① Facebook has teamed up with relevant experts to study solutions

② The company is developing artificial intelligence technology to detect and deal with hate speech in a timely manner

③ The company has hired content reviewers to help monitor content

④ Solving this problem requires improvements to the relevant laws

In addition, Liam Bot suggests that employees cite Facebook's quarterly reports and other data to make their answers more persuasive.

Is it politically correct to unify the public relations stance?

In response to Liam Bot, a large number of opposing voices have emerged on social networks.

Translation: If Facebook really cared about the opinions of its employees' families, it would regulate its own behavior instead of trying to train its employees like parrots.

Translation: Happy holidays to all the Facebook employees, but it's never a good thing when your company wants to train you to say good things about it.

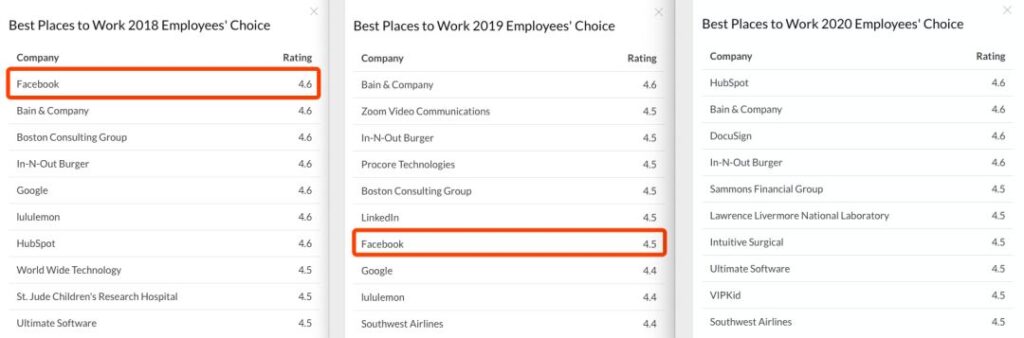

Glassdoor recently released its employee-chosen ranking of the best places to work for 2020. Facebook ranked first in 2018, fell to seventh in 2019, and dropped out of the top ten in 2020, so its image in the eyes of its employees has been on a downward spiral.

As netizens put it, rather than using an AI chatbot to unify what employees say in public, Facebook would do better to spend more energy dealing with hate speech and preventing leaks of user information.
