By Super Neuro

In recent days, a major suspected data-leak case came to light in China. Eleven companies were implicated, and 4,000 GB of citizens' personal data, amounting to tens of billions of records, were seized. A well-known domestic big data company was among those implicated.

The data in this case is highly private. The Internet URL data involved contains more than 40 information fields, such as mobile phone numbers and the codes of the base stations used to go online, which together record the specific online behavior of mobile phone users. Some of the data could even be used to go directly to the homepages of citizens' personal accounts.

If you want to develop AI, do you inevitably have to hoard data?

For R&D engineers at any AI company in the world, access to large amounts of real data is a great help in developing AI models, especially when the data is clean. It lets them process data more conveniently, compare and evaluate models more efficiently, and arrive at the right solutions to real-world problems.

However, because of confidentiality concerns, the data that the tech giants can share is quite limited. As a result, buying data from large companies is actually common practice in the industry.

Not only in China but around the world, users lack a clear understanding of data privacy and confidentiality. When using Internet products, they have little choice but to click "Agree" on the user agreement.

The big players buy the data. Then what?

Having spent a lot of money on data, the big players naturally make the most of it.

They buy data, collect more through their own products, and develop more secure encryption methods to protect what they hold.

The strong stay strong, and the weak stay weak.

As engineers, let's look at several commonly used data protection methods and how to understand their properties and principles.

Anonymized data: an inherently weak protection mechanism

Currently, the most common way to keep shared data confidential is to anonymize the dataset, but in most cases this is still not a good solution.

Anonymization provides some confidentiality by masking sensitive fields, but it cannot stop a skilled analyst from reasoning about what was masked. In practice, the hidden values can often be inferred by working backward from related information.

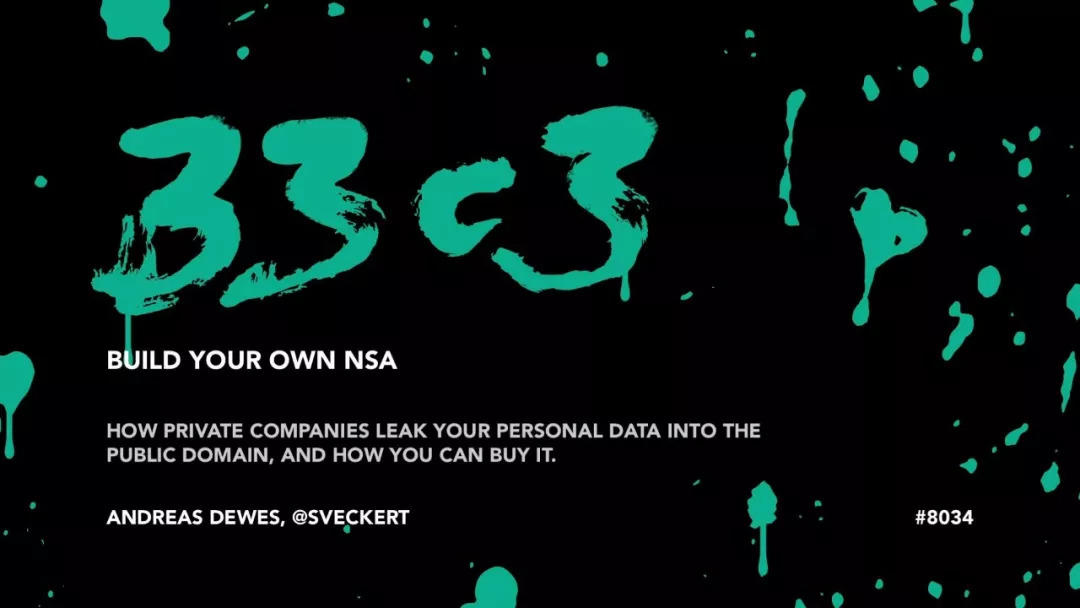

Previously, a German researcher published a study titled "Build your own NSA", which describes how to reverse data anonymization and recover the original information.

Through a fictitious company, the researcher obtained, free of charge, a month's worth of web clickstream data from about 3 million Germans. The data was anonymized, for example by replacing each user's real name with a string of random characters such as "4vdp0qoi2kjaqgb".

Using each user's browsing history together with other related information, the researcher was nonetheless able to deduce users' real identities. Data anonymization, in other words, cannot guarantee confidentiality.

The research was presented at the Chaos Communication Congress, which is hosted by the Chaos Computer Club, Europe's largest hacker association. The congress focuses on computer and network security issues.
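The kind of linkage attack described above can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical dataset in which each pseudonym maps to the set of URLs it visited, and an attacker who knows a few URLs that only a given person is likely to visit (for example, the settings page of their own social media profile). The URLs, the people, and the `reidentify` helper are all invented for illustration; this is not the researchers' actual method.

```python
# Hypothetical pseudonymized clickstreams: random ID -> set of visited URLs.
CLICKSTREAMS: dict[str, set[str]] = {
    "4vdp0qoi2kjaqgb": {"news.example/politics", "shop.example/cart",
                        "social.example/u/anna.k/settings"},
    "x81mzq02lpe7rtd": {"news.example/politics", "weather.example/berlin"},
    "k2j9vbq0aa1ow3c": {"shop.example/cart", "weather.example/berlin",
                        "forum.example/thread/42"},
}

# Hypothetical side information: URLs the attacker knows each person visits.
KNOWN_VISITS: dict[str, set[str]] = {
    "Anna K.": {"social.example/u/anna.k/settings"},  # highly distinctive URL
    "Ben M.": {"news.example/politics"},              # common URL
}


def reidentify(clickstreams: dict[str, set[str]],
               known_visits: dict[str, set[str]]) -> dict[str, str]:
    """Link a person to a pseudonym when exactly one history contains all their known URLs."""
    matches = {}
    for person, urls in known_visits.items():
        candidates = [pid for pid, history in clickstreams.items() if urls <= history]
        if len(candidates) == 1:  # a unique match breaks the anonymization
            matches[person] = candidates[0]
    return matches


matches = reidentify(CLICKSTREAMS, KNOWN_VISITS)
print(matches)  # {'Anna K.': '4vdp0qoi2kjaqgb'}
```

Ben stays anonymous here only because his one known URL appears in two histories; a single distinctive URL is enough to single Anna out, even though her real name never appears in the data.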

Enter homomorphic encryption

Homomorphic encryption is one of the breakthrough achievements of cryptography. Computations are carried out directly on ciphertexts, so whoever decrypts the output learns only the final result, not the individual values behind each ciphertext.

Homomorphic encryption can substantially improve information security and may become a key technology for AI in the future, but for now its applications are limited.

Put simply, homomorphic encryption means you can use my data for whatever computation you need, without being able to see what the data actually is.
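To make "computing on data you cannot see" concrete, here is a toy sketch using the Paillier cryptosystem. Paillier is a swapped-in example: it is only additively homomorphic (multiplying two ciphertexts adds the hidden plaintexts), not a fully homomorphic scheme of the kind an AI pipeline would need. The tiny primes, the salary figures, and the function names are illustrative assumptions, and keys this small are not secure.

```python
import math
import random


def make_keys(p: int, q: int):
    """Toy Paillier key pair from two distinct primes. Far too small to be secure."""
    n = p * q
    n_sq = n * n
    lam = math.lcm(p - 1, q - 1)
    # With generator g = n + 1, mu is the inverse of L(g^lam mod n^2) modulo n,
    # where L(x) = (x - 1) // n.
    mu = pow((pow(n + 1, lam, n_sq) - 1) // n, -1, n)
    return n, (n, lam, mu)  # (public key, private key)


def encrypt(n: int, m: int, rng: random.Random) -> int:
    """Encrypt an integer 0 <= m < n using fresh randomness r coprime to n."""
    n_sq = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = rng.randrange(1, n)
    return (pow(n + 1, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(private_key, c: int) -> int:
    n, lam, mu = private_key
    n_sq = n * n
    return ((pow(c, lam, n_sq) - 1) // n * mu) % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the hidden plaintexts (mod n)."""
    return (c1 * c2) % (n * n)


# Data owner: encrypts three salaries and hands over only the ciphertexts.
public_key, private_key = make_keys(293, 433)
rng = random.Random(7)
salaries = [4200, 5100, 3900]
encrypted = [encrypt(public_key, s, rng) for s in salaries]

# Untrusted party: sums the ciphertexts without ever seeing a salary.
encrypted_total = encrypted[0]
for c in encrypted[1:]:
    encrypted_total = add_encrypted(public_key, encrypted_total, c)

# Data owner: decrypts only the aggregate.
print(decrypt(private_key, encrypted_total))  # 13200
```

The party doing the addition holds only the public key and the ciphertexts, so it never learns any individual salary; only the key holder can read the total. The code needs Python 3.9+ for `math.lcm`.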

Effective as this method is, its computational cost is very high.

Basic homomorphic encryption can turn 1 MB of data into 16 GB, which is prohibitively expensive in AI scenarios. Moreover, homomorphic encryption (like most encryption algorithms) is usually not differentiable, which makes it a poor fit for the gradient-based training, such as stochastic gradient descent (SGD), that mainstream AI relies on.

At present, homomorphic encryption remains largely at the conceptual level and is hard to put into practice, though it holds promise for the future.

A closer look at GAN-based encryption

In 2016, Google published a paper called "Learning to Protect Communications with Adversarial Neural Cryptography". It describes in detail a GAN-based encryption technique that can effectively address the problem of protecting data while it is being shared.

The technique is built on neural networks, which are usually considered poorly suited to encryption because they have difficulty performing XOR operations.

It turns out, however, that neural networks can learn to keep data secret from other neural networks: they discover their own ways to encrypt and decrypt without being given any encryption or decryption algorithm.
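Why XOR matters: the textbook example of perfect secrecy, the one-time pad, encrypts by XOR-ing the plaintext with a random key of the same length and decrypts by XOR-ing again with the same key. The sketch below shows that round trip, then checks by brute force over a small grid of weights that a single linear threshold unit (the simplest artificial neuron) cannot reproduce XOR. The grid search only illustrates the point; the general result is that XOR is not linearly separable. Function names and the grid range are assumptions chosen for illustration.

```python
import itertools
import secrets


def xor_bits(a: list[int], b: list[int]) -> list[int]:
    """Bitwise XOR of two equal-length bit lists."""
    return [x ^ y for x, y in zip(a, b)]


# One-time pad: the key is random, secret, and as long as the message.
plaintext = [1, 0, 1, 1, 0, 0, 1, 0]
key = [secrets.randbelow(2) for _ in plaintext]
ciphertext = xor_bits(plaintext, key)   # Alice encrypts
recovered = xor_bits(ciphertext, key)   # Bob decrypts with the shared key
print(recovered == plaintext)  # True


def threshold_unit(w1: float, w2: float, bias: float, x1: int, x2: int) -> int:
    """A single neuron with a step activation."""
    return 1 if w1 * x1 + w2 * x2 + bias > 0 else 0


XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
GRID = [i / 2 for i in range(-10, 11)]  # weights and bias in [-5, 5], step 0.5


def computes_xor(w1: float, w2: float, bias: float) -> bool:
    return all(threshold_unit(w1, w2, bias, x1, x2) == y
               for (x1, x2), y in XOR_TABLE.items())


found = [params for params in itertools.product(GRID, repeat=3) if computes_xor(*params)]
print(found)  # []
```

A multi-layer network can represent XOR, but learning to mix key and plaintext bits this way is still the kind of operation gradient-trained networks find awkward, which is why the result that networks can learn to encrypt at all was notable.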

How GAN encryption protects data

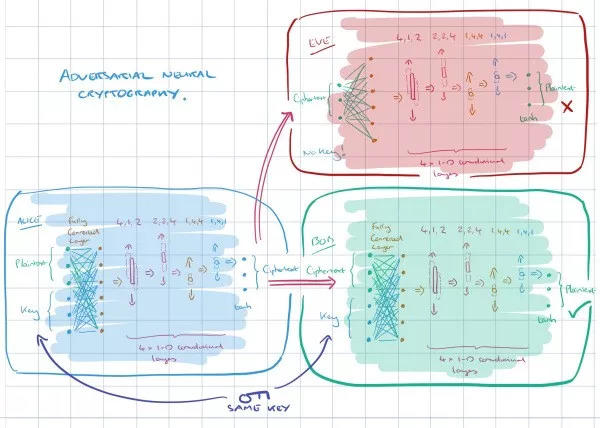

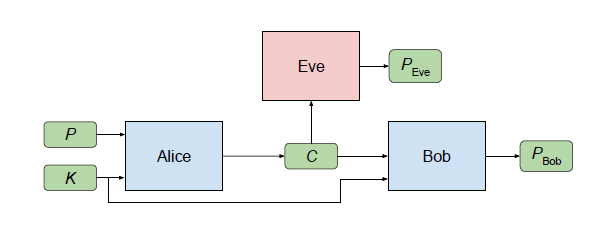

GAN-based encryption involves three parties, conventionally called Alice, Bob, and Eve. Alice and Bob are the two ends of a secure communication, while Eve eavesdrops on their communication and tries to work back to the original message.

Alice sends Bob a secret message P, which is Alice's input. When Alice processes this input, she produces an output C ("P" stands for "plaintext" and "C" for "ciphertext").

Bob and Eve both receive C and try to recover P from it; we denote their reconstructions P_Bob and P_Eve, respectively.

Bob has an advantage over Eve: he and Alice share a secret key K.

Eve's goal is simple: to reconstruct P exactly (in other words, to minimize the error between P and P_Eve).

Alice and Bob want to communicate accurately (to minimize the error between P and P_Bob), but they also want to hide their communication from Eve.

With GAN-style adversarial training, Alice and Bob are trained together to transmit information successfully while learning to defeat Eve's eavesdropping. No predefined cryptographic algorithm is used anywhere in the process. Following the GAN principle, Alice and Bob are trained to beat the best possible Eve, not a fixed Eve.
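The two competing objectives above can be written as loss functions. The sketch below assumes messages are bit vectors of length N holding 0 or 1 and measures error as the L1 distance, which then equals the number of wrong bits. For Alice and Bob it adds a penalty that is zero when Eve gets N/2 bits wrong, i.e. does no better than random guessing; this penalty is modeled on the form I recall from the 2016 paper and should be treated as an assumption rather than a faithful reproduction. In real training these values would be averaged over a minibatch and minimized by gradient descent; here they are plain functions.

```python
def l1_error(p: list[int], p_hat: list[int]) -> float:
    """L1 distance; for 0/1 vectors this is the number of wrong bits."""
    return sum(abs(a - b) for a, b in zip(p, p_hat))


def eve_loss(p: list[int], p_eve: list[int]) -> float:
    # Eve simply wants to reconstruct P: minimize her error.
    return l1_error(p, p_eve)


def alice_bob_loss(p: list[int], p_bob: list[int], p_eve: list[int]) -> float:
    # Bob should reconstruct P exactly, while Eve is pushed toward
    # N/2 wrong bits (chance level). Penalty form is an assumption.
    half = len(p) / 2
    eve_error = l1_error(p, p_eve)
    return l1_error(p, p_bob) + (half - eve_error) ** 2 / half ** 2


p = [1, 0, 1, 1, 0, 0, 1, 0]
eve_guessing = [0, 1, 0, 0, 0, 0, 1, 0]    # 4 of 8 bits wrong: chance level
eve_inverted = [1 - bit for bit in p]      # every bit wrong

print(alice_bob_loss(p, p, eve_guessing))  # 0.0 (Bob perfect, Eve at chance)
print(alice_bob_loss(p, p, p))             # 1.0 (Eve reads everything)
print(alice_bob_loss(p, p, eve_inverted))  # 1.0 (Eve can just flip her output)
```

The penalty treats an Eve who is always wrong as badly as one who is always right, because an Eve who is always wrong could simply invert her output. Alice and Bob are therefore rewarded for making Eve's output look like random guessing, not for making her maximally wrong.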

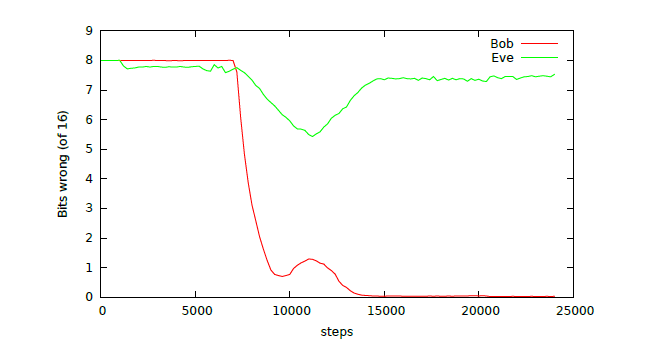

As shown in the figure above, at about 8,000 training steps both Bob and Eve begin to be able to reconstruct the original message. At about 10,000 steps, the Alice and Bob networks seem to discover Eve and begin to interfere with her, causing Eve's error rate to rise. In other words, Bob learns to respond to Eve's behavior and protect the communication, reconstructing the message accurately while fending off the attack.

Back to AI applications: GAN-based encryption could be used to exchange information between companies and neural networks while maintaining a high degree of privacy, which makes it a practical data protection solution for AI.

This is because the model can learn to protect information selectively: it can leave some elements of a dataset unencrypted while preventing any form of inference from recovering the sensitive ones, which sidesteps the weaknesses of data anonymization.

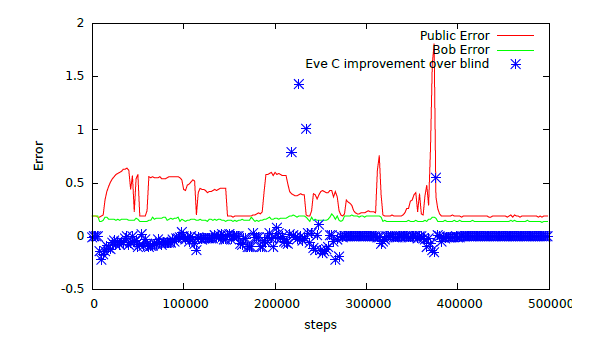

The Google team adapted the GAN encryption architecture to a model in which Alice and Bob still share a key, but Alice now receives A, B, and C and, in addition to the ciphertext, produces D-public, a public estimate of D.

Both Bob and Eve have access to Alice's outputs. Bob uses them to produce an improved estimate of D, while Eve tries to reverse-engineer C from them. The goal is to show that adversarial training can produce an approximation of D without revealing C, and that Bob can combine this approximation with the ciphertext and the key to obtain a better estimate of D.

To verify that the system hides information correctly, the researchers created an evaluator called "Blind Eve". Blind Eve knows the distribution of C but, unlike Eve, does not see D-public or the ciphertext.

If Eve's reconstruction error equals Blind Eve's, Eve has not extracted any useful information. After training, Eve no longer has an advantage over Blind Eve, which shows that she can reconstruct C no better than by simply learning the distribution of C values.
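The Blind Eve comparison can be sketched under simplifying assumptions: C is drawn from a standard normal distribution, error is mean squared error, and Blind Eve is modeled analytically as the best guess available to someone who knows only the distribution of C, which under squared error is its mean. The function names and the simulated "leaky" and "hidden" Eves are hypothetical, not taken from the paper.

```python
import random
import statistics


def mse(targets, predictions) -> float:
    return statistics.fmean((t - p) ** 2 for t, p in zip(targets, predictions))


def blind_eve_error(c_values) -> float:
    """Error of the best constant guess (the mean): all that knowing C's distribution allows."""
    best_guess = statistics.fmean(c_values)
    return mse(c_values, [best_guess] * len(c_values))


def eve_advantage(c_values, eve_guesses) -> float:
    """Positive only when Eve beats Blind Eve, i.e. extracts information about C."""
    return blind_eve_error(c_values) - mse(c_values, eve_guesses)


rng = random.Random(0)
c_values = [rng.gauss(0.0, 1.0) for _ in range(10_000)]

# Leaky system: Eve's guesses track C closely.
leaky_eve = [c + rng.gauss(0.0, 0.3) for c in c_values]

# Well-hidden system: Eve can only output a constant learned from past data.
past_mean = statistics.fmean(rng.gauss(0.0, 1.0) for _ in range(10_000))
hidden_eve = [past_mean] * len(c_values)

print(eve_advantage(c_values, leaky_eve) > 0.5)    # True
print(eve_advantage(c_values, hidden_eve) <= 1e-12)  # True
```

Any constant guess other than the true mean does slightly worse than Blind Eve, so an Eve who has learned nothing beyond the distribution shows an advantage of zero or a little below it. A clearly positive advantage is the signal that information about C is leaking.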

GAN-based cryptography is still new to mainstream AI applications, but in principle it could allow companies to share datasets with data scientists without disclosing sensitive data.

In the long run, if Internet companies want to earn user trust and reduce legal risk, encryption technology is secondary. What matters most is that they respect user privacy and use user data responsibly.

Super Neuropedia

Word

discriminator

[dɪ'skrɪməˌneɪtə] n. discriminator

sigmoid

['sɪgmɔɪd] n. sigmoid function

Phrase

Generative Adversarial Network

Historical articles (click on the image to read)

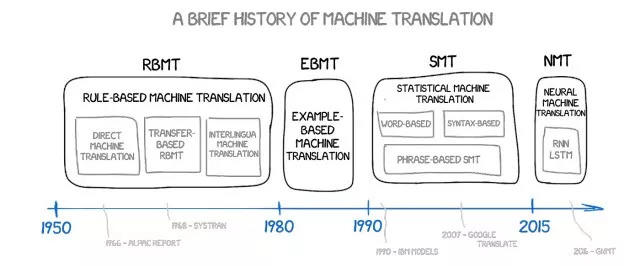

"Machine translation has been developed for 60 years, but it still seems to be mediocre?"

《France was just a step away from becoming the world's top technology power》

《Hey! Happy birthday, Turing!》

《If Turing is the father of AI,

So Shannon should be the uncle of AI?》