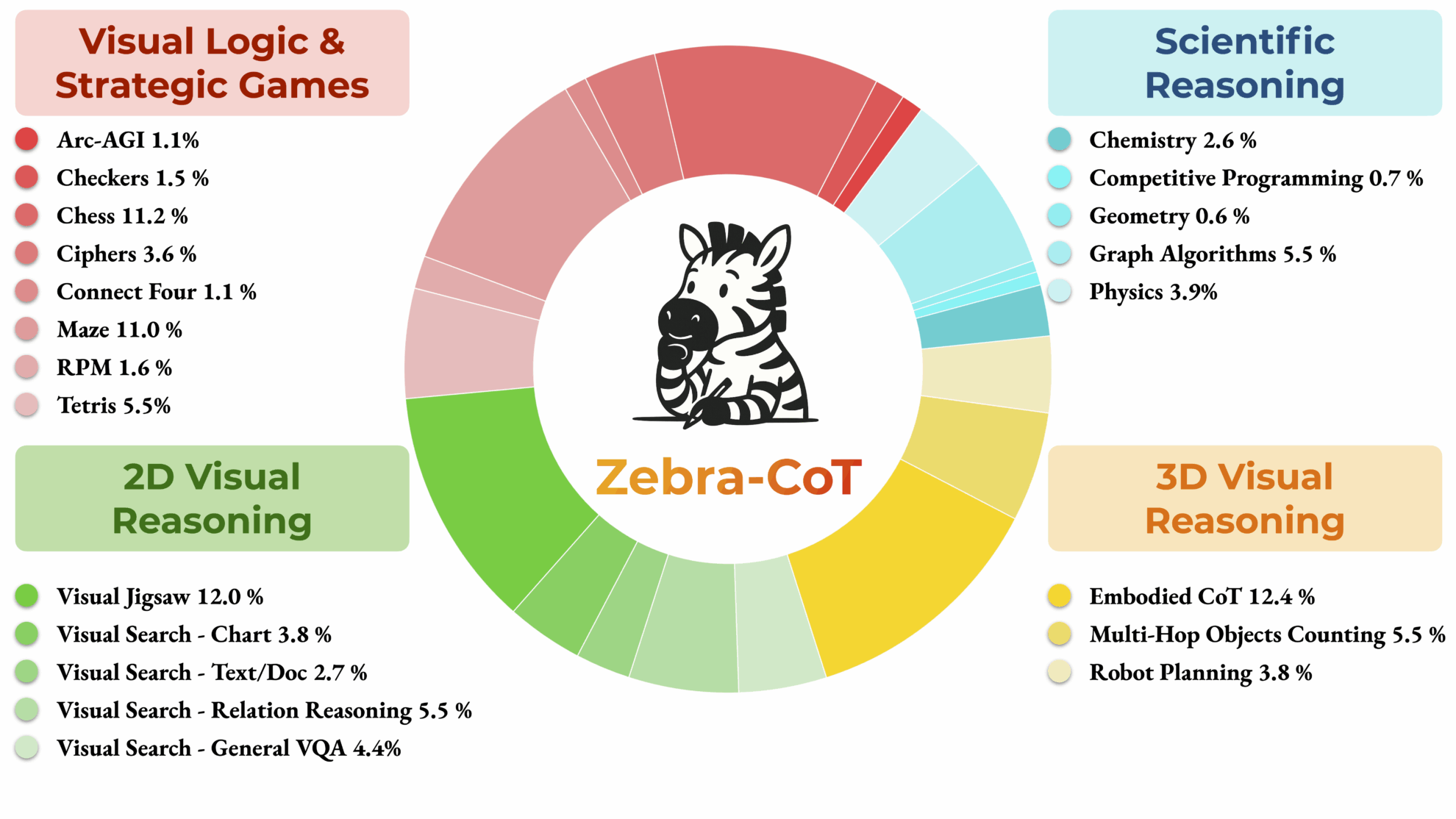

Zebra-CoT Interleaved Text-Image Reasoning Dataset

Zebra-CoT is a vision-language reasoning dataset released jointly in 2025 by Columbia University, the University of Maryland, the University of Southern California, and New York University. The accompanying paper is "Zebra-CoT: A Dataset for Interleaved Vision Language Reasoning". The dataset aims to help models better understand the logical relationships between images and text, and it can be applied to tasks such as visual question answering and image captioning to improve reasoning ability and accuracy.

The dataset contains 182,384 samples covering four main categories: scientific reasoning, 2D visual reasoning, 3D visual reasoning, and visual logic and strategy games. Each sample contains a logically coherent, interleaved text-image reasoning trace.

Dataset structure:

- Problem description: A text description of the problem.

- Question images: Zero or more images accompanying the problem, depending on the nature of the question.

- Reasoning images: One or more visual aids that support the intermediate reasoning steps in the problem-solving process.

- Textual reasoning trace: A sequence of textual reasoning steps with placeholders for the corresponding visual sketches or diagrams.

- Final answer: The solution to the problem.
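The five fields above can be sketched as a small Python container. This is only an illustration: the class name `ZebraCoTSample`, the field names, and the `validate` helper are hypothetical and are not taken from the released dataset, whose actual column names and image encoding may differ. Images are represented here as file paths purely for simplicity.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ZebraCoTSample:
    """Hypothetical container mirroring the five fields listed above."""

    problem: str            # text description of the problem
    reasoning_trace: str    # textual reasoning steps with image placeholders
    final_answer: str       # solution to the problem
    # Zero or more images that accompany the question (paths are an assumption).
    question_images: List[str] = field(default_factory=list)
    # One or more images that support intermediate reasoning steps.
    reasoning_images: List[str] = field(default_factory=list)

    def validate(self) -> None:
        """Raise ValueError if the sample violates the constraints described above."""
        if not self.problem.strip():
            raise ValueError("problem description is empty")
        if len(self.reasoning_images) < 1:
            raise ValueError("expected at least one reasoning image")
        if not self.final_answer.strip():
            raise ValueError("final answer is empty")


# Usage: a toy sample with no question image and one reasoning image.
sample = ZebraCoTSample(
    problem="How many triangles are in the figure?",
    reasoning_trace="First, outline each small triangle. [reasoning image 1]",
    final_answer="5",
    reasoning_images=["step_1.png"],
)
sample.validate()  # passes silently because all constraints hold
```

Keeping `question_images` optional and requiring at least one entry in `reasoning_images` reflects the "zero or more" and "one or more" wording in the list above.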
