
M2RAG Multimodal Evaluation Benchmark Dataset

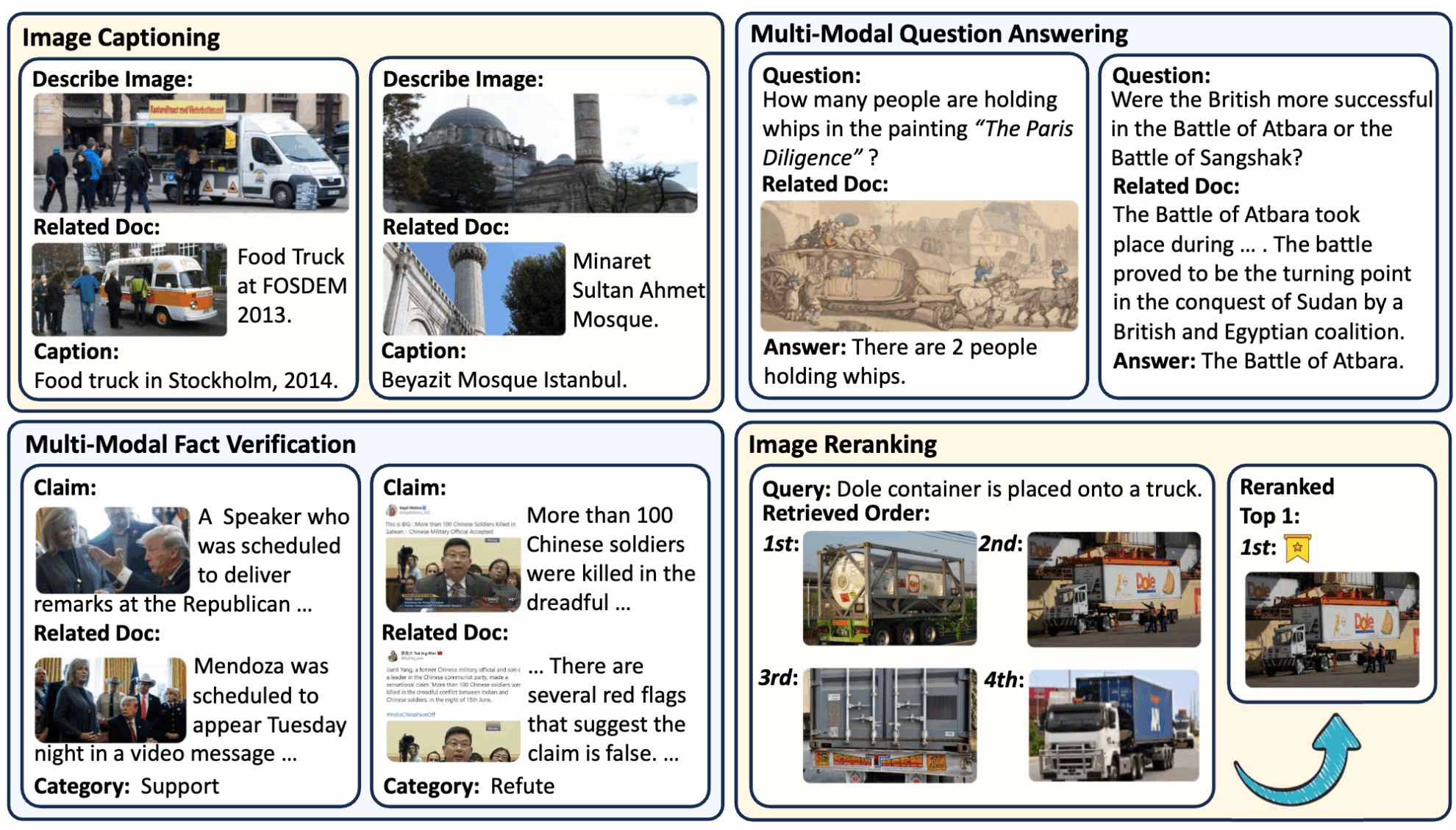

M2RAG is a multimodal dataset for evaluating the capabilities of multimodal large language models (MLLMs) in multimodal retrieval scenarios. It measures how well MLLMs use knowledge from retrieved multimodal documents in tasks such as image captioning, multimodal question answering, fact verification, and image re-ranking. The benchmark is described in the paper "Benchmarking Retrieval-Augmented Generation in Multi-Modal Contexts".

The dataset combines image and text data to simulate information retrieval and generation tasks in realistic scenarios, such as news event analysis and visual question answering. It focuses on how well MLLMs use retrieved document knowledge in multimodal contexts, including understanding image content, reasoning over image-text associations, and judging the truth of factual claims.
