TacQuad Multimodal Multisensor Tactile Dataset

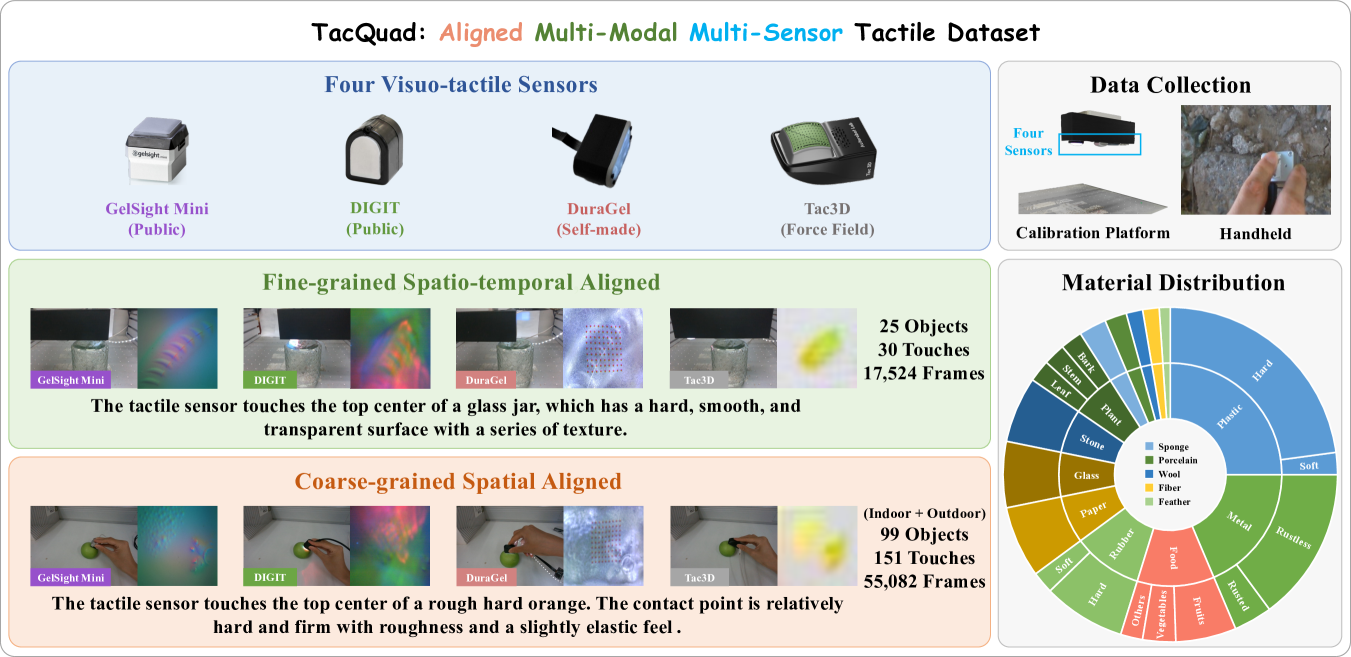

TacQuad is an aligned multimodal, multi-sensor tactile dataset collected with four types of visuo-tactile sensors (GelSight Mini, DIGIT, DuraGel and Tac3D). It was released in 2025 by a research team from Renmin University of China, Wuhan University of Science and Technology and Beijing University of Posts and Telecommunications. The accompanying paper is "AnyTouch: Learning Unified Static-Dynamic Representation across Multiple Visuo-tactile Sensors".

TacQuad addresses the low standardization of visuo-tactile sensors by providing multi-sensor aligned data paired with text and visual images. This pairing explicitly enables a model to learn semantic-level tactile properties and sensor-independent features, and thereby to form a unified multi-sensor representation space through a data-driven approach. The dataset includes two paired subsets with different levels of alignment:

- Fine-grained spatiotemporally aligned data: Collected by having the four sensors press the same position on the same object at the same speed. This subset contains 17,524 contact frames from 25 objects and can be used for fine-grained tasks such as cross-sensor generation.

- Coarse-grained spatially aligned data: Collected by hand, with the four sensors pressing the same location on the same object; temporal alignment is not guaranteed. This subset contains 55,082 contact frames from 99 objects, covers both indoor and outdoor scenes, and can be used for cross-sensor matching tasks.
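The two subsets above can be summarized in a small data structure. This is only an illustrative sketch: the class `Subset`, the names `fine_grained` and `coarse_grained`, and the helper `subsets_with_alignment` are hypothetical and do not reflect the dataset's actual file layout or any official loader. Only the sensor names, frame counts, and object counts come from the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subset:
    """Summary of one TacQuad subset (hypothetical structure, not an official API)."""
    name: str
    spatially_aligned: bool
    temporally_aligned: bool
    contact_frames: int
    objects: int


# Sensor names as listed in the dataset description.
SENSORS = ("GelSight Mini", "DIGIT", "DuraGel", "Tac3D")

# Counts taken from the description; the subset names are made up for this sketch.
SUBSETS = (
    Subset("fine_grained", True, True, 17_524, 25),
    Subset("coarse_grained", True, False, 55_082, 99),
)


def subsets_with_alignment(needs_temporal_alignment: bool) -> list[Subset]:
    """Return the subsets that satisfy the requested alignment requirement."""
    return [
        s for s in SUBSETS
        if s.temporally_aligned or not needs_temporal_alignment
    ]


# Total number of contact frames across both subsets.
total_frames = sum(s.contact_frames for s in SUBSETS)

if __name__ == "__main__":
    print(f"{len(SENSORS)} sensors, {total_frames} contact frames in total")
    for s in subsets_with_alignment(needs_temporal_alignment=True):
        print(f"temporally aligned subset: {s.name} ({s.contact_frames} frames)")
```

Filtering by alignment requirement rather than by task name keeps the sketch neutral about which tasks each subset may be used for; the description only gives cross-sensor generation and cross-sensor matching as examples.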
