GAIA Vision-Language Dataset for Remote Sensing Image Understanding

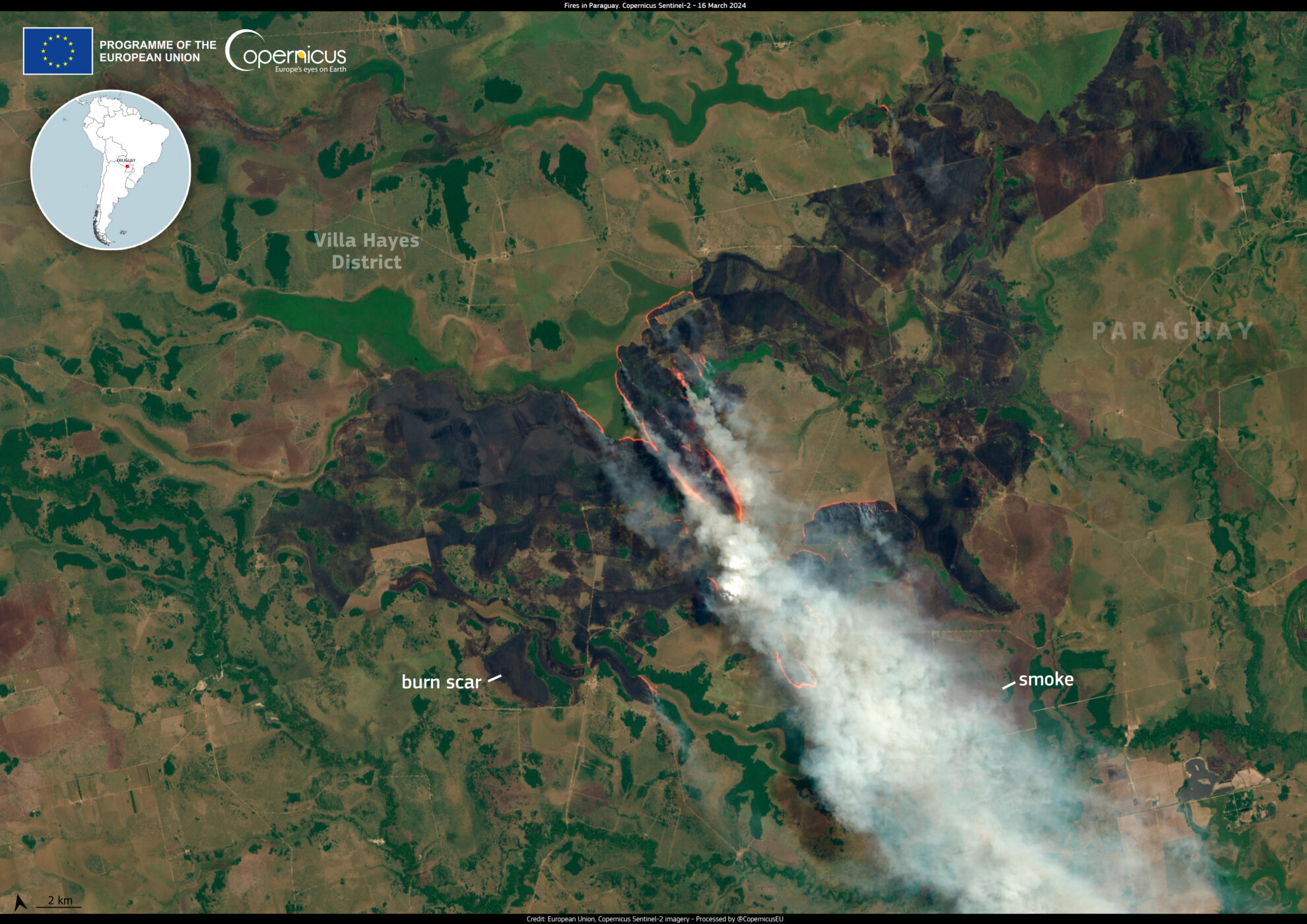

GAIA is a global, multimodal, multiscale vision-language dataset for remote sensing image analysis that aims to bridge the gap between remote sensing (RS) imagery and natural language understanding. It was published in 2025 by researchers from the National Technical University of Athens, Harokopio University of Athens, and Technical University of Munich, in the paper "GAIA: A Global, Multi-modal, Multi-scale Vision-Language Dataset for Remote Sensing Image Analysis". It provides 205,150 image-text pairs (41,030 images, each with 5 synthetic descriptions) to advance the development of remote sensing-specific vision-language models (VLMs). The dataset spans 25 years of Earth observation data (1998-2024) across a diverse range of geographic areas, satellite missions, and remote sensing modalities.

Dataset structure

GAIA is partitioned into training (70%), test (20%), and validation (10%) sets that are stratified in time and space. The partitions are provided as JSON files compatible with the img2dataset tool, so researchers can download the images themselves and reconstruct the dataset for research purposes.
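As a rough illustration of how a split file might be handed to img2dataset, the sketch below assembles keyword arguments for `img2dataset.download`. The file name `gaia_train.json` and the column names `url` and `caption` are assumptions made for this example, not names confirmed by the dataset; check the actual keys in the JSON files before use.

```python
from pathlib import Path


def build_img2dataset_kwargs(split_json, output_folder,
                             url_col="url", caption_col="caption"):
    """Assemble keyword arguments for img2dataset.download.

    The default column names are hypothetical; replace them with the
    keys actually present in the GAIA split files.
    """
    return {
        "url_list": str(split_json),      # path to one split's JSON file
        "input_format": "json",           # the splits are distributed as JSON
        "url_col": url_col,               # column holding the image URL
        "caption_col": caption_col,       # column holding the text description
        "output_format": "files",         # write images and captions as plain files
        "output_folder": str(output_folder),
        "processes_count": 1,             # conservative defaults for a first run
        "thread_count": 16,
    }


# Hypothetical file and folder names, used only for illustration.
kwargs = build_img2dataset_kwargs(Path("gaia_train.json"), Path("gaia_train_images"))
print(kwargs["input_format"], kwargs["url_col"], kwargs["caption_col"])
```

With img2dataset installed, passing the result on as `img2dataset.download(**kwargs)` would start the download for that split; the same helper can be reused for the test and validation files.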

Each entry combines web-scraped data (e.g., image source, image description, copyright notice), extracted data (e.g., location, tags, resolution, satellite, sensor, modality), and synthetically generated data (e.g., latitude, longitude, description).
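The three kinds of fields can be pictured with a small sketch that groups an entry's keys by where they came from. The key names below (such as `image_source` or `copyright_notice`) are hypothetical spellings of the fields listed above, and the example entry holds placeholder values rather than real GAIA data.

```python
# Hypothetical key names derived from the field list above; the real
# JSON files may spell or nest these differently.
PROVENANCE = {
    "web_scraped": ("image_source", "image_description", "copyright_notice"),
    "extracted": ("location", "tags", "resolution", "satellite", "sensor", "modality"),
    "synthetic": ("latitude", "longitude", "description"),
}


def group_by_provenance(entry):
    """Split one entry's fields into web-scraped, extracted, and synthetic groups."""
    grouped = {group: {} for group in PROVENANCE}
    for group, keys in PROVENANCE.items():
        for key in keys:
            if key in entry:  # tolerate entries that lack some fields
                grouped[group][key] = entry[key]
    return grouped


# Placeholder entry for illustration only, not taken from the dataset.
example_entry = {
    "image_source": "https://example.com/image.jpg",
    "copyright_notice": "placeholder notice",
    "satellite": "placeholder satellite",
    "modality": "placeholder modality",
    "latitude": 0.0,
    "longitude": 0.0,
    "description": "placeholder synthetic description",
}

grouped = group_by_provenance(example_entry)
for group, fields in grouped.items():
    print(group, sorted(fields))
```

Keeping the provenance explicit like this makes it easy to, for example, train only on the synthetic descriptions while retaining the scraped copyright notice for attribution.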
