Command Palette

Search for a command to run...

MORE Multimodal Object-Entity Relation Extraction Dataset

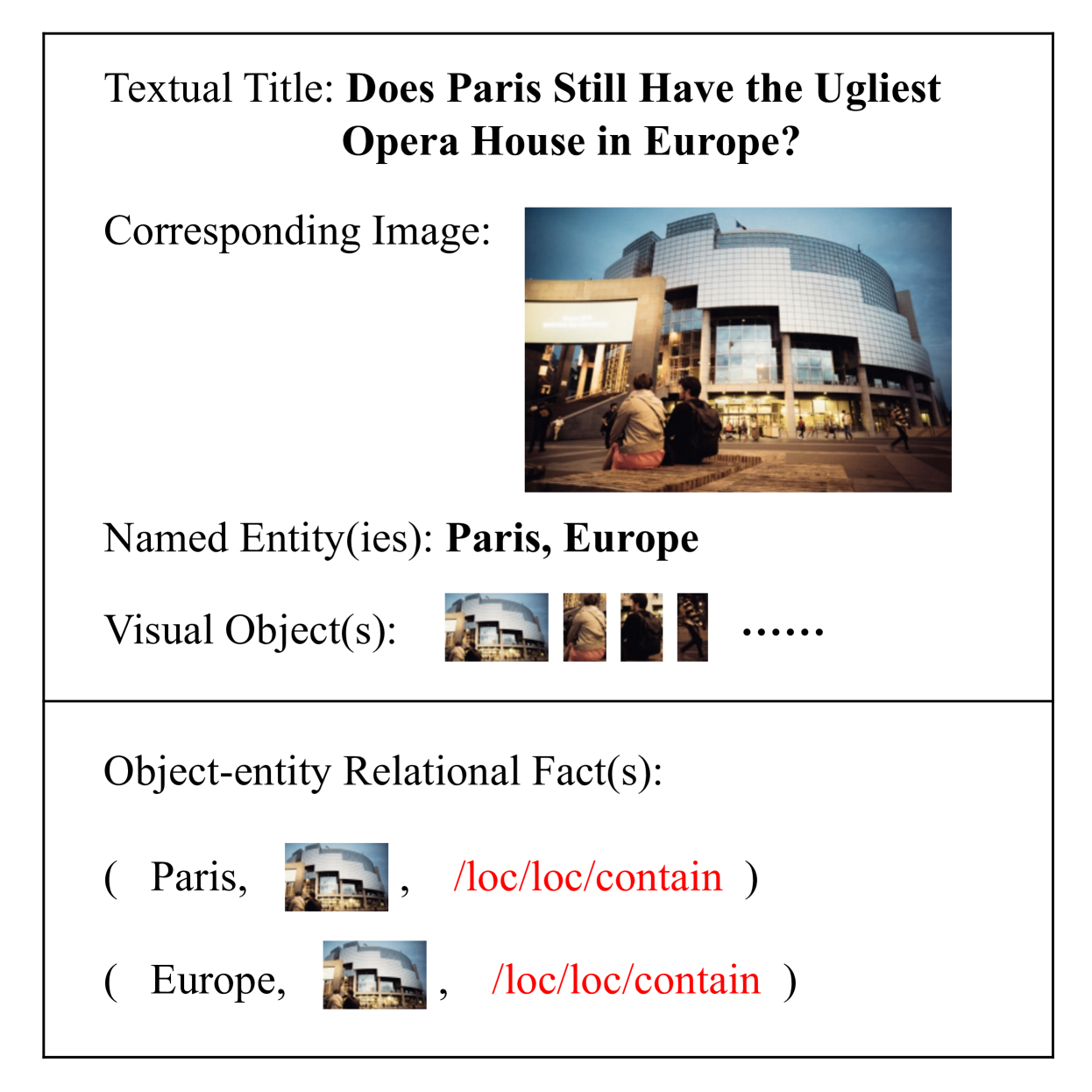

MORE (A Multimodal Object-Entity Relation Extraction Dataset) is a multimodal object-entity relationship extraction dataset proposed by the National Key Laboratory of Nanjing University in 2024. The related paper results are "MORE: A Multimodal Object-Entity Relation Extraction Dataset with a Benchmark Evaluation".

This dataset combines text and image information to provide a complex challenge for machine learning models, namely how to accurately extract entities from text and establish correct relationships with visual objects in images. The MORE dataset contains 21 different relationship types, covering 20,264 multimodal relationship facts, which are annotated on 3,559 pairs of text captions and corresponding images. Each fact in the dataset involves entities identified from text and objects detected from images, which requires the model to not only understand the text content, but also be able to recognize and understand the image content. In addition, the dataset contains 13,520 visual objects, with an average of 3.8 objects per image.

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.