Command Palette

Search for a command to run...

MMEvalPro Multimodal Benchmark Evaluation Dataset

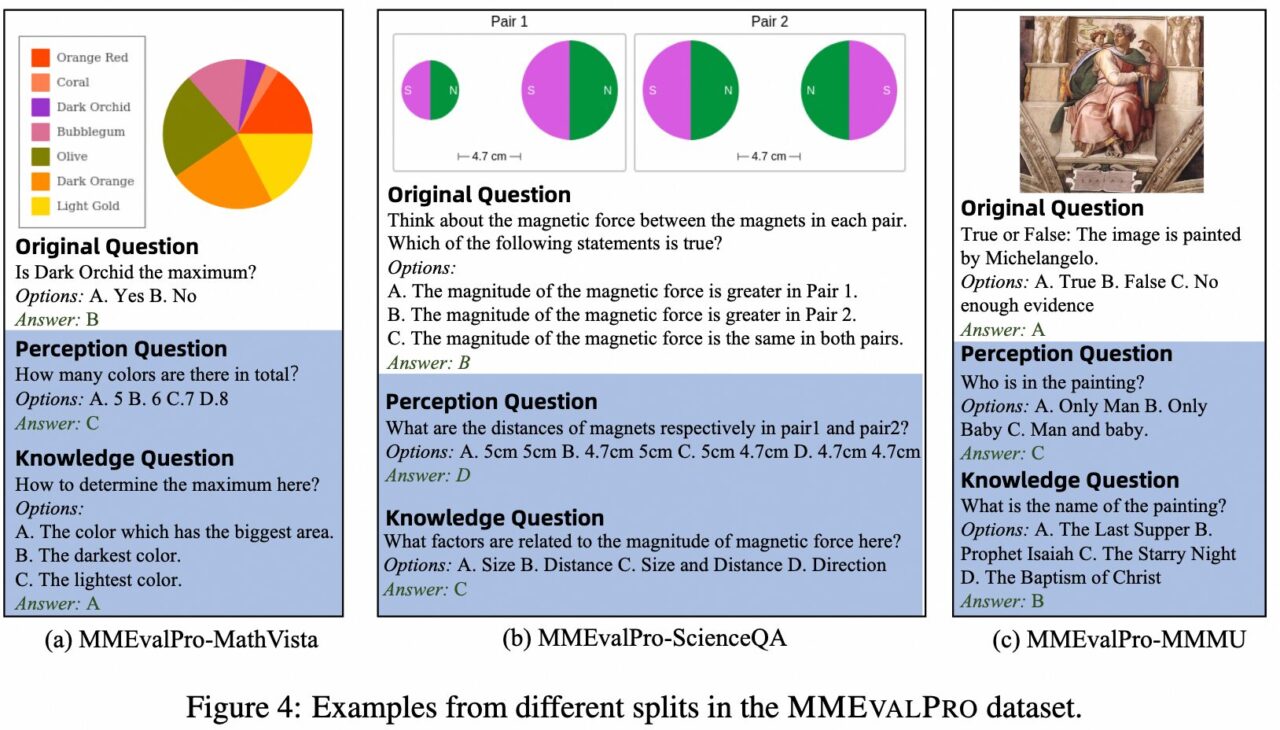

MMEvalPro is a multimodal large model (LMMs) evaluation benchmark proposed in 2024 by a research team from Peking University, the Chinese Academy of Medical Sciences, the Chinese University of Hong Kong, and Alibaba. It aims to provide a more reliable and efficient evaluation method and solve the problems existing in existing multimodal evaluation benchmarks. Existing benchmarks have systematic biases when evaluating LMMs. Even large language models (LLMs) without visual perception can achieve non-trivial performance on these benchmarks, which weakens the credibility of these evaluations. MMEvalPro improves on existing evaluation methods by adding two "anchor" questions (a perception question and a knowledge question), forming a "question triplet" that tests different aspects of the model's multimodal understanding.

MEvalPro's main evaluation metric is "Genuine Accuracy", which requires that the model must correctly answer all questions in a triple to receive a score. This process includes multiple stages of review and quality checks to ensure that the questions are clear, relevant, and challenging. The final benchmark contains 2,138 question triplets, a total of 6,414 different questions covering different topics and difficulty levels.

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.