
MedTrinity-25M Large-scale Multimodal Medical Dataset

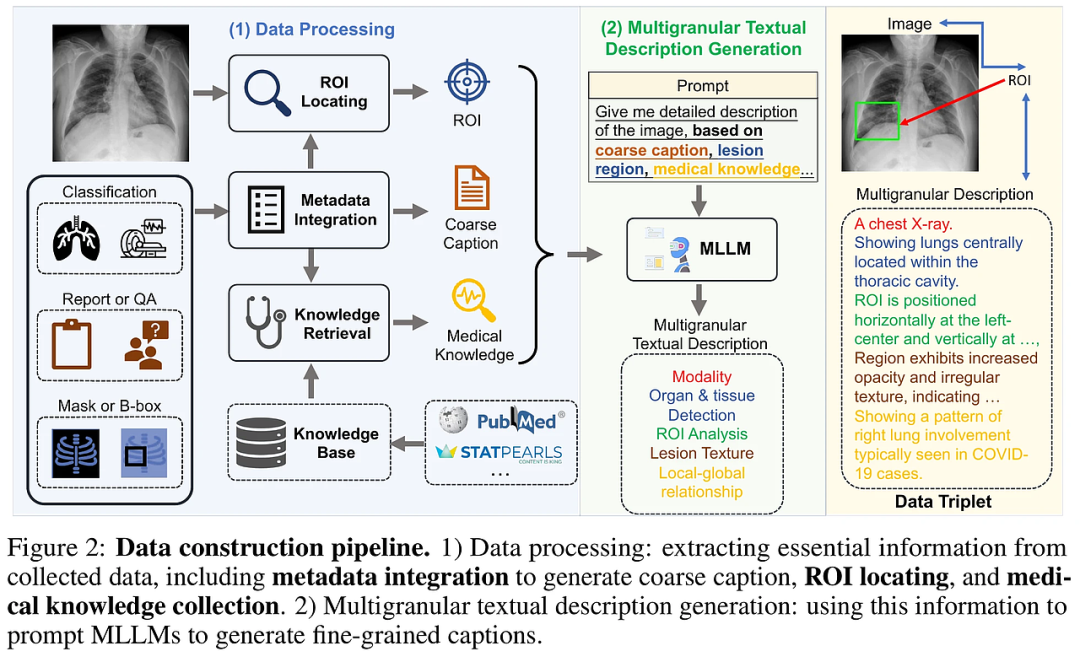

This large-scale multimodal medical dataset was released in 2024 by research teams from Huazhong University of Science and Technology, the University of California, Santa Cruz, Harvard University, and Stanford University. It is described in the paper "MedTrinity-25M: A Large-scale Multimodal Dataset with Multigranular Annotations for Medicine".

MedTrinity-25M contains more than 25 million medical images spanning 10 imaging modalities, with annotations for more than 65 diseases. Beyond rich global and local annotations, it provides multigranular annotations across modalities such as CT, MRI, and X-ray. These annotations cover the disease or lesion type, the imaging modality, region-specific descriptions, and relationships between organs.

To build the dataset, the research team preprocessed and integrated data from more than 90 sources and developed an automated data construction pipeline that generates multigranular visual and textual annotations. Unlike traditional approaches, this pipeline does not depend on paired images and text, so annotations can be generated automatically. The dataset supports multimodal tasks such as medical image processing, report generation, classification, and segmentation, and can serve as pre-training data for medical AI models.
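To make the annotation attributes listed above concrete, here is a minimal Python sketch of how one annotated image might be represented in memory and filtered by modality. The class `MultigranularAnnotation`, its field names, the helper `filter_by_modality`, and the sample records are all hypothetical illustrations chosen for this sketch; they are not the official MedTrinity-25M schema or loading API.

```python
from dataclasses import dataclass, field


@dataclass
class MultigranularAnnotation:
    """Hypothetical record for one annotated image (NOT the official schema)."""
    image_id: str
    modality: str                 # imaging modality, e.g. "CT", "MRI", "X-ray"
    disease_or_lesion: str        # disease or lesion type
    global_description: str       # image-level (global) annotation text
    region_description: str       # region-specific (local) annotation text
    organ_relations: list[str] = field(default_factory=list)  # organ relationships


def filter_by_modality(records, modality):
    """Return the records whose modality matches `modality`, ignoring case."""
    target = modality.lower()
    return [r for r in records if r.modality.lower() == target]


if __name__ == "__main__":
    # Toy records for illustration only; not taken from the dataset.
    records = [
        MultigranularAnnotation(
            image_id="img_0001",
            modality="CT",
            disease_or_lesion="example lesion",
            global_description="Chest CT, axial view.",
            region_description="Opacity in the lower right lung.",
            organ_relations=["lesion lies adjacent to the diaphragm"],
        ),
        MultigranularAnnotation(
            image_id="img_0002",
            modality="MRI",
            disease_or_lesion="example mass",
            global_description="Brain MRI, axial view.",
            region_description="Mass in the left frontal lobe.",
        ),
    ]
    ct_only = filter_by_modality(records, "ct")
    print([r.image_id for r in ct_only])  # ['img_0001']
```

A dataclass keeps the global annotation, the local annotation, and the organ relationships for one image together in a single typed record; an actual loader would need to follow whatever format the dataset is distributed in.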
