
Multi-Modal Self-Instruct: A Multimodal Benchmark Dataset


License

CC BY-SA 4.0


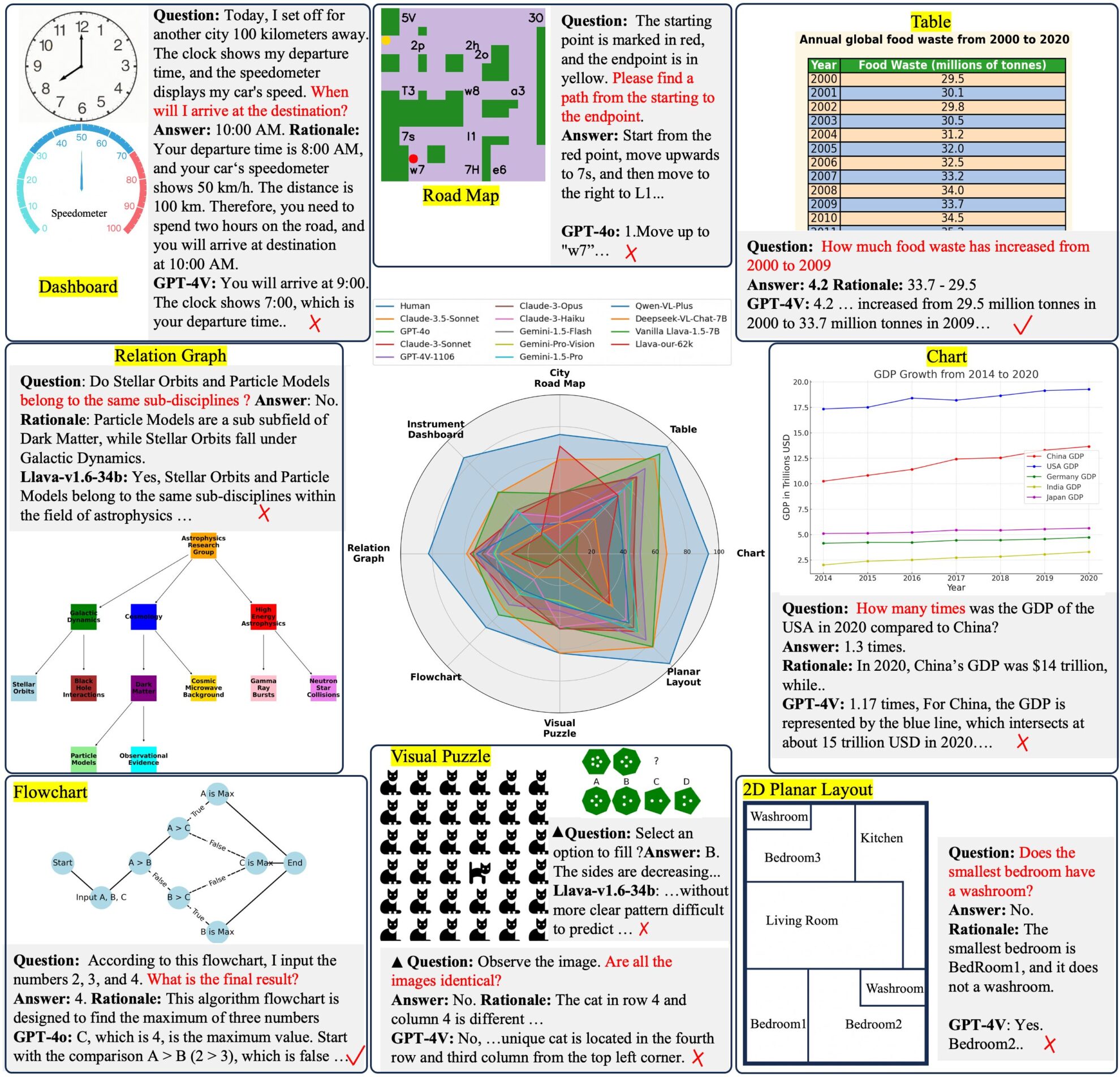

This dataset was jointly released in 2024 by Zhejiang University, the Institute of Software of the Chinese Academy of Sciences, ShanghaiTech University, and other institutions. The accompanying paper is "Multimodal Self-Instruct: Synthetic Abstract Image and Visual Reasoning Instruction Using Language Model".

The dataset contains 11,193 abstract images with associated questions, spanning 8 categories: dashboards, road maps, charts, tables, flowcharts, relationship diagrams, visual puzzles, and 2D floor plans. It also provides an additional 62,476 samples for fine-tuning models.
