EgoExoLearn Cross-perspective Skill Learning Dataset

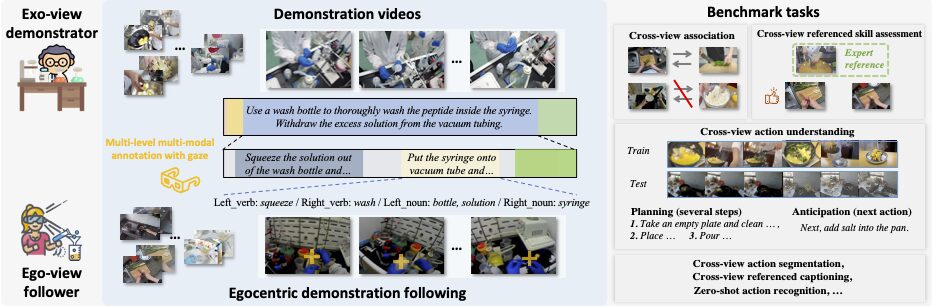

The dataset was jointly released by Shanghai Artificial Intelligence Laboratory, Nanjing University, and the Shenzhen Institutes of Advanced Technology of the Chinese Academy of Sciences, together with students and researchers from universities including the University of Tokyo, Fudan University, Zhejiang University, and the University of Science and Technology of China. EgoExoLearn aims to give robots the ability to learn new actions by observing others.

What sets EgoExoLearn apart is that it pairs video from two perspectives: third-person videos show a demonstration, and first-person videos record the entire process of people learning to perform the demonstrated actions. Linking the two perspectives in this way gives machines data for imitating how humans learn by watching.

The dataset covers both everyday scenarios and complex procedures in professional laboratories. EgoExoLearn contains a total of 120 hours of first-person and demonstration videos, so that models can be trained and evaluated across a variety of environments.

In addition to the videos, the researchers recorded high-quality gaze data and provided detailed multimodal annotations. Together, the data and annotations form a platform that emulates the human learning process and supports work on modeling asynchronous action sequences seen from different perspectives.

To evaluate the dataset, the researchers proposed a series of benchmarks, including cross-perspective association, cross-perspective action planning, and cross-perspective referenced skill assessment, and analyzed the results in depth. The researchers expect EgoExoLearn to serve as a foundation for bridging actions across perspectives, supporting robots that learn human behavior in the real world.
