Command Palette

Search for a command to run...

Inferix: Ein block-basierter, next-generation Inferenz-Engine für Weltsimulation

Inferix: Ein block-basierter, next-generation Inferenz-Engine für Weltsimulation

Zusammenfassung

Weltmodelle dienen als zentrale Simulatoren für Bereiche wie agente AI, embodied AI und Gaming und sind in der Lage, lange, physikalisch realistische und interaktive Videos von hoher Qualität zu generieren. Darüber hinaus könnten skalierte Versionen dieser Modelle emergente Fähigkeiten in visueller Wahrnehmung, Verständnis und Schlussfolgerung freisetzen und den Weg für eine neue Paradigmenverschiebung ebnen, die jenseits der derzeit dominierenden, auf LLMs basierenden Vision-Grundmodelle liegt. Ein entscheidender Durchbruch, der diese Modelle befähigt, ist das semi-autoregressive (block-diffusion) Decodierparadigma, das die Stärken von Diffusions- und autoregressiven Methoden vereint, indem es Video-Tokens in Blöcken durch Anwendung von Diffusion generiert, wobei jeweils auf vorherige Blöcke konditioniert wird. Dies führt zu kohärenteren und stabileren Video-Sequenzen. Insbesondere überwindet es die Beschränkungen herkömmlicher Video-Diffusionsmodelle, indem es die KV-Cache-Verwaltung im Stil von LLMs wieder einführt, was eine effiziente, variabel lange und qualitativ hochwertige Generierung ermöglicht.Daher wurde Inferix speziell als eine nächste Generation von Inferenz-Engines konzipiert, um durch optimierte semi-autoregressive Decodierprozesse immersives Welt-Synthese zu ermöglichen. Dieser fokussierte Ansatz auf Welt-Simulation unterscheidet Inferix deutlich von Systemen, die für hochgradig konkurrierende Szenarien (wie vLLM oder SGLang) entwickelt wurden, sowie von klassischen Video-Diffusionsmodellen (wie xDiTs). Inferix erweitert sein Angebot zudem um interaktives Video-Streaming und Profiling, was Echtzeit-Interaktion und realistische Simulation ermöglicht, um Welt-Dynamiken präzise zu modellieren. Zudem unterstützt es eine effiziente Benchmarking-Integration durch nahtlose Verknüpfung mit LV-Bench, einem neuen, fein granulären Evaluierungsbenchmark, der speziell für Szenarien der Minutenlangen Video-Generierung konzipiert ist. Wir hoffen, dass die Gemeinschaft gemeinsam an der Weiterentwicklung von Inferix arbeitet und die Erforschung von Weltmodellen voranbringt.

Summarization

The Inferix Team introduces Inferix, a next-generation inference engine for immersive world synthesis that employs optimized semi-autoregressive block-diffusion decoding and LLM-style KV Cache management to enable efficient, interactive, and long-form video generation distinct from standard high-concurrency or classic diffusion systems.

Introduction

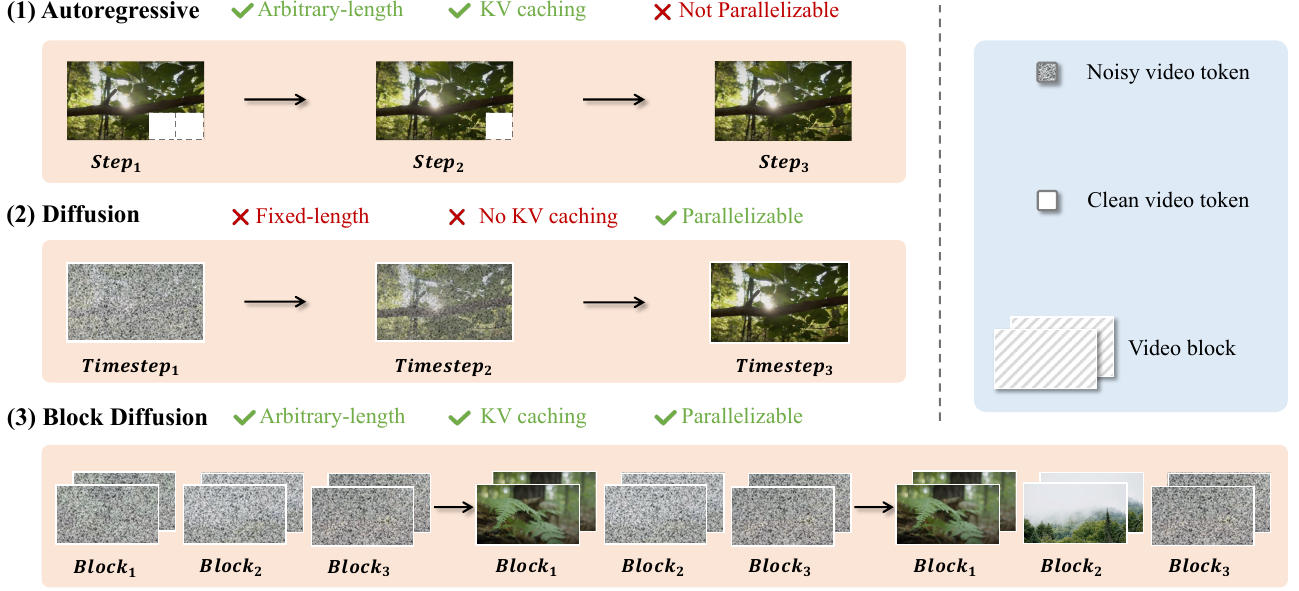

World models are rapidly advancing toward generating long-form, interactive video sequences, creating a critical need for specialized infrastructure capable of handling the immense computational and storage demands of immersive world synthesis. Current approaches face a distinct trade-off: Diffusion Transformers (DiT) offer high-quality, parallelized generation but suffer from inefficient decoding and fixed-length constraints, while Autoregressive (AR) models support variable lengths but often lag in visual quality and lack parallelization capabilities.

To bridge this gap, the authors introduce Inferix, a dedicated inference engine designed to enable efficient, arbitrary-length video generation. By adopting a "block diffusion" framework, the system interpolates between AR and diffusion paradigms, utilizing a semi-autoregressive decoding strategy that reintroduces LLM-style memory management to maintain high generation quality over extended sequences.

Key innovations include:

- Semi-Autoregressive Block Diffusion: The engine employs a generate-and-cache loop where attention mechanisms leverage a global KV cache to maintain context across generated blocks, ensuring long-range coherence without sacrificing diffusion quality.

- Advanced Memory Management: To address the storage bottlenecks of long-context simulation, the system integrates intelligent KV cache optimization techniques similar to PageAttention to minimize GPU memory usage.

- Scalable Production Features: The framework supports distributed synthesis for large-scale environments, continuous prompting for dynamic narrative control, and built-in real-time video streaming protocols.

Dataset

The authors introduce LV-Bench, a benchmark designed to address the challenges of generating minute-long videos. The dataset construction and usage involve the following components:

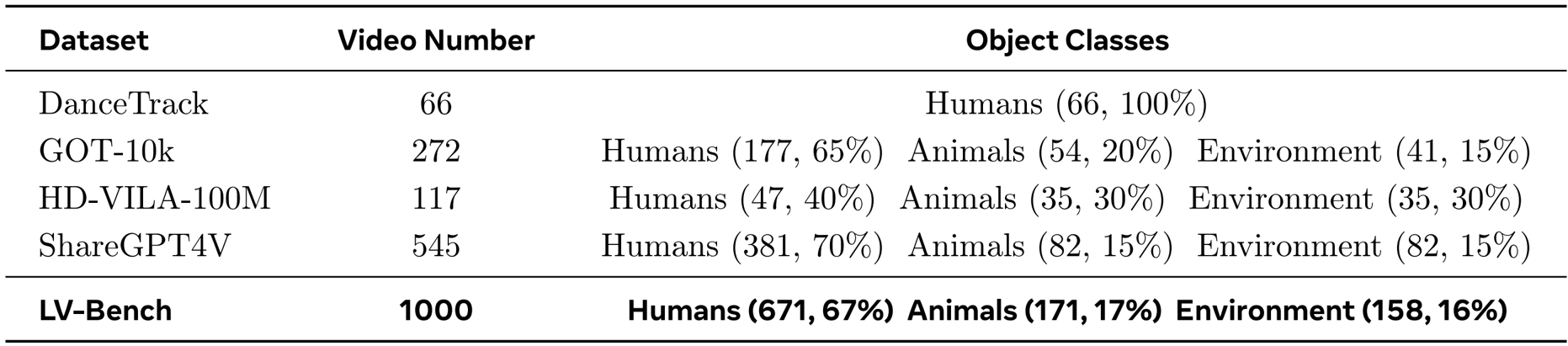

- Composition and Sources: The benchmark comprises 1,000 long-form videos collected from diverse open-source collections, specifically DanceTrack, GOT-10k, HD-VILA-100M, and ShareGPT4V.

- Selection Criteria: The team prioritized high-resolution content, strictly selecting videos that exceed 50 seconds in duration.

- Metadata Construction: To ensure linguistic diversity and temporal detail, the authors utilized GPT-4o as a data engine to generate granular captions every 2 to 3 seconds.

- Quality Assurance: A rigorous human-in-the-loop validation framework was applied across three stages: sourcing (filtering unsuitable clips), chunk segmentation (ensuring coherence and removing artifacts), and caption verification (refining AI-generated text). At least two independent reviewers validated each stage to maintain reliability.

- Model Usage: The final curated dataset is partitioned into an 80/20 split for training and evaluation purposes.

Method

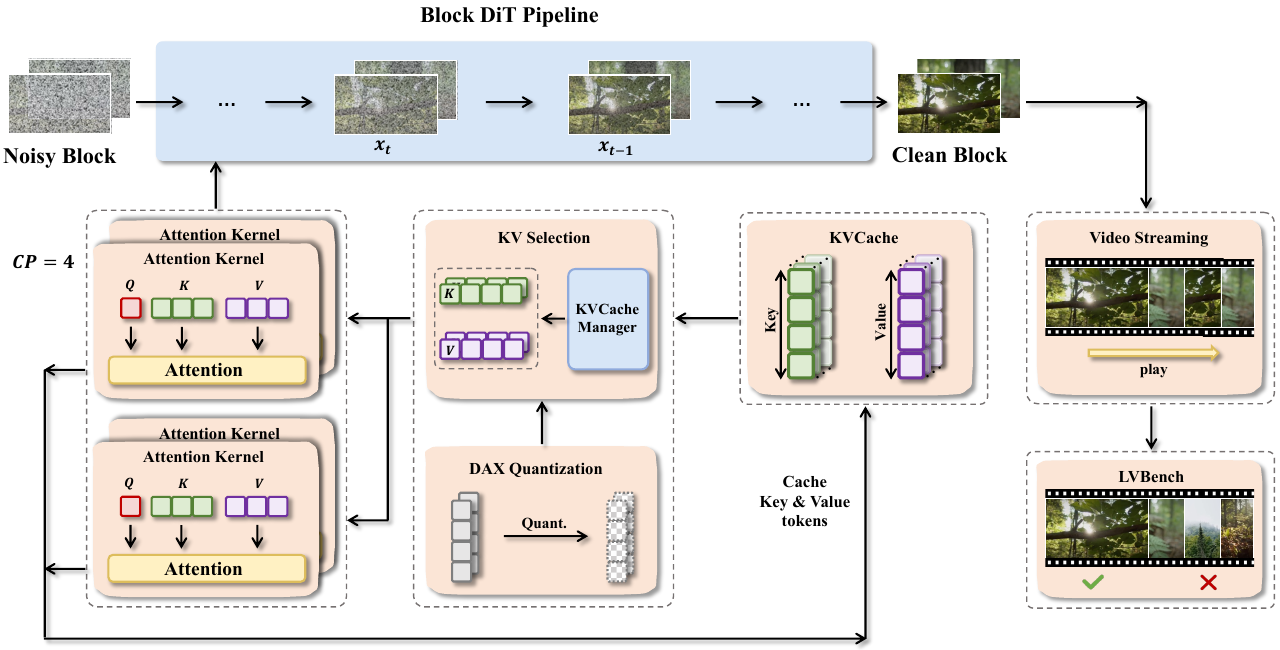

The authors leverage a modular and extensible framework designed to address the unique challenges of block diffusion models for long video generation. The core of the system, as illustrated in the framework diagram, is built around a generalized inference pipeline that abstracts common computational patterns across diverse models such as MAGI-1, CausVid, and Self Forcing. This pipeline orchestrates a sequence of interconnected components to enable efficient and scalable inference.

At the heart of the inference process is the Block DiT Pipeline, which processes video in discrete blocks. Each block undergoes a diffusion-based denoising process, where a noisy block is iteratively refined into a clean block. This process relies on attention mechanisms that require access to key-value (KV) pairs from previous steps. To manage these KV pairs efficiently, the framework employs a block-wise KV memory management system. This system supports flexible access patterns, including range-based chunked access and index-based selective fetch, ensuring scalability and extensibility for future model variants that may require sliding-window or selective global context.

To accelerate computation and reduce memory pressure, the framework integrates a suite of parallelism techniques. Ulysses-style sequence parallelism partitions independent attention heads across multiple GPUs, while Ring Attention distributes attention operations in a ring topology, enabling scalable computation over long sequences. The choice between these strategies is adaptive, based on model architecture and communication overhead, ensuring optimal resource utilization. The framework also incorporates DAX quantization, which reduces the precision of KV cache tokens to minimize memory footprint without significant loss in quality.

The system further supports real-time video streaming, allowing dynamic control over narrative generation through user-provided signals such as prompts or motion inputs. When a new prompt is introduced for a subsequent video chunk, the framework clears the cross-attention cache to prevent interference from prior contexts, ensuring coherent and prompt-aligned generation. This capability is complemented by a built-in performance profiler that provides near-zero-overhead, customizable, and easy-to-use instrumentation for monitoring resource utilization during inference.

Experiment

- Introduces Video Drift Error (VDE), a unified metric inspired by MAPE, designed to quantify relative quality changes and temporal degradation in long-form video generation.

- Establishes five specific VDE variants (Clarity, Motion, Aesthetic, Background, and Subject) to assess drift across different visual and dynamic aspects, where lower scores indicate stronger temporal consistency.

- Integrates these drift metrics with five complementary quality dimensions from VBench (Subject Consistency, Background Consistency, Motion Smoothness, Aesthetic Quality, and Image Quality) to form a comprehensive evaluation protocol.

The authors use the LV-Bench dataset, which contains 1000 videos and is composed of 671 human instances, 171 animal instances, and 158 environment instances, to evaluate long-form video generation. Results show that the dataset spans diverse object classes and video counts, providing a comprehensive benchmark for assessing temporal consistency and visual quality in long-horizon video generation.