Command Palette

Search for a command to run...

一键部署 GLM-4.1V-9B-Thinking

一、教程简介

GLM-4.1V-9B-Thinking 是由智谱 AI 联合清华大学团队于 2025 年 7 月 2 日发布的开源视觉语言模型,专为复杂认知任务设计,支持图像、视频、文档等多模态输入。基于 GLM-4-9B-0414 基座模型,GLM-4.1V-9B-Thinking,引入思考范式,通过课程采样强化学习 RLCS(Reinforcement Learning with Curriculum Sampling)全面提升模型能力,达到 10B 参数级别的视觉语言模型的最强性能,在 18 个榜单任务中持平甚至超过 8 倍参数量的 Qwen-2.5-VL-72B 。相关论文成果为 GLM-4.1V-Thinking: Towards Versatile Multimodal Reasoning with Scalable Reinforcement Learning 。

该教程算力资源采用单卡 RTX A6000,本教程支持文本对话,图片、视频、 PDF 、 PPT 理解。

二、效果展示

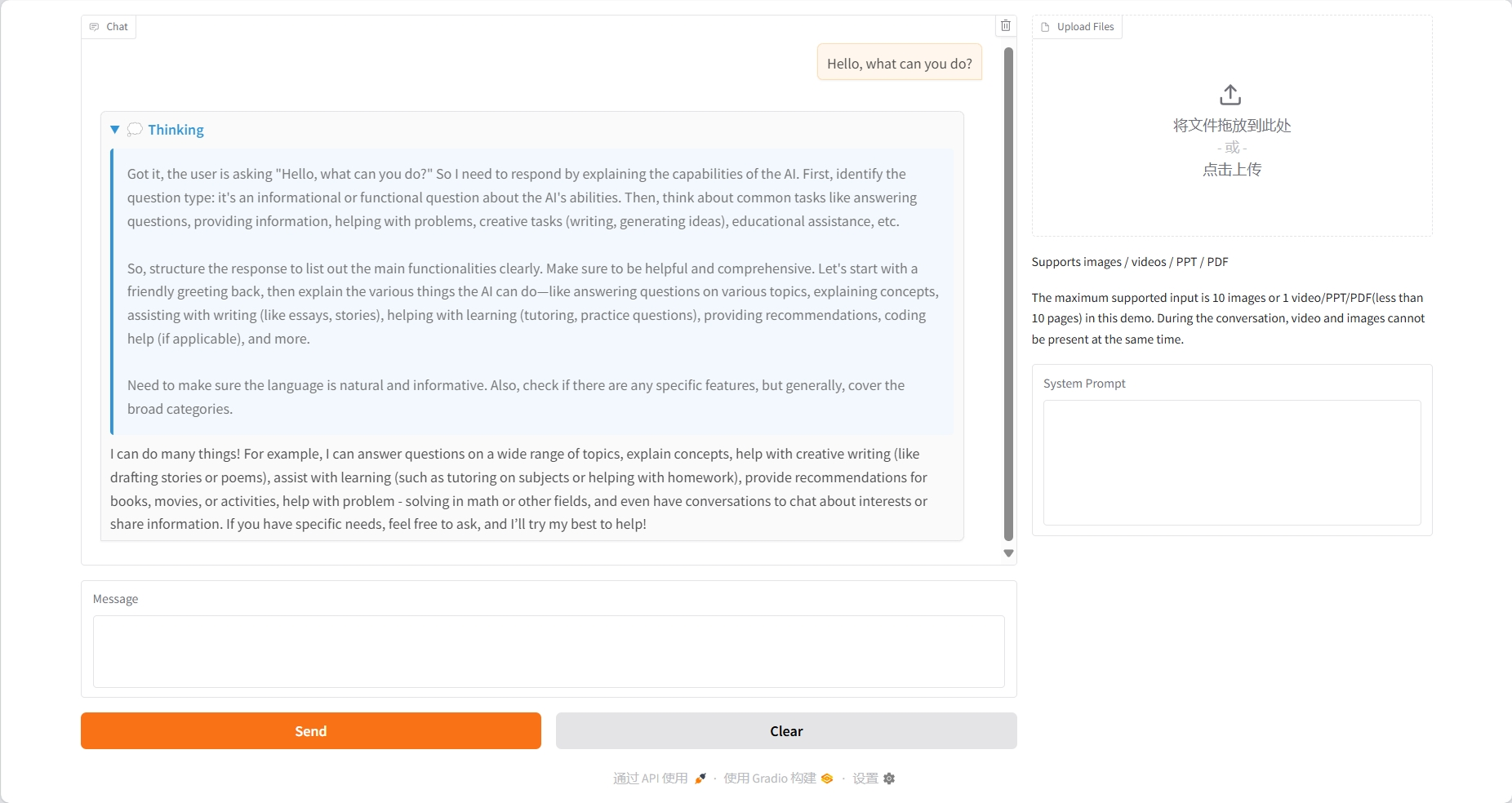

文本对话

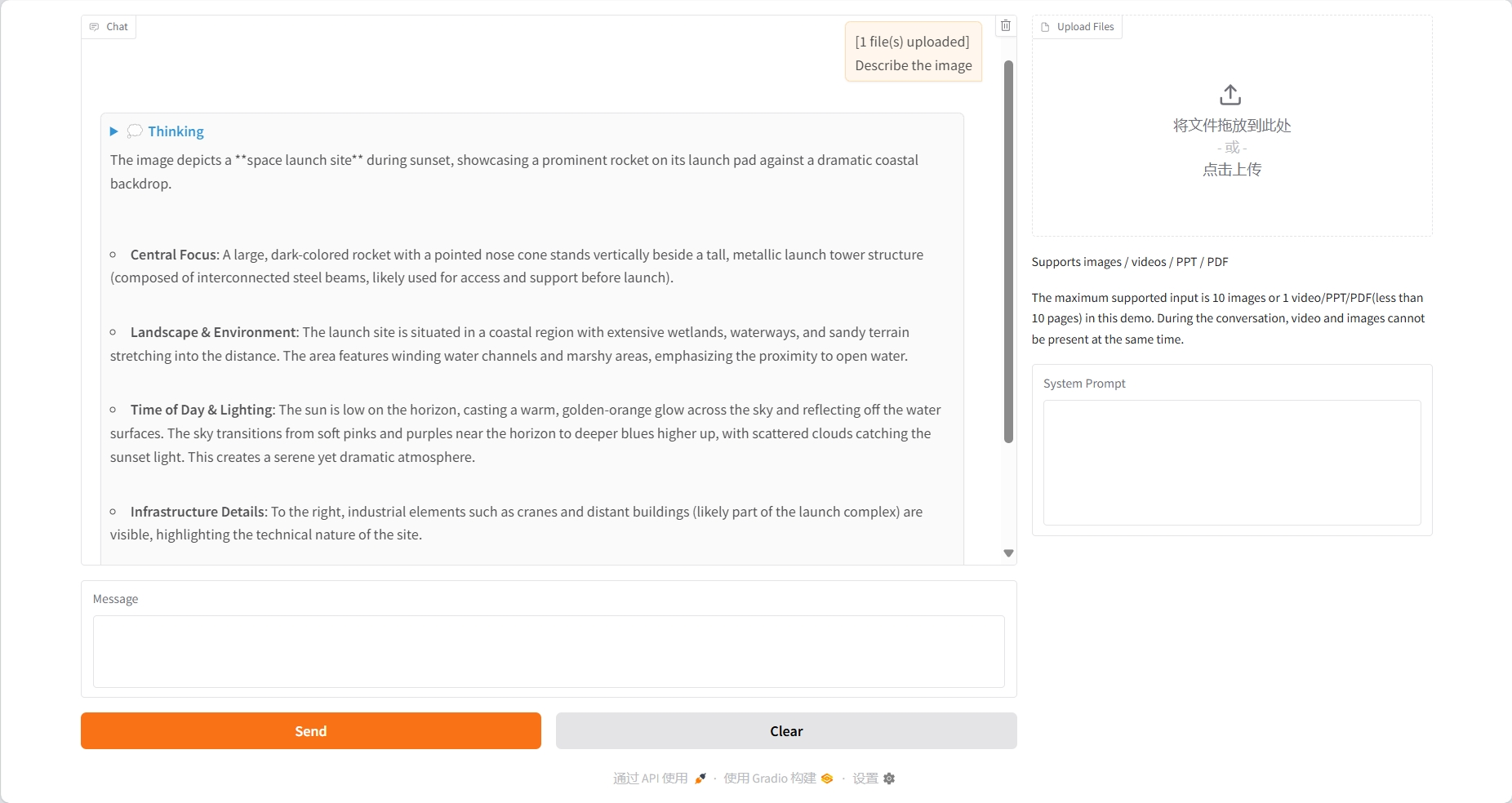

图片理解

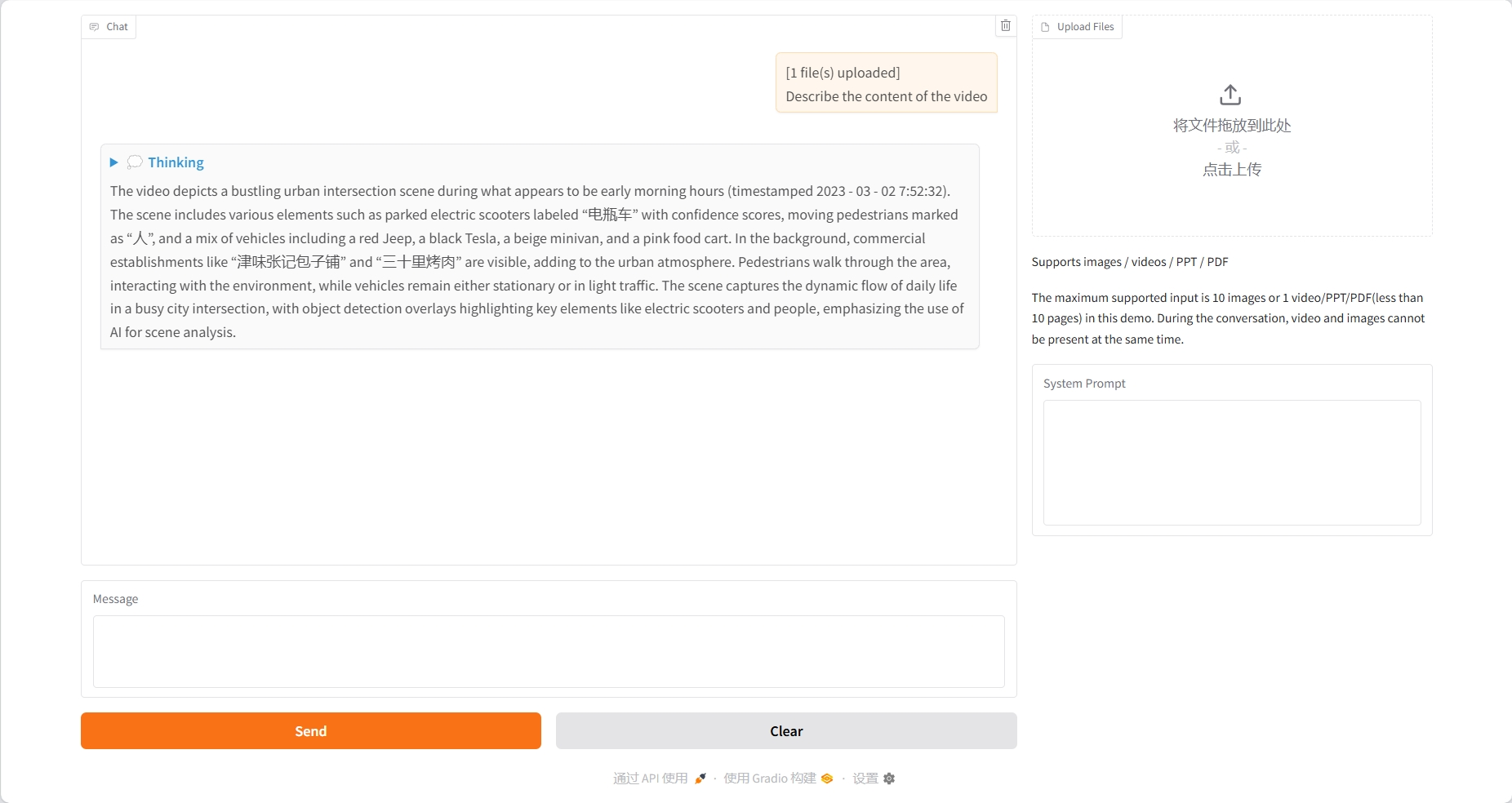

视频理解

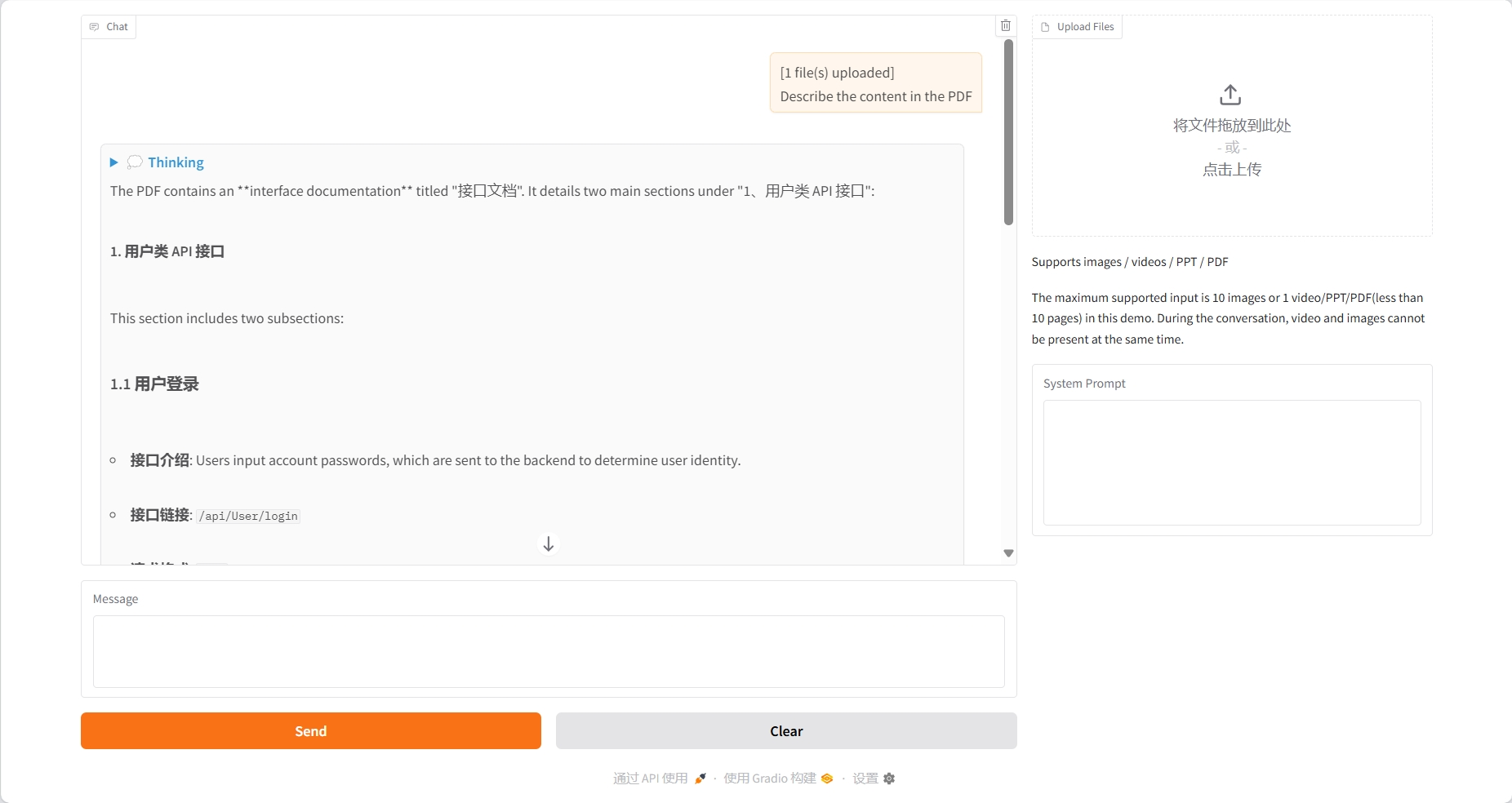

PDF 理解

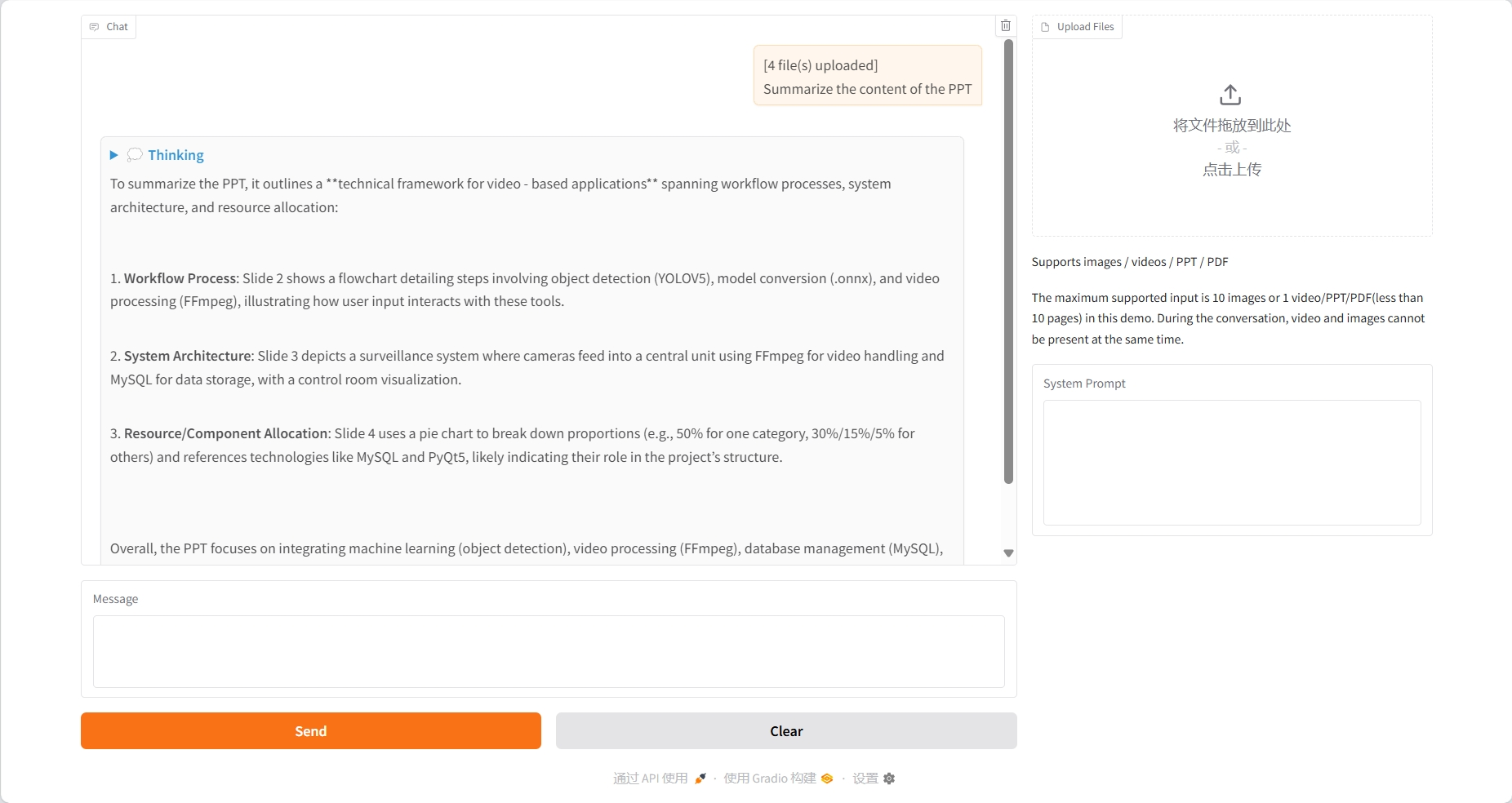

PPT 理解

三、运行步骤

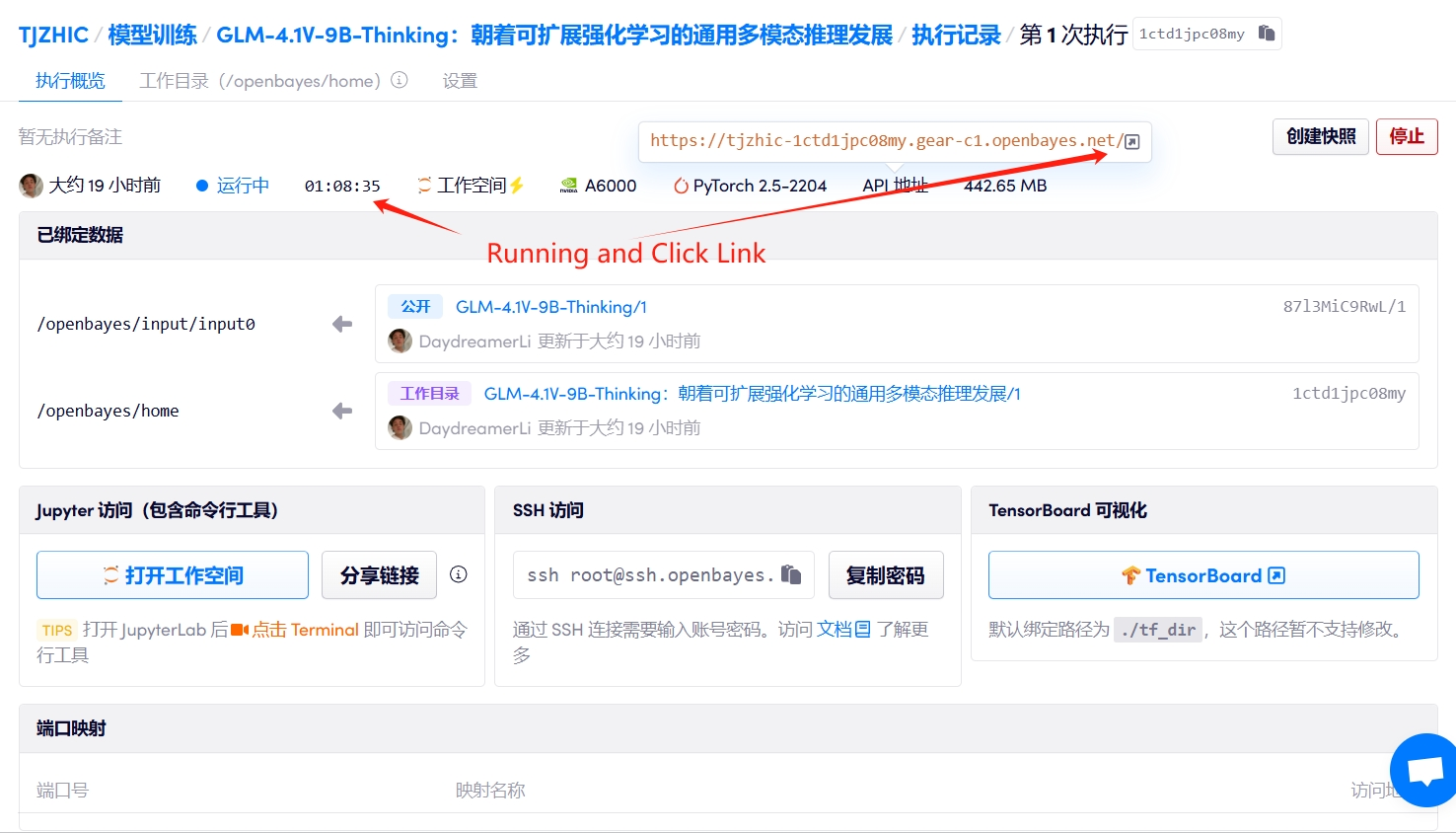

1. 启动容器

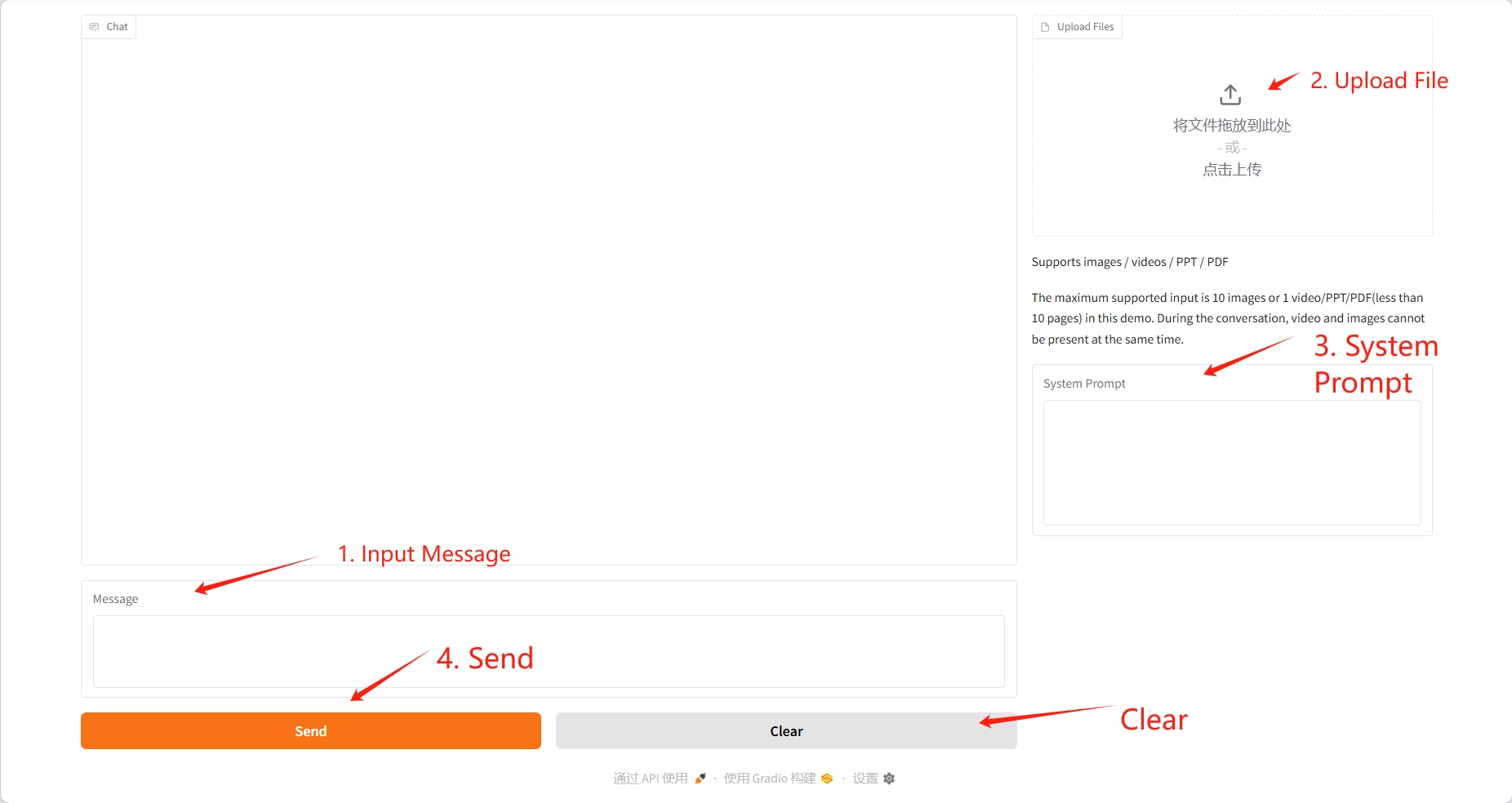

2. 使用步骤

若显示「Bad Gateway」,这表示模型正在初始化,由于模型较大,请等待约 2-3 分钟后刷新页面。

上传的视频最好不超过 10 秒,PDF 和 PPT 不超过 10 页,在对话过程中,视频和图片不能同时存在。建议每完成一次对话都进行清除操作。

四、交流探讨

🖌️ 如果大家看到优质项目,欢迎后台留言推荐!另外,我们还建立了教程交流群,欢迎小伙伴们扫码备注【SD 教程】入群探讨各类技术问题、分享应用效果↓

引用信息

本项目引用信息如下:

@misc{glmvteam2025glm41vthinkingversatilemultimodalreasoning,

title={GLM-4.1V-Thinking: Towards Versatile Multimodal Reasoning with Scalable Reinforcement Learning},

author={GLM-V Team and Wenyi Hong and Wenmeng Yu and Xiaotao Gu and Guo Wang and Guobing Gan and Haomiao Tang and Jiale Cheng and Ji Qi and Junhui Ji and Lihang Pan and Shuaiqi Duan and Weihan Wang and Yan Wang and Yean Cheng and Zehai He and Zhe Su and Zhen Yang and Ziyang Pan and Aohan Zeng and Baoxu Wang and Boyan Shi and Changyu Pang and Chenhui Zhang and Da Yin and Fan Yang and Guoqing Chen and Jiazheng Xu and Jiali Chen and Jing Chen and Jinhao Chen and Jinghao Lin and Jinjiang Wang and Junjie Chen and Leqi Lei and Letian Gong and Leyi Pan and Mingzhi Zhang and Qinkai Zheng and Sheng Yang and Shi Zhong and Shiyu Huang and Shuyuan Zhao and Siyan Xue and Shangqin Tu and Shengbiao Meng and Tianshu Zhang and Tianwei Luo and Tianxiang Hao and Wenkai Li and Wei Jia and Xin Lyu and Xuancheng Huang and Yanling Wang and Yadong Xue and Yanfeng Wang and Yifan An and Yifan Du and Yiming Shi and Yiheng Huang and Yilin Niu and Yuan Wang and Yuanchang Yue and Yuchen Li and Yutao Zhang and Yuxuan Zhang and Zhanxiao Du and Zhenyu Hou and Zhao Xue and Zhengxiao Du and Zihan Wang and Peng Zhang and Debing Liu and Bin Xu and Juanzi Li and Minlie Huang and Yuxiao Dong and Jie Tang},

year={2025},

eprint={2507.01006},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2507.01006},

}