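Abstract

Humans naturally adapt to diverse environments by learning the underlying rules across worlds with different dynamics, observations, and reward structures. In contrast, existing agents typically improve through self-evolving within a single domain, implicitly assuming a fixed environment distribution. Cross-environment learning has remained largely unmeasured, because there is no standardized collection of controllable, heterogeneous environments and no unified way to represent how agents learn.

This work addresses these gaps in two steps. First, the authors propose AutoEnv, an automated framework that treats environments as factorizable distributions over transitions, observations, and rewards, enabling low-cost generation of heterogeneous worlds (4.12 USD on average). Using AutoEnv, they construct AutoEnv-36, a dataset of 36 environments with 358 validated levels, on which seven language models achieve only 12-49% normalized reward, demonstrating the difficulty of AutoEnv-36.

Second, they formalize agent learning as a component-centric process driven by three stages, Selection, Optimization, and Evaluation, applied to an improvable agent component. Using this formulation, they design eight learning methods and evaluate them on AutoEnv-36. Empirically, the performance gain of any single learning method decreases sharply as the number of environments increases, showing that fixed learning methods do not scale across heterogeneous environments. Adaptively selecting the learning method per environment substantially improves performance, but shows diminishing returns as the method search space expands.

These results highlight both the necessity and the current limitations of agent learning for scalable cross-environment generalization, and establish AutoEnv and AutoEnv-36 as a testbed for research on cross-environment agent learning. The code is available at https://github.com/FoundationAgents/AutoEnv.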

Summarization

Researchers from HKUST (Guangzhou), DeepWisdom, et al. propose AutoEnv, an automated framework that treats environments as factorizable distributions to efficiently generate the heterogeneous AutoEnv-36 dataset, thereby establishing a unified testbed for measuring and formalizing agent learning scalability across diverse domains.

Introduction

Developing intelligent agents that can adapt across diverse environments, much like humans transition from board games to real-world tasks, remains a critical challenge in AI. While recent language agents perform well in specific domains like coding or web search, their success relies heavily on human-designed training data and narrow rule sets. Progress is currently stalled by two infrastructure gaps: the lack of an extensible collection of environments with heterogeneous rules, and the absence of a unified framework to represent how agents learn. Existing benchmarks rely on small, manually crafted sets that fail to test cross-environment generalization, while learning methods remain siloed as ad-hoc scripts that are difficult to compare systematically.

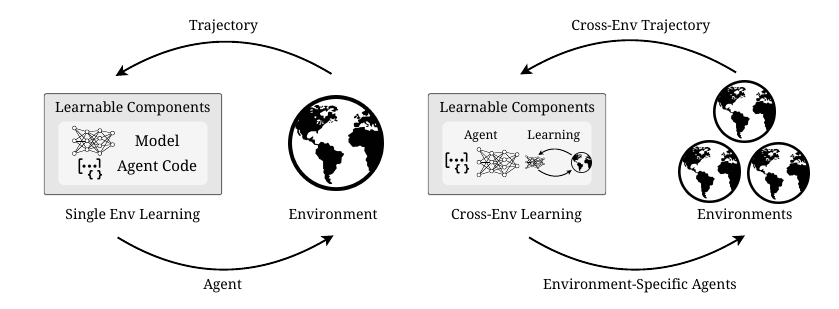

To bridge this gap, the authors introduce AutoEnv, an automated framework that generates diverse executable environments, alongside a formal component-centric definition of agentic learning.

The key innovations include:

- Automated Environment Generation: The system uses a multi-layer abstraction process to create AutoEnv-36, a dataset of 36 distinct environments and 358 validated levels at a low average cost of $4.12 per environment.

- Unified Learning Formalism: The researchers define agentic learning as a structured process involving Selection, Optimization, and Evaluation, allowing distinct strategies (such as prompt optimization or code updates) to be compared within a single configuration space.

- Cross-Environment Analysis: Empirical results demonstrate that fixed learning strategies yield diminishing returns as environment diversity increases, highlighting the necessity for adaptive methods that tailor learning components to specific rule distributions.

Dataset

Dataset Composition and Sources

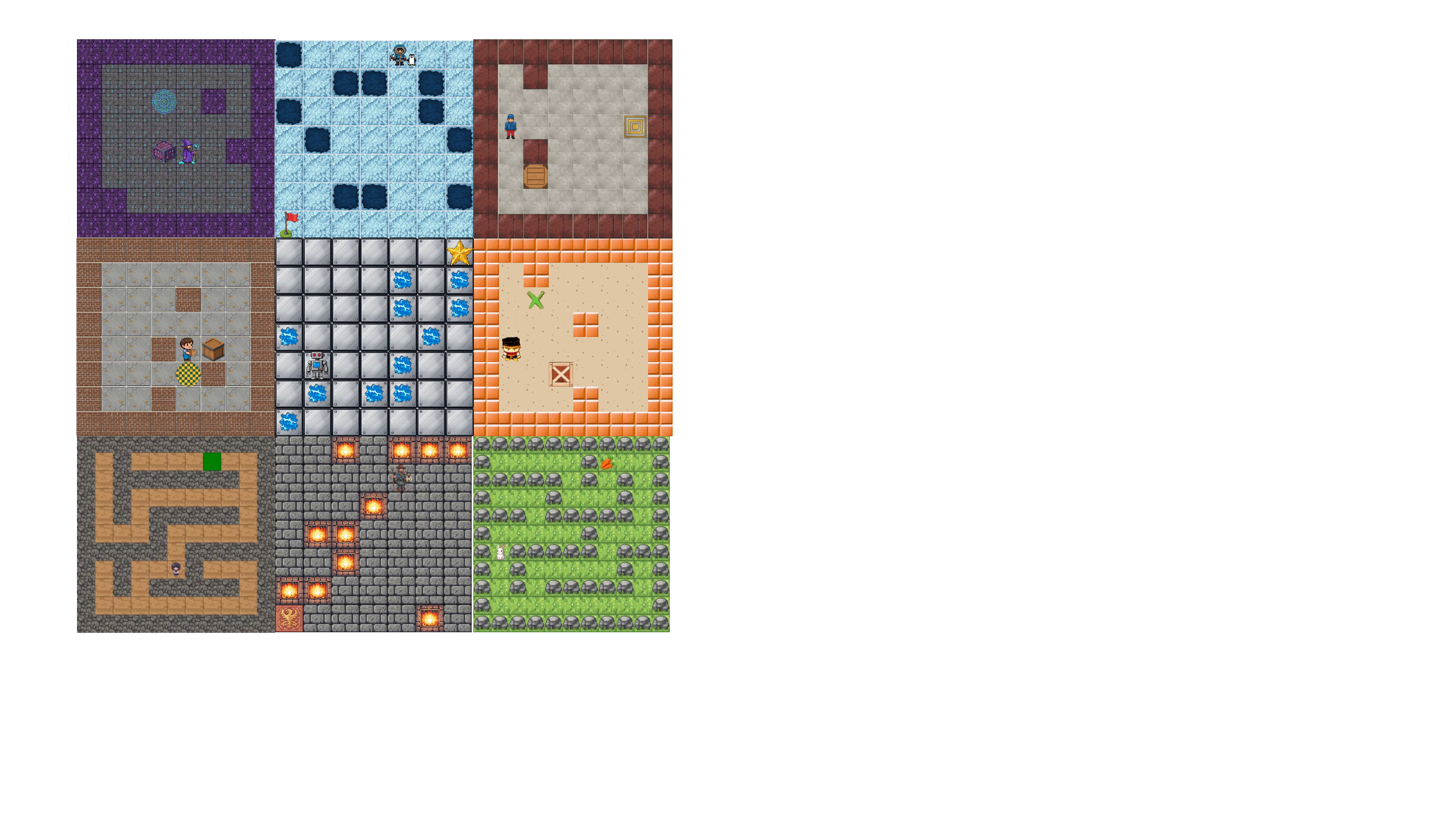

- The authors introduce AutoEnv-36, a benchmark dataset consisting of 36 distinct reinforcement learning environments.

- The environments originate from an initial pool of 100 themes generated using the AutoEnv framework, primarily utilizing text-only SkinEnv rendering.

- While the main dataset focuses on text-based interactions, the authors also explore multi-modal generation by integrating coding agents with image generation models.

Subset Details and Filtering

- Verification Process: The initial 100 environments underwent a three-stage verification pipeline, resulting in 65 viable candidates.

- Selection Criteria: The final 36 environments were selected to maximize diversity in rule types and difficulty, filtering out tasks that were either too trivial or too hard for meaningful evaluation.

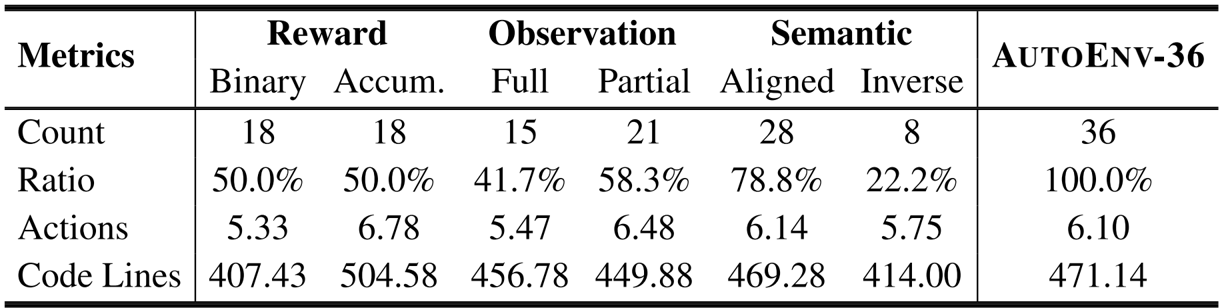

- Reward Distribution: The dataset is split evenly (50/50) between binary rewards (success/failure at the end) and accumulative rewards (scores collected over time).

- Observability: The environments favor partial observability (58.3%) over full observability (41.7%) to reflect realistic information constraints.

- Semantic Alignment: Most environments (77.8%) use aligned semantics, where descriptions match rules, while a subset (22.2%) uses inverse semantics (e.g., "poison restores health") to test robustness against misleading instructions.

Usage in Evaluation

- The paper utilizes the dataset to evaluate agent performance across specific capability dimensions, including navigation, memory, resource control, pattern matching, and planning.

- The authors use the inverse semantic subset specifically to force agents to rely on interaction rather than surface wording.

- The environments present moderate structural complexity, featuring an average of 6.10 available actions and approximately 471 lines of implementation code per task.

Processing and Metadata

- File Structure: Each environment is organized with specific metadata files, including YAML configurations, Python implementation scripts, reward computation logic, and natural language agent instructions.

- Standardization: The dataset employs a unified interface for action spaces and observations, supporting both procedural generation and fixed level loading.

- Feature Tagging: The authors explicitly tag each environment with the primary skills required (such as "Multi-agent" or "Long-horizon Planning") to facilitate detailed performance analysis.

Method

The authors leverage a component-centric framework for agent learning, formalized through four basic objects—candidate, component, trajectory, and metric—and three iterative stages: Selection, Optimization, and Evaluation. A candidate represents a version of the agent, encapsulating its internal components and associated metadata such as recent trajectories and performance metrics. Components are modifiable parts of the agent, including prompts, agent code, tools, or the underlying model. During interaction with an environment, a candidate produces a trajectory, which is then used to compute one or more metrics, such as success rate or reward. The learning process proceeds through the three stages: Selection chooses candidates from the current pool based on a specified rule (e.g., best-performing or Pareto-optimal); Optimization modifies the selected candidates' target components using an optimization model that analyzes the candidate's structure, past trajectories, and metrics to propose edits; and Evaluation executes the updated candidates in the environment to obtain new trajectories and compute updated metrics. This framework enables the expression of various existing learning methods, such as SPO for prompt optimization and AFlow for workflow optimization, by specifying different combinations of selection rules, optimization schemes, and target components.
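The Selection, Optimization, and Evaluation stages described above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `Candidate`, `select_best`, `learn`, `toy_optimize`, and `toy_evaluate` are hypothetical, the optimizer is a stand-in for an LLM-based optimization model, and the evaluator is a stand-in for running the agent in an environment.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Candidate:
    """One version of the agent: its components plus recorded metadata."""
    components: Dict[str, str]  # e.g. {"prompt": "..."}
    trajectories: List[str] = field(default_factory=list)
    metrics: Dict[str, float] = field(default_factory=dict)


def select_best(pool: List[Candidate], k: int = 1) -> List[Candidate]:
    """Selection: keep the k candidates with the highest recorded reward."""
    ranked = sorted(pool, key=lambda c: c.metrics.get("reward", 0.0), reverse=True)
    return ranked[:k]


def learn(
    pool: List[Candidate],
    optimize: Callable[[Candidate, str], str],
    evaluate: Callable[[Candidate], Tuple[str, float]],
    target: str = "prompt",
    iterations: int = 3,
) -> Candidate:
    """Run Selection -> Optimization -> Evaluation for a fixed number of rounds."""
    for _ in range(iterations):
        for parent in select_best(pool):
            # Optimization: propose an edit to the target component only.
            new_value = optimize(parent, target)
            child = Candidate({**parent.components, target: new_value})
            # Evaluation: run the child and record its trajectory and metric.
            trajectory, reward = evaluate(child)
            child.trajectories.append(trajectory)
            child.metrics["reward"] = reward
            pool.append(child)
    return select_best(pool)[0]


# Hypothetical stand-ins so the loop can run without a model or environment.
def toy_optimize(candidate: Candidate, target: str) -> str:
    return candidate.components[target] + " think step by step"


def toy_evaluate(candidate: Candidate) -> Tuple[str, float]:
    prompt = candidate.components["prompt"]
    reward = min(prompt.count("step by step") / 3, 1.0)
    return f"ran with prompt: {prompt}", reward


seed = Candidate({"prompt": "Solve the level."}, metrics={"reward": 0.0})
best = learn([seed], toy_optimize, toy_evaluate, iterations=3)
print(best.metrics["reward"])
```

Swapping `select_best` for a Pareto-style rule, or changing `target` from a prompt to agent code, would correspond to a different point in the configuration space the paragraph describes.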

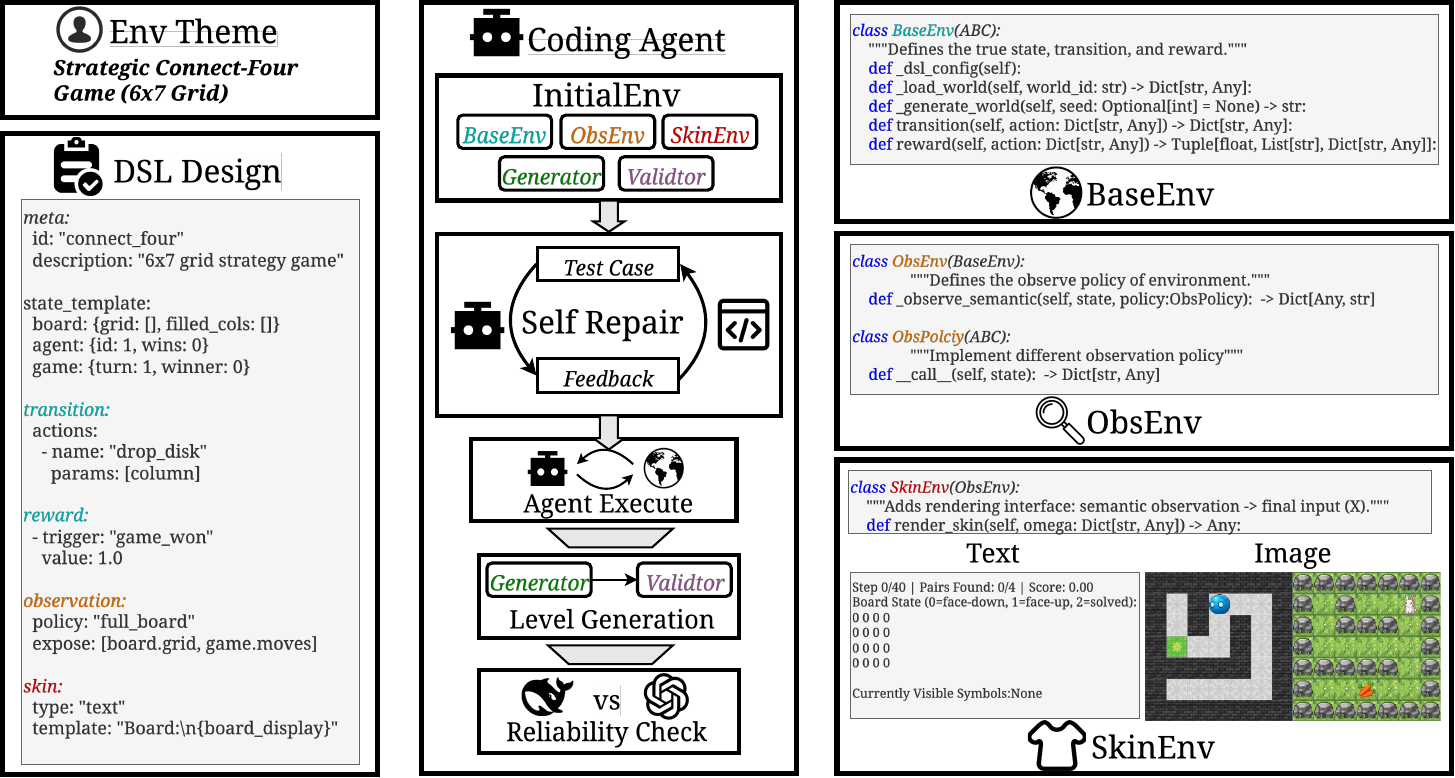

The environment generation process, referred to as AutoEnv, follows a pipeline that leverages a three-layer abstraction to systematically create and validate environments. The process begins with an environment theme, which is translated into a detailed language description. This description is then converted into a YAML-based domain-specific language (DSL) that specifies the core dynamics for the BaseEnv, the observation policy for the ObsEnv, the rendering rules for the SkinEnv, and the configuration of a level generator. Coding agents then read this DSL to implement the three-layer classes, the level generator, and concise agent-facing documentation. The initial code is subjected to a self-repair loop, where the agents run syntax and execution tests, collect error messages, and iteratively edit the code until the tests pass or a repair budget is exhausted. The final outputs are executable environment code for all three layers, a level generator, and a validator. The generated environments undergo a three-stage verification pipeline: Execution testing ensures runtime stability by running a simple ReAct-style agent; Level Generation checks that the generator can produce valid levels with properties like goal reachability; and Reliability testing uses differential model testing with two ReAct agents to ensure the reward structure is skill-based and not random. This pipeline ensures that the final environments are executable, can generate valid levels, and provide reliable, non-random rewards.
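The self-repair loop mentioned above (run tests, collect the error, edit, repeat until the tests pass or the budget runs out) might look roughly like the following. This is a sketch under assumptions: `run_checks`, `self_repair`, and `toy_fix` are hypothetical names, and `toy_fix` is a hard-coded stand-in for the coding agent that would propose an edit from the error message.

```python
from typing import Callable, Optional, Tuple


def run_checks(source: str) -> Optional[str]:
    """Return an error message if the code fails a syntax or execution test, else None."""
    try:
        compiled = compile(source, "<generated_env>", "exec")  # syntax test
        exec(compiled, {})  # execution test in a fresh namespace
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


def self_repair(
    source: str,
    propose_fix: Callable[[str, str], str],
    budget: int = 3,
) -> Tuple[str, bool]:
    """Edit the code until the checks pass or the repair budget is exhausted."""
    for _ in range(budget):
        error = run_checks(source)
        if error is None:
            return source, True
        # In the described pipeline a coding agent would read `error` here.
        source = propose_fix(source, error)
    return source, run_checks(source) is None


# Hypothetical broken output: the function header is missing its colon.
broken = "def step(state, action)\n    return state + action\n"


def toy_fix(source: str, error: str) -> str:
    return source.replace("action)\n", "action):\n")


fixed, ok = self_repair(broken, toy_fix)
print(ok)
```

The budget bounds the cost of a failing generation: with `budget=0` the code is only checked, never edited, so the broken input above is reported as failing.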

The environment abstraction is structured into three distinct layers to support systematic generation and agent interaction. The BaseEnv layer implements the core dynamics of the environment, including the state space, action space, transition function, reward function, and termination predicate, capturing the fundamental rules and maintaining the full world state. The ObsEnv layer specializes the observation function by applying configurable observation policies to the underlying state and transitions from the BaseEnv, determining what information is exposed to the agent. This allows for controlled variation in information availability, from full observability to strong partial observability. The SkinEnv layer applies rendering on top of the ObsEnv, converting observations into agent-facing modalities such as natural language text or images. This layered design enables the same observation policy to be paired with different skins, creating environments that appear semantically different to the agent while sharing the same underlying rules. The code implementation of these layers is provided in the appendix, with the BaseEnv defining the true state, transition, and reward, the ObsEnv adding an observation interface, and the SkinEnv adding a rendering interface that transforms semantic observations into final inputs.
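To make the layering concrete, here is a toy one-dimensional corridor split into the three layers. It is a sketch, not the appendix code: the use of inheritance, the method names `step`, `observe`, and `render`, and the `radius` and `vocab` parameters are all assumptions chosen for illustration.

```python
class BaseEnv:
    """Core dynamics: full state, transition, reward, and termination."""

    def __init__(self, size=5):
        self.size = size
        self.pos = 0  # true world state; the goal is the last cell

    def step(self, action):
        """Move by -1 or +1, clamped to the corridor; reward 1.0 on reaching the goal."""
        self.pos = max(0, min(self.size - 1, self.pos + action))
        done = self.pos == self.size - 1
        return (1.0 if done else 0.0), done


class ObsEnv(BaseEnv):
    """Observation policy: decides which parts of the state the agent may see."""

    def __init__(self, size=5, radius=None):
        super().__init__(size)
        self.radius = radius  # None means full observability

    def observe(self):
        goal = self.size - 1
        visible = self.radius is None or abs(goal - self.pos) <= self.radius
        return {"pos": self.pos, "goal": goal if visible else None}


class SkinEnv(ObsEnv):
    """Rendering: turns the semantic observation into agent-facing text."""

    def __init__(self, size=5, radius=None, vocab=None):
        super().__init__(size, radius)
        self.vocab = vocab or {"goal": "exit"}

    def render(self):
        obs = self.observe()
        where = "unknown" if obs["goal"] is None else str(obs["goal"])
        return f"You are at cell {obs['pos']}. The {self.vocab['goal']} is at cell {where}."


# Same rules and observation policy, two different skins.
env_a = SkinEnv(radius=1, vocab={"goal": "exit"})
env_b = SkinEnv(radius=1, vocab={"goal": "trap"})
print(env_a.render())
print(env_b.render())
```

The second skin labels the rewarding cell a "trap", which is the flavor of inverse semantics described in the dataset section: the wording changes while `step` and its reward stay identical.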

Experiment

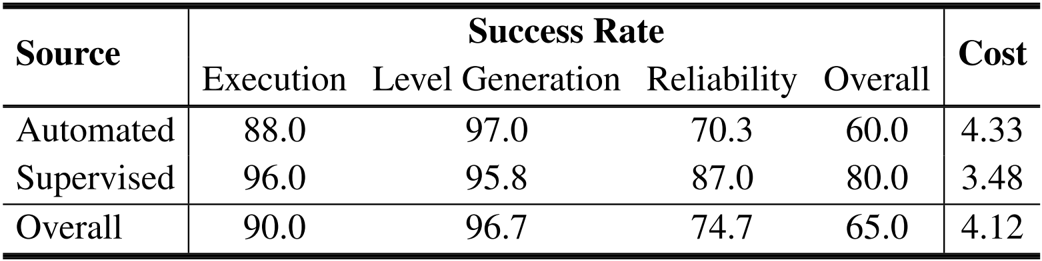

- Environment Generation Analysis: Evaluated AutoEnv across 100 themes, achieving 90.0% execution success and 96.7% level generation success with an average cost of $4.12 per environment. Human review of themes improved overall success rates from 60.0% to 80.0% by reducing rule inconsistencies.

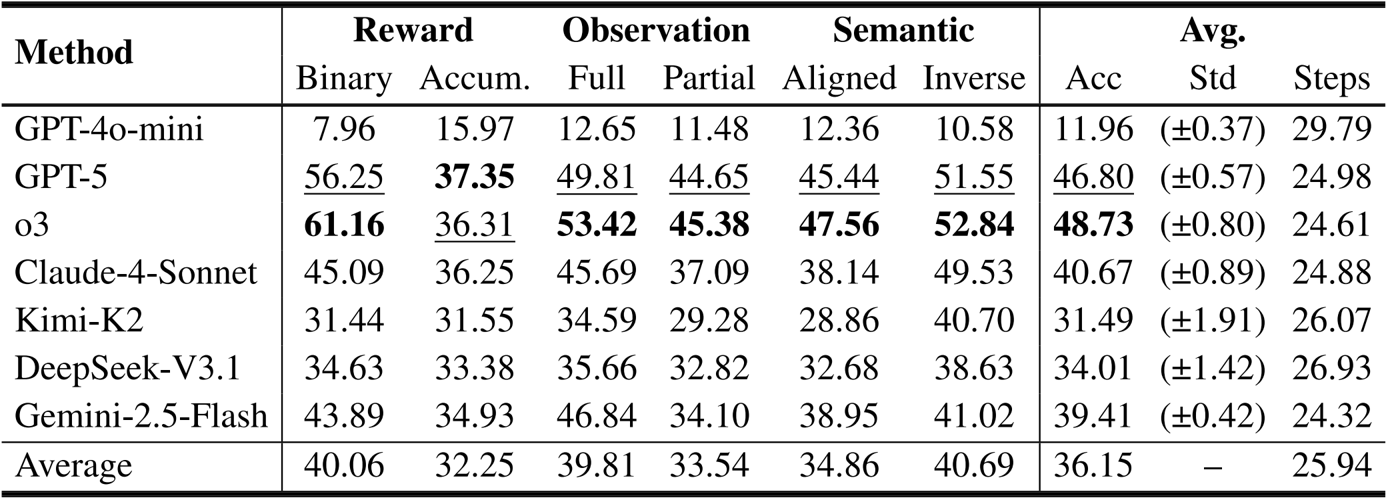

- Model Performance Benchmarking: Validated AutoEnv-36 as a capability benchmark by testing 7 models; O3 achieved the highest normalized accuracy (48.73%), surpassing GPT-5 (46.80%) and significantly outperforming GPT-4o-mini (11.96%).

- Semantic Inversion Control: Conducted a "Skin Inverse" experiment which showed that inverting only semantic displays caused an 80% performance drop, clarifying that inverse semantics inherently increase difficulty and that higher scores in original inverse environments stemmed from simpler structural generation.

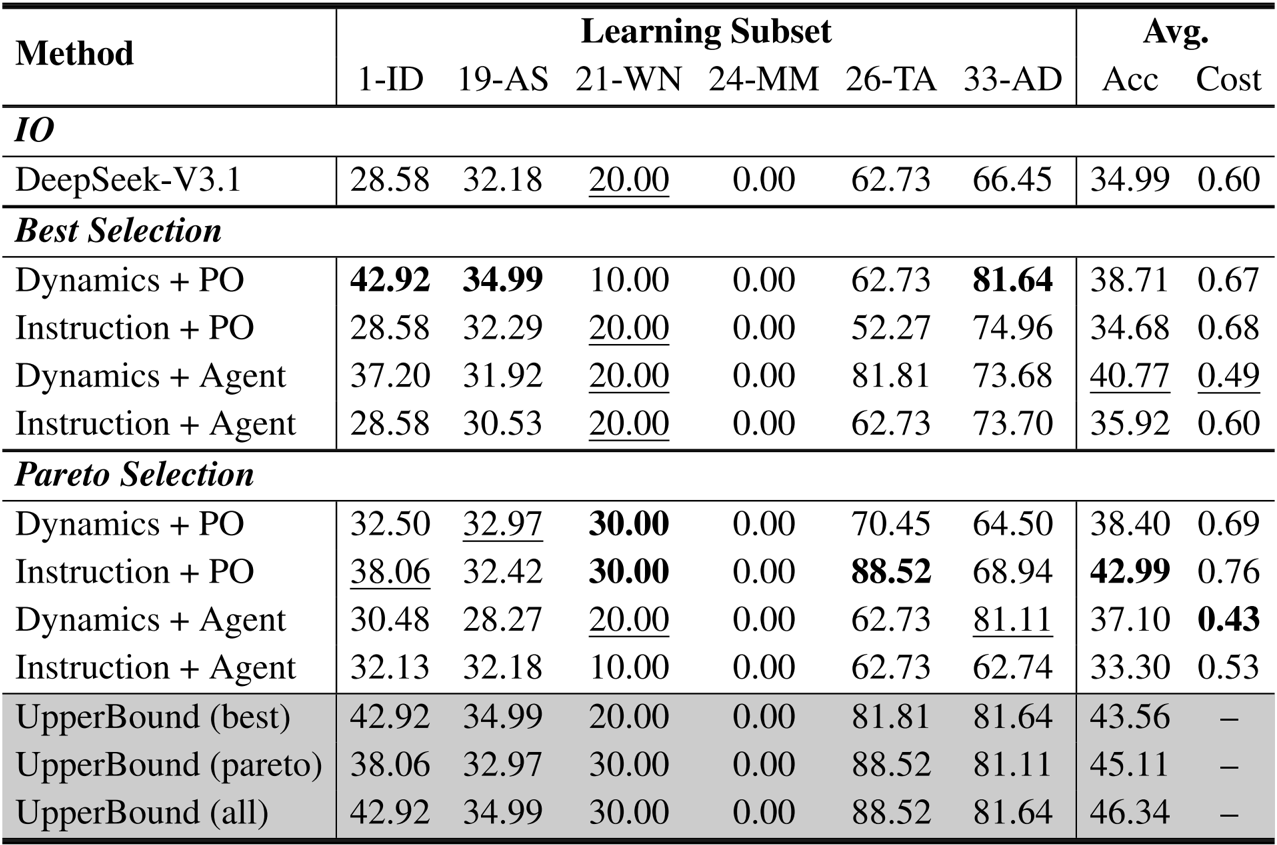

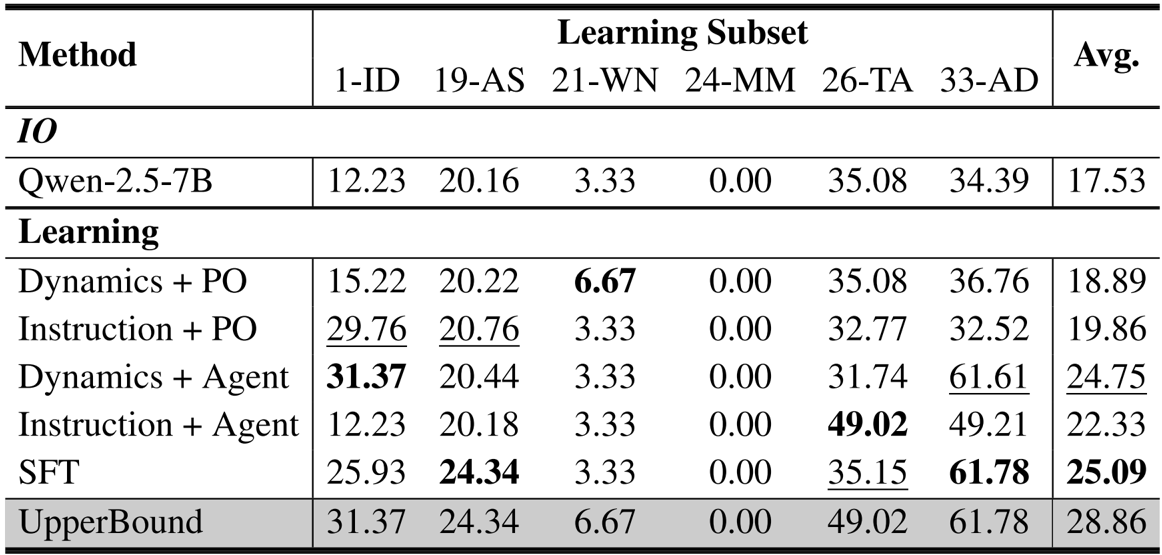

- Learning Method Diversity: Experiments on a 6-environment subset using Qwen-2.5-7B and DeepSeek-V3.1 revealed strong environment-method interactions. On DeepSeek-V3.1, the upper bound using 8 methods reached 46.34%, exceeding the best single method performance of 42.99%.

- Adaptive Learning Potential: Scaling to 36 environments demonstrated that single learning methods yield marginal gains over baselines (42.40% vs. 39.41%), whereas the adaptive upper bound achieved 47.75%, highlighting the necessity of environment-specific strategy selection.

The authors use the table to summarize the characteristics of the 36 environments in the AutoEnv-36 benchmark, showing the number of environments and their distribution across reward, observation, and semantic types. Binary and accumulative rewards each cover 50.0% of the environments, full-observation environments make up 41.7%, and aligned-semantics environments are the most common at 77.8%.

The authors use the table to evaluate the performance of their AutoEnv generation pipeline across 100 environment themes, distinguishing between purely LLM-generated (Automated) and human-reviewed (Supervised) themes. Results show that human review significantly improves overall success rates from 60.0% to 80.0%, primarily by reducing rule inconsistencies, while also lowering generation costs. The overall success rate across all themes is 65.0%, with 90.0% execution success, 96.7% level generation success, and 74.7% reliability verification rate.
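The reported rates can be cross-checked with a few lines of arithmetic. Two assumptions are made here that the text does not state: that each stage rate is measured on the environments that passed the previous stage, and that the 65.0% overall rate is a weighted average of the Automated and Supervised groups.

```python
# Stage rates as reported above.
execution, level_gen, reliability = 0.900, 0.967, 0.747

# Assumption: stages are sequential, so the rates multiply.
overall = execution * level_gen * reliability
print(f"{overall:.1%}")  # 65.0%

# Assumption: overall = (1 - s) * automated + s * supervised; solve for s.
automated, supervised, reported_overall = 0.60, 0.80, 0.65
supervised_share = (reported_overall - automated) / (supervised - automated)
print(f"{supervised_share:.0%}")  # 25%
```

Under these assumptions the three stage rates reproduce the 65.0% overall figure, and the group rates imply that about a quarter of the 100 themes were human-reviewed.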

Results show that O3 achieves the highest performance at 48.73% normalized accuracy, followed by GPT-5 at 46.80%, while GPT-4o-mini obtains the lowest at 11.96%. The performance gap across models validates the benchmark's ability to differentiate agent capabilities. Models also score higher on binary-reward environments than on accumulative ones, and higher under full observation than under partial observation.

The authors use a subset of six environments to evaluate different learning methods, including training-free approaches and supervised fine-tuning (SFT), on the Qwen-2.5-7B model. Results show that the upper bound performance, achieved by selecting the best method per environment, is 28.86%, significantly higher than the best single method (SFT at 25.09%), indicating that optimal learning strategies are environment-specific and that combining diverse methods improves overall performance.

The authors use DeepSeek-V3.1 to evaluate five learning methods across six environments, comparing performance under Best Selection and Pareto Selection. Results show that the upper bound performance increases with more methods, but gains diminish after four methods, with the best single method achieving 38.71% accuracy and the upper bound reaching 46.34% when all methods are combined.
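The upper bound used in these comparisons, picking the best method for each environment and then averaging, can be sketched as follows. The scores are invented placeholders rather than results from the paper, the names `upper_bound` and `best_subset_curve` are hypothetical, and taking the best subset of each size is only one plausible way to trace how the bound grows with the number of methods.

```python
from itertools import combinations
from statistics import mean

# Hypothetical per-environment scores (NOT results from the paper).
scores = {
    "method_a": [0.40, 0.20, 0.30],
    "method_b": [0.10, 0.50, 0.30],
    "method_c": [0.35, 0.45, 0.35],
}


def upper_bound(scores, methods):
    """Mean over environments of the best score among the given methods."""
    n_envs = len(next(iter(scores.values())))
    return mean([max(scores[m][e] for m in methods) for e in range(n_envs)])


def best_subset_curve(scores):
    """Best achievable upper bound when only k methods are available, for each k."""
    names = list(scores)
    return [
        max(upper_bound(scores, subset) for subset in combinations(names, k))
        for k in range(1, len(names) + 1)
    ]


best_single = max(mean(values) for values in scores.values())
all_methods = upper_bound(scores, list(scores))
curve = best_subset_curve(scores)
print(round(best_single, 4), round(all_methods, 4))
print([round(value, 4) for value in curve])
```

Because each added method can only raise the per-environment maximum, the curve never decreases; its increments shrink when new methods mostly win on environments that an existing method already handles well.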