B. Y. Yan, Chaofan Li, Hongjin Qian, Shuqi Lu, Zheng Liu

Abstract

Memory is critical for AI agents, yet the widely adopted "static memory" approach, which aims to create readily available memory in advance, inevitably suffers from severe information loss. To address this limitation, we propose a novel framework called General Agentic Memory (GAM). GAM follows the principle of "just-in-time (JIT) compilation": it keeps only simple but useful memory during the offline stage and focuses on creating optimized contexts for its client at runtime. To this end, GAM adopts a dual design consisting of two components:

1) Memorizer: highlights key historical information with a lightweight memory while preserving the complete history in a universal page-store.
2) Researcher: guided by the pre-constructed memory, retrieves and integrates useful information from the page-store to serve online requests.

This design allows GAM to effectively leverage the agentic capabilities and test-time scalability of frontier large language models (LLMs), while also facilitating end-to-end performance optimization through reinforcement learning. In our experimental study, we demonstrate that GAM achieves substantial improvements over existing memory systems across a variety of memory-grounded task completion scenarios.

Summary

Researchers from the Beijing Academy of Artificial Intelligence, Peking University, and Hong Kong Polytechnic University introduce General Agentic Memory (GAM), a framework that overcomes the limitations of static memory by applying a just-in-time compilation principle: a dual Memorizer-Researcher architecture dynamically constructs optimized contexts from a universal page-store to improve memory-grounded task completion.

Introduction

AI agents are increasingly deployed in complex fields like software engineering and scientific research, creating an urgent need to manage rapidly expanding contexts. As these agents integrate internal reasoning with external feedback, effective memory systems are essential for maintaining continuity and accuracy without overwhelming the model's context window.

Prior approaches typically rely on "Ahead-of-Time" compilation, where data is compressed into static memory offline. This method suffers from inevitable information loss during compression, struggles with ad-hoc requests due to rigid structures, and depends heavily on manual heuristics that hinder cross-domain generalization.

The authors propose General Agentic Memory (GAM), a framework based on "Just-in-Time" compilation that preserves complete historical data while generating customized contexts on demand. By treating memory retrieval as a dynamic search process rather than a static lookup, GAM keeps the full history accessible without compression loss and uses a dual-agent system to tailor the retrieved information to each query.

Key innovations include:

- Dual-Agent Architecture: The system employs a "Memorizer" to index historical sessions and a "Researcher" to perform iterative deep research and reflection to satisfy complex client needs.

- High-Fidelity Adaptability: By maintaining full history in a database and retrieving only what is necessary at runtime, the framework avoids compression loss and adapts dynamically to specific tasks.

- Self-Optimizing Generalization: The approach eliminates the need for domain-specific rules, allowing the system to operate across diverse scenarios and improve continuously through reinforcement learning.

Method

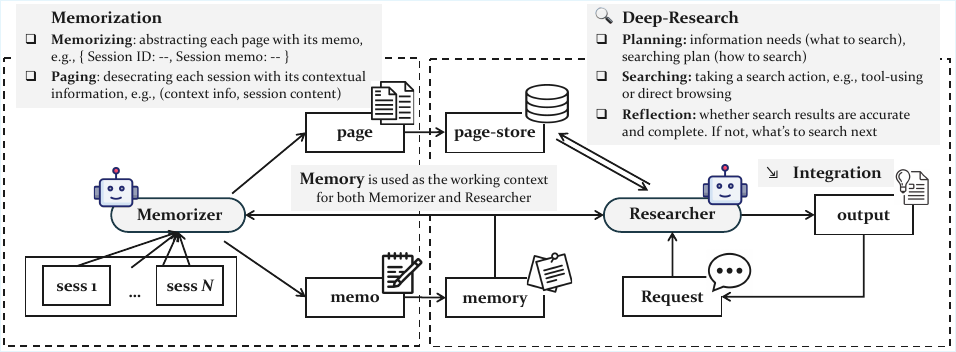

The authors propose a dual-module architecture for General Agentic Memory (GAM), designed to manage long agent trajectories efficiently while maintaining task performance. The framework operates in two distinct phases: an offline memorization stage and an online research stage. As shown in the figure above, the system consists of a memorizer and a researcher, both of which are agents built on large language models (LLMs). During the offline phase, the memorizer processes the agent's historical trajectory, producing a compact memory and preserving the complete trajectory in a page-store. The researcher, in contrast, operates online: it addresses client requests by retrieving and integrating relevant information from the page-store, ultimately producing an optimized context for downstream task completion.

During the offline stage, the memorizer performs two operations for each incoming session $s_i$. First, a memorizing step generates a concise, well-structured memo $\mu_i$ that captures the crucial information of the new session. The memo is conditioned on both the current session and the existing memory $m_i$, and the memory is updated incrementally by adding the new memo: $m_{i+1} = m_i \cup \{\mu_i\}$. Second, a paging step creates a complete page for the session. It first generates a header $h_i$ containing essential contextual information from the preceding trajectory, then uses the header to decorate the session content, forming a new page that is appended to the page-store $p$. Together, these two steps give the system both a lightweight, optimized memory and a comprehensive, semantically consistent record of the agent's history.
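The two offline steps can be sketched as a per-session update. This is a minimal illustration under stated assumptions, not the authors' code: `Page`, `MemorizerState`, and `process_session` are hypothetical names, the `memorize` and `make_header` callables stand in for the memorizer's LLM calls, and deriving the header from the memory built so far is an assumption. The toy callables in the usage simply keep each session's first sentence as its memo.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Page:
    page_id: int
    header: str   # h_i: context from the preceding trajectory
    content: str  # s_i: the raw session, stored verbatim (no compression)


@dataclass
class MemorizerState:
    memory: List[str] = field(default_factory=list)       # m_i: accumulated memos
    page_store: List[Page] = field(default_factory=list)  # p: one page per session


def process_session(
    state: MemorizerState,
    session: str,
    memorize: Callable[[str, List[str]], str],
    make_header: Callable[[str, List[str]], str],
) -> None:
    """Hypothetical offline step for one session s_i: memorize, then page."""
    # Memorizing: mu_i is conditioned on the session s_i and the current memory m_i.
    memo = memorize(session, state.memory)
    # Paging (assumption): the header is built from the memory *before* this update.
    header = make_header(session, state.memory)
    # m_{i+1} = m_i plus the new memo
    state.memory.append(memo)
    # The page keeps the full session text, decorated with its header.
    state.page_store.append(Page(len(state.page_store), header, session))


# Toy stand-ins for the LLM calls (illustrative only).
def first_sentence(session: str, memory: List[str]) -> str:
    return session.split(".")[0]


def latest_memo_header(session: str, memory: List[str]) -> str:
    return f"[{len(memory)} earlier memos] " + (memory[-1] if memory else "start")


state = MemorizerState()
for s in ["Alice moved to Paris. She likes jazz.",
          "Bob visited Alice. They went to a concert."]:
    process_session(state, s, first_sentence, latest_memo_header)

print(state.memory)                 # → ['Alice moved to Paris', 'Bob visited Alice']
print(state.page_store[1].header)   # → [1 earlier memos] Alice moved to Paris
```

Because every page stores the raw session, nothing is discarded at memorization time; the memo list only needs to be good enough to guide later retrieval.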

In the online stage, the researcher addresses a client's request $r$. It begins with a planning step, using chain-of-thought reasoning to analyze the information needs of $r$. Based on this analysis, it generates a concrete search plan with a provided search toolkit $T$, which includes an embedding model for vector search, a BM25 retriever for keyword-based search, and an ID-based retriever for direct page exploration. The planning step is guided by a dedicated prompt that instructs the model to output a JSON object specifying the required tools and their parameters.
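A minimal sketch of two of the three search tools, and of executing a JSON search plan, is shown here. The plan schema (`{"tools": [{"name": ..., ...}]}`) and the names `tokenize`, `BM25`, and `run_plan` are hypothetical; the keyword tool is standard Okapi BM25 with common default parameters (k1 = 1.5, b = 0.75), and the embedding tool is omitted because it requires a real embedding model.

```python
import json
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"\w+", text.lower())


class BM25:
    """Okapi BM25 over the page-store (the keyword search tool)."""

    def __init__(self, docs: List[str], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        self.tfs = [Counter(tokenize(d)) for d in docs]
        self.lens = [sum(tf.values()) for tf in self.tfs]
        self.avgdl = sum(self.lens) / len(docs)
        df = Counter(term for tf in self.tfs for term in tf)
        n = len(docs)
        # Non-negative IDF variant: ln((N - df + 0.5) / (df + 0.5) + 1)
        self.idf = {t: math.log((n - c + 0.5) / (c + 0.5) + 1) for t, c in df.items()}

    def score(self, query: str, i: int) -> float:
        tf = self.tfs[i]
        norm = self.k1 * (1 - self.b + self.b * self.lens[i] / self.avgdl)
        total = 0.0
        for term in tokenize(query):
            f = tf.get(term, 0)
            if f:
                total += self.idf[term] * f * (self.k1 + 1) / (f + norm)
        return total

    def search(self, query: str, top_k: int) -> List[int]:
        scored = [(self.score(query, i), i) for i in range(len(self.tfs))]
        scored.sort(key=lambda x: (-x[0], x[1]))  # best score first, ties by id
        return [i for s, i in scored[:top_k] if s > 0]


def run_plan(plan_json: str, num_pages: int, bm25: BM25) -> List[int]:
    """Execute a hypothetical JSON search plan; return unique page ids in order."""
    plan = json.loads(plan_json)
    hits: List[int] = []
    for call in plan["tools"]:
        if call["name"] == "bm25":
            ids = bm25.search(call["query"], call.get("top_k", 3))
        elif call["name"] == "page_id":
            ids = [i for i in call["ids"] if 0 <= i < num_pages]
        else:
            # An "embedding" tool would need a real embedding model; omitted here.
            raise ValueError(f"unsupported tool: {call['name']}")
        for i in ids:
            if i not in hits:
                hits.append(i)
    return hits


pages = ["Alice moved to Paris in 2019.",
         "Bob likes jazz concerts.",
         "Alice and Bob met at a jazz bar in Paris."]
bm25 = BM25(pages)
plan = ('{"tools": [{"name": "bm25", "query": "jazz Paris", "top_k": 2},'
        ' {"name": "page_id", "ids": [0]}]}')
print(run_plan(plan, len(pages), bm25))  # → [2, 1, 0]
```

Page 2 ranks first because it matches both query terms; the ID-based call then adds page 0 directly, which is the kind of explicit page exploration the ID retriever allows.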

Given the search plan, the researcher executes the search actions in parallel and retrieves relevant pages $p_t$ from the page-store. It then integrates the information in these pages with the previous integration result $I$ for request $r$, producing an updated integration result. After each integration, the researcher performs a reflection step that outputs a binary indicator $y$ stating whether the information needed to answer the request has been fully collected. If information is still missing ($y = \text{No}$), the researcher generates a new, more focused request $r'$ that drives another round of planning, search, and integration. If the information is complete ($y = \text{Yes}$), the research process ends and the final integration result is returned as the optimized context. The reflection step is guided by a prompt that instructs the model to identify missing information and generate targeted follow-up retrieval questions.
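The research loop can be sketched with the LLM-backed steps abstracted as callables. This is a hedged sketch, not the paper's implementation: `deep_research`, the callable signatures, and `max_depth` (a cap on reflection rounds) are hypothetical; search actions run in a thread pool to mirror the parallel execution described above; and, as an assumption, integration stays keyed to the original request while planning uses the latest follow-up request.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

SearchAction = Callable[[], List[str]]  # one tool call from the plan


def deep_research(
    request: str,
    plan: Callable[[str, str], List[SearchAction]],
    integrate: Callable[[str, str, List[str]], str],
    reflect: Callable[[str, str], Tuple[bool, str]],
    max_depth: int = 3,
) -> str:
    """Hypothetical plan -> search -> integrate -> reflect loop."""
    integration = ""   # I: running integration result
    query = request    # r on the first round, r' afterwards
    for _ in range(max_depth):
        actions = plan(query, integration)
        # Run all planned search actions in parallel.
        with ThreadPoolExecutor() as pool:
            results = list(pool.map(lambda action: action(), actions))
        retrieved = [page for hits in results for page in hits]
        integration = integrate(request, integration, retrieved)
        complete, follow_up = reflect(request, integration)  # y = Yes / No
        if complete:
            break
        query = follow_up  # more focused request r'
    return integration


# Toy stand-ins for the LLM-backed steps (illustrative only).
PAGES = ["Alice moved to Paris.", "Paris is in France.", "Bob lives in Rome."]


def substring_search(term: str) -> SearchAction:
    return lambda: [p for p in PAGES if term in p]


def toy_plan(query: str, integration: str) -> List[SearchAction]:
    # Search for each capitalized word in the current query.
    return [substring_search(w.strip("?.,")) for w in query.split() if w[0].isupper()]


def toy_integrate(request: str, integration: str, pages: List[str]) -> str:
    lines = integration.splitlines()
    for p in pages:
        if p not in lines:
            lines.append(p)
    return "\n".join(lines)


def toy_reflect(request: str, integration: str) -> Tuple[bool, str]:
    if "France" in integration:
        return True, ""
    return False, "Which country contains Paris?"


context = deep_research("Which country does Alice live in?",
                        toy_plan, toy_integrate, toy_reflect)
print(context)
```

The first round finds only that Alice moved to Paris, so the toy reflection issues a follow-up request; the second round retrieves the page linking Paris to France and the loop stops. In this sketch, `max_depth` plays the role of reflection depth.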

Experiment

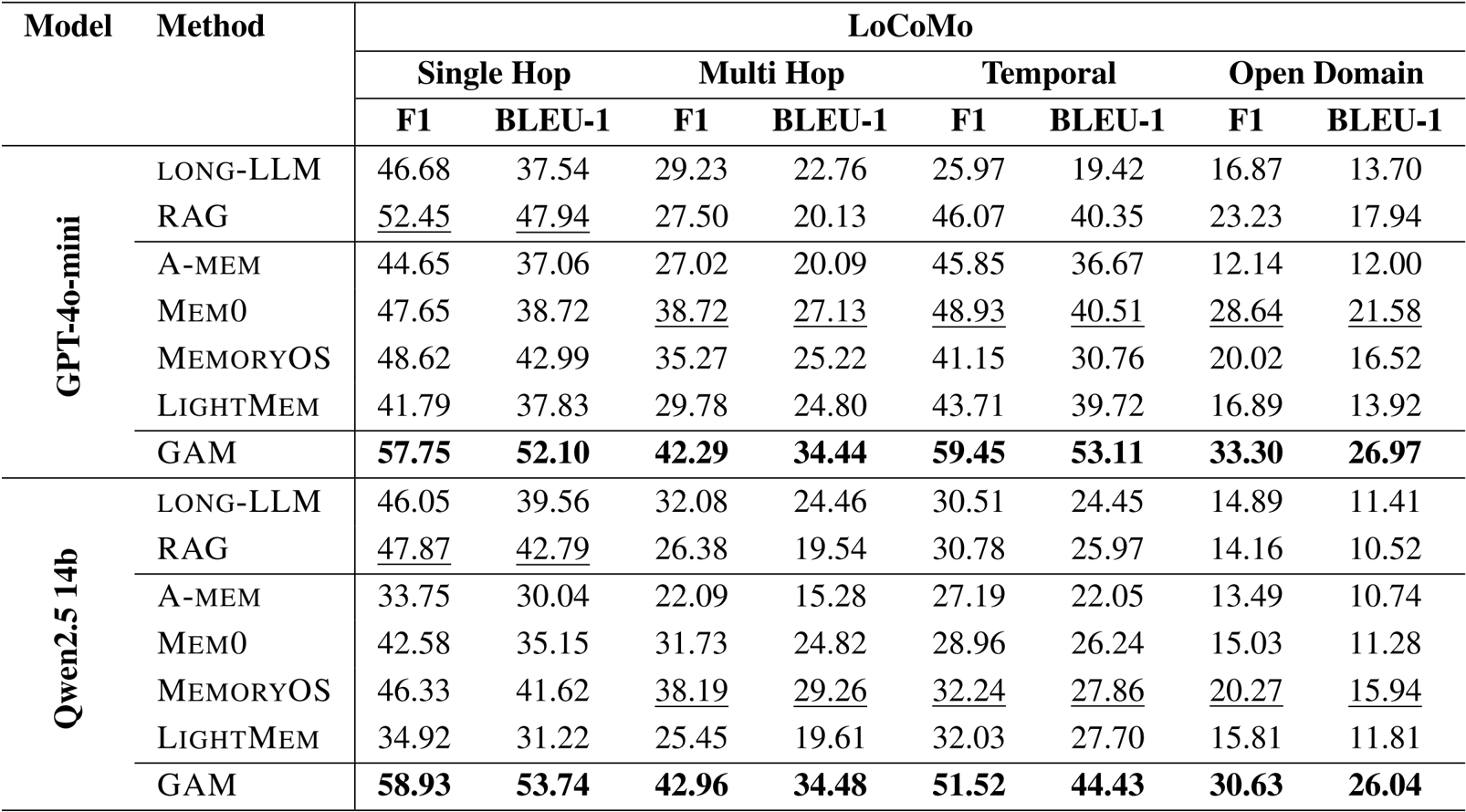

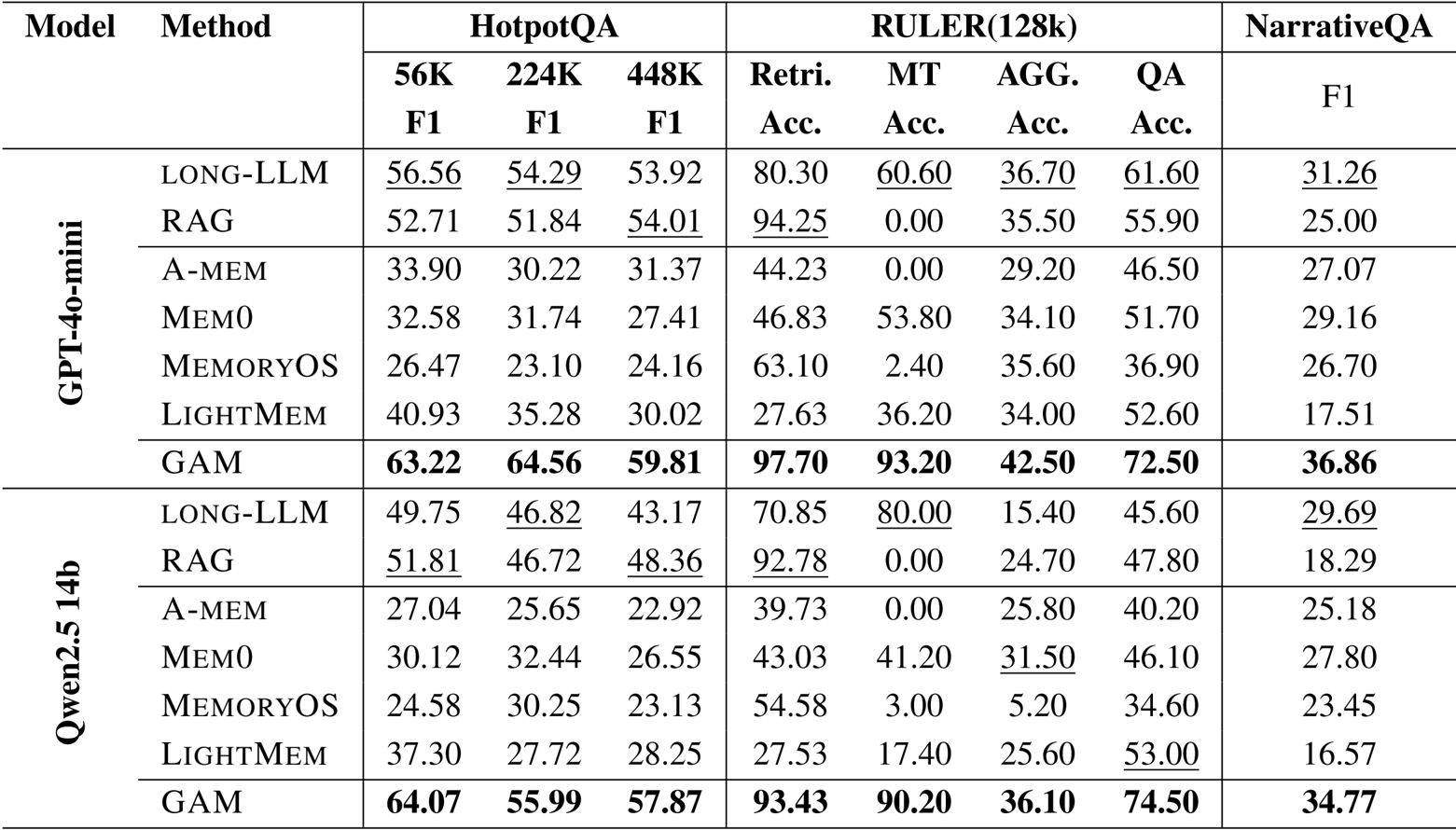

- Evaluated GAM against memory-free methods (Long-LLM, RAG) and memory-based baselines (e.g., Mem0, LightMem) using LoCoMo, HotpotQA, RULER, and NarrativeQA benchmarks.

- GAM consistently outperformed all baselines across every dataset, notably achieving over 90% accuracy on RULER multi-hop tracing tasks where other methods failed.

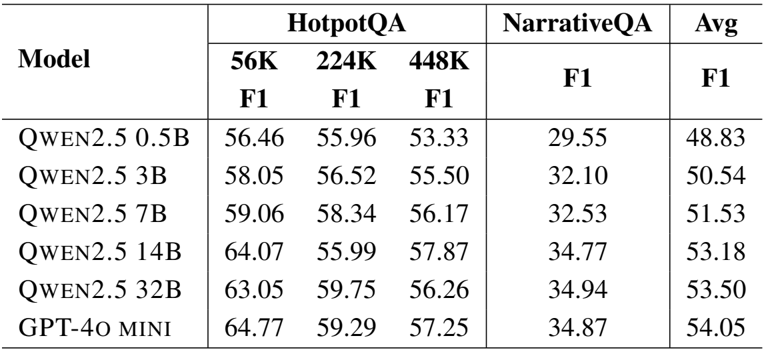

- Demonstrated robustness to varying context lengths, maintaining high performance on HotpotQA contexts ranging from 56K to 448K tokens.

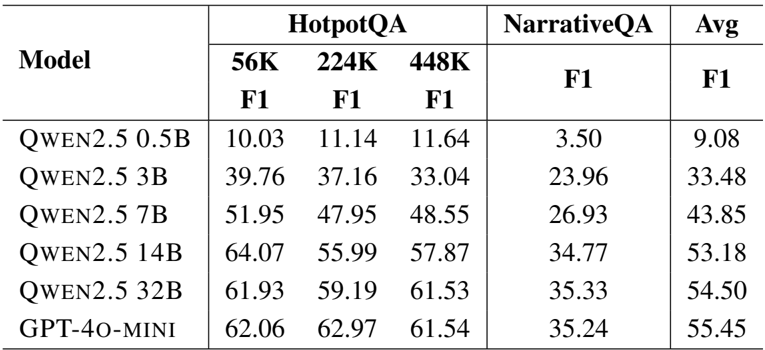

- Model scaling analysis indicated that larger backbone models improve results, with the research module showing significantly higher sensitivity to model size than the memorization module.

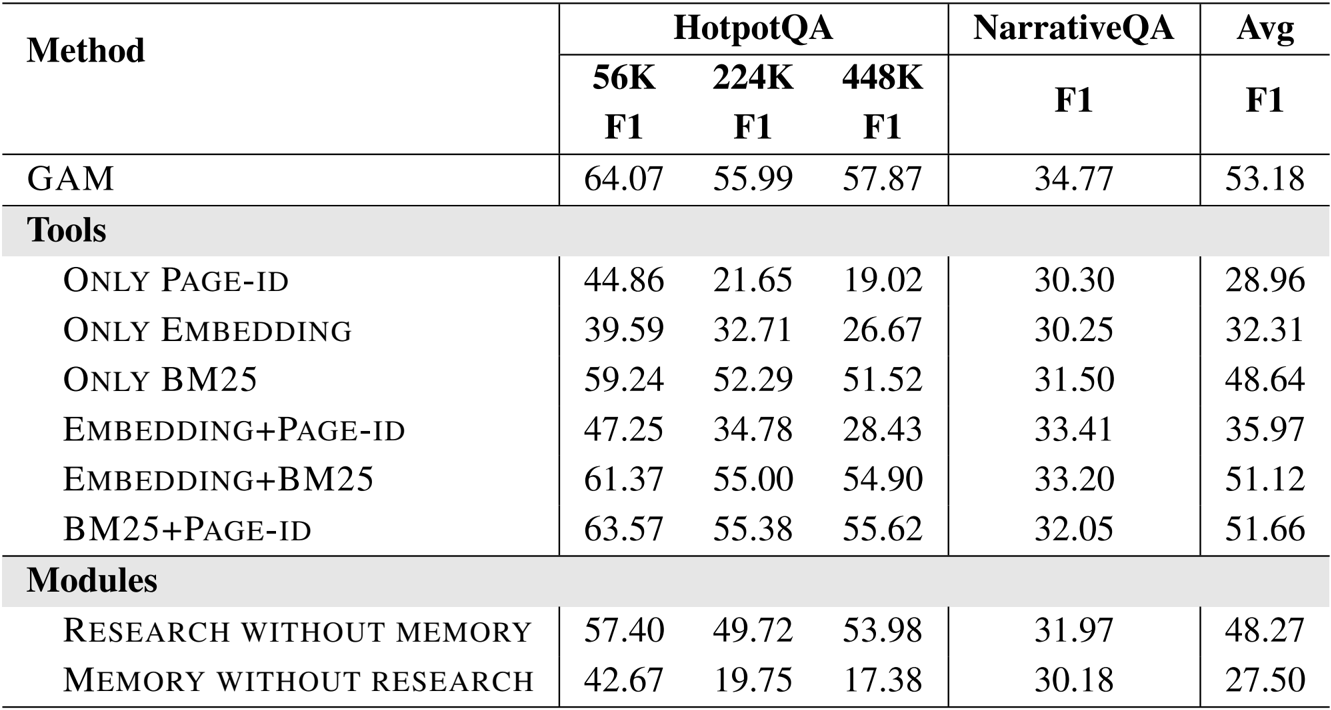

- Ablation studies confirmed that combining search tools (Page-id, Embedding, BM25) yields the best results and that removing the memory module causes substantial performance degradation.

- Increasing test-time computation, specifically through higher reflection depth and more retrieved pages, resulted in steady performance gains.

- Efficiency evaluations showed GAM incurs time costs comparable to Mem0 and MemoryOS while delivering superior cost-effectiveness.

GAM achieves the best performance across all benchmarks, consistently outperforming both memory-free and memory-based baselines on LoCoMo, HotpotQA, RULER, and NarrativeQA. Its gains over existing methods are largest on complex tasks requiring multi-hop reasoning and long-context understanding, while its efficiency remains competitive.

Across multiple long-context benchmarks, GAM achieves state-of-the-art results against both memory-free and memory-based baselines. Its accuracy gains are most pronounced on complex tasks requiring multi-hop reasoning and retrieval, particularly on HotpotQA and on RULER, where it exceeds 90% accuracy on multi-hop tracing tasks, and its performance stays stable across varying context lengths.

Running GAM with different LLM backbones on HotpotQA and NarrativeQA shows that larger models generally improve results. The GPT-4o-mini backbone yields the highest average F1 score, outperforming all Qwen2.5 variants, and performance rises consistently with model size, particularly on longer contexts.

Varying the backbone also isolates the impact of model size: larger models consistently improve performance, with GPT-4o-mini reaching the highest average F1 score (55.45) and the smallest model, Qwen2.5-0.5B, the lowest (9.08).

GAM achieves the highest performance across all benchmarks, and the ablation study shows that this depends on combining multiple search tools and on having both the memory and research modules: the full system with all tools and modules yields the best results, while removing either module significantly reduces performance.
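The ablation favors combining Page-id, Embedding, and BM25 search, but the summary does not say how results from different tools are merged. As one common option, and explicitly a swapped-in technique rather than GAM's documented method, reciprocal rank fusion (RRF) with the conventional constant k = 60 is sketched below; the function name and the per-tool rankings are made-up illustrations.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: Dict[str, List[int]], k: int = 60) -> List[int]:
    """Fuse ranked page-id lists: score(d) = sum over tools of 1 / (k + rank)."""
    scores: Dict[int, float] = defaultdict(float)
    for ranked_ids in rankings.values():
        for rank, page_id in enumerate(ranked_ids, start=1):
            scores[page_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by page id for determinism.
    return sorted(scores, key=lambda pid: (-scores[pid], pid))


# Hypothetical per-tool rankings for one search round.
rankings = {
    "bm25": [2, 1, 0],
    "embedding": [2, 0, 3],
    "page_id": [0],
}
print(reciprocal_rank_fusion(rankings))  # → [0, 2, 1, 3]
```

Page 0 ranks first because all three tools return it, so agreement across tools can outweigh a single top rank from one tool.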